- Chapter 4. Linear Methods for Classification

- \(\S\) 4.1. Introduction

- \(\S\) 4.2. Linear Regression of an Indicator Matrix

- \(\S\) 4.3. Linear Discriminant Analysis

- LDA from multivariate Gaussian

- Estimating parameters

- Simple correspondence between LDA and linear regression with two classes

- Practice beyond the Gaussian assumption

- Quadratic Discriminant Analysis

- Why LDA and QDA have such a good track record?

- \(\S\) 4.3.1. Regularized Discriminant Analysis

- \(\S\) 4.3.2. Computations for LDA

- \(\S\) 4.3.3. Reduced-Rank Linear Discriminant Analysis

- What if \(K>3\)? Principal components subspace

- Maximize between-class variance relative to within-class

- Algorithm for the generalized eigenvalue problem

- Summary

- Dimension reduction for classification

- Impact of prior information \(\pi_k\)

- Connection between Fisher’s reduced-rank discriminant analysis and regression of an indicator response matrix

- \(\S\) 4.4. Logistic Regression

- \(\S\) 4.4.1. Fitting Logistic Regression Models

- Maximum likelihood

- Maximum likelihood for \(K=2\) case

- Newton-Raphson algorithm

- The same thing in matrix notation

- Iteratively reweighted least squares

- Multiclass case with \(K\ge 3\)

- Goal of logistic regression

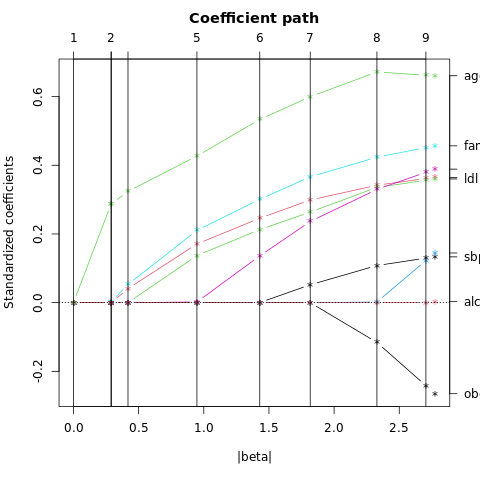

- \(\S\) 4.4.2. Example: South African Heart Disease

- \(\S\) 4.4.3. Quadratic Approximations and Inference

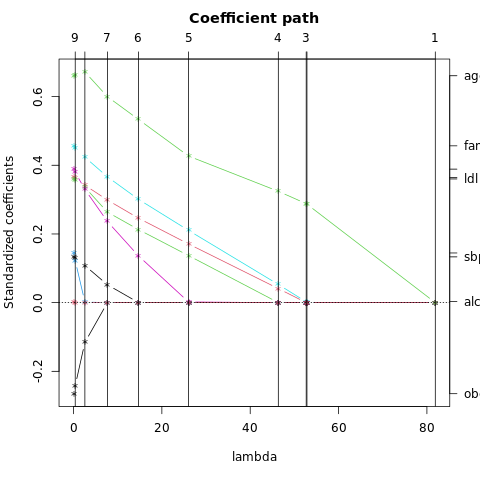

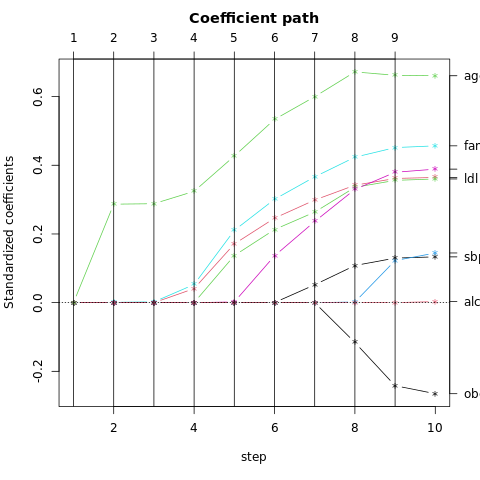

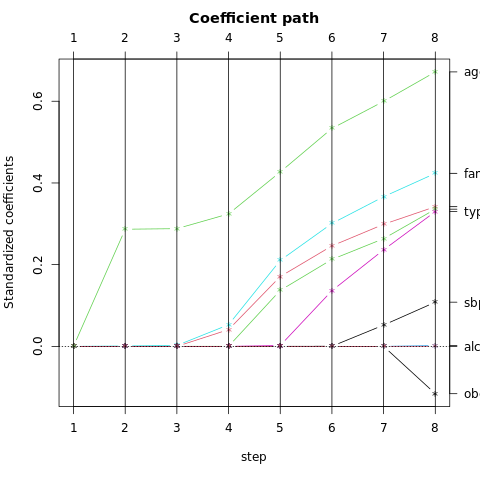

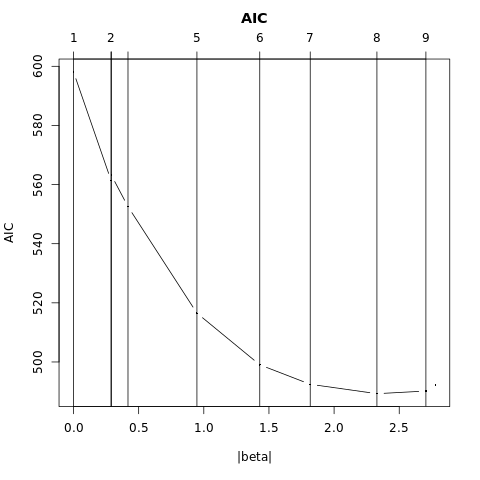

- \(\S\) 4.4.4 \(L_1\) Regularized Logistic Regression

- \(\S\) 4.4.5 Logistic Regression or LDA?

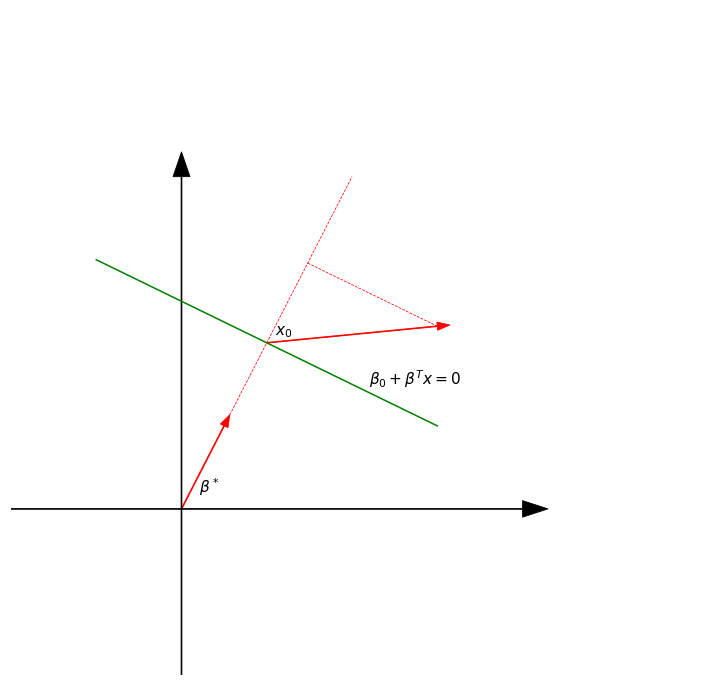

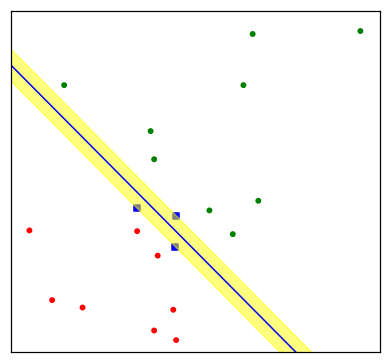

- \(\S\) 4.5. Separating Hyperplanes

- Exercises

- References

Chapter 4. Linear Methods for Classification

\(\S\) 4.1. Introduction

Since our predictor \(G(x)\) takes values in a discrete set \(\mathcal{G}\), we can always divide the input space into a collection of regions labeled according to the classification. We saw in Chapter 2 that the boundaries of these regions can be rough or smooth, depending on the prediction function. For an important class of procedures, these decision boundaries are linear; this is what we will mean by linear methodds for classification.

Linear regression

In Chapter 2 we fit linear regression models to the class indicator variable, and classify to the largest fit. Suppose there are \(K\) classes labeled \(1,\cdots,K\), and the fitted linear model for the \(k\)th indicator response variable is

\[\begin{equation} \hat{f}_k(x) = \hat\beta_{k0} + \hat\beta_k^Tx. \end{equation}\]

The decision boundary between class \(k\) and \(l\) is that set of points

\[\begin{equation} \left\lbrace x: \hat{f}_k(x) = \hat{f}_l(x) \right\rbrace = \left\lbrace x: (\hat\beta_{k0}-\hat\beta_{l0}) + (\hat\beta_k-\hat\beta_l)^Tx = 0 \right\rbrace, \end{equation}\]

which is an affine set or hyperplane. Since the same is true for any pair of classes, the input space is divided into regions of constant classification, with piecewise hyperplanar decision boundaries.

Discriminant function

The regression approach is a member of a class of methods that model discriminant functions \(\delta_k(x)\) for each class, and then classify \(x\) to the class with the largest value for its discriminant function. Methods that model the posterior probabilities \(\text{Pr}(G=k|X=x)\) are also in this class. Clearly, if either the \(\delta_k(x)\) or \(\text{Pr}(G=k|X=x)\) are linear in \(x\), then the decision boundaries will be linear.

Logit transformation

Actually, all we require is that some monotone transformation of \(\delta_k\) or \(\text{Pr}(G=k|X=x)\) be linear for the decision boundaries to be linear. For example, if there are two classes, a popular model for the posterior probabilities is

\[\begin{align} \text{Pr}(G=1|X=x) &= \frac{\exp(\beta_0+\beta^Tx)}{1+\exp(\beta_0+\beta^Tx)},\\ \text{Pr}(G=2|X=x) &= \frac{1}{1+\exp(\beta_0+\beta^Tx)},\\ \end{align}\]

where the monotone transformation is the logit transformation

\[\begin{equation} \log\frac{p}{1-p}, \end{equation}\]

and in fact we see that

\[\begin{equation} \log\frac{\text{Pr}(G=1|X=x)}{\text{Pr}(G=2|X=x)} = \beta_0 + \beta^Tx. \end{equation}\]

The decision boundary is the set of points for which the log-odds are zero, and this is a hyperplane defined by

\[\begin{equation} \left\lbrace x: \beta_0+\beta^Tx = 0 \right\rbrace. \end{equation}\]

We discuss two very popular but different methods that result in linear log-odds or logits: Linear discriminant analysis and linear logistic regression. Although they differ in their derivation, the essential difference between them is in the way the lineaer function is fit to the training data.

Separating hyperplanes

A more direct approach is to explicitly model the boundaries between the classes as linear. For a two-class problem, this amounts to modeling the decision boundary as a hyperplane; a normal vector and a cut-point.

We will look at two methods that explicitly look for “separating hyperplanes.”

- The well-known perceptron model of Rosenblatt (1958), with an algorithm that finds a separating hyperplane in the training data, if one exists.

- Vapnik (1996) finds an optimally separating hyperplane if one exists, else finds a hyperplane that minimizes some measure of overlap in the training data.

We treat separable cases here, and defer the nonseparable case to Chapter 12.

Scope for generalization

We can expand the input by including their squares \(X_1^2,X_2^2,\cdots\), and cross-products \(X_1X_2,\cdots\), thereby adding \(p(p+1)/2\) additional variables. Linear functions in the augmented space map down to quadratic decision boundaires. FIGURE 4.1 illustrates the idea.

This approach can be used with any basis transformation \(h(X): \mathbb{R}^p\mapsto\mathbb{R}^q\) with \(q > p\), and will be explored in later chapters.

\(\S\) 4.2. Linear Regression of an Indicator Matrix

Here each of the response categories are coded via an indicator variable. Thus if \(\mathcal{G}\) has \(K\) classes, there will be \(K\) such indicators \(Y_k\), \(k=1,\cdots,K\), with

\[\begin{equation} Y_k = 1 \text{ if } G = k \text{ else } 0. \end{equation}\]

These are collected together in a vector \(Y=(Y_1,\cdots,Y_k)\), and the \(N\) training instances of these form an \(N\times K\) indicator response matrix \(\mathbf{Y}\), which is a matrix of \(0\)’s and \(1\)’s, with each row having a single \(1\).

We fit a linear regression model to each of the columns of \(\mathbf{Y}\) simultaneously, and the fit is given by

\[\begin{equation} \underset{N\times K}{\hat{\mathbf{Y}}} = \mathbf{X}\left(\mathbf{X}^T\mathbf{X}\right)^{-1}\mathbf{X}^T\mathbf{Y} = \underset{N\times (p+1)}{\mathbf{X}}\underset{(p+1)\times K}{\hat{\mathbf{B}}} \end{equation}\]

Note that we have a coefficient vector for each response columns \(\mathbf{y}_k\), and hence a \((p+1)\times K\) coefficient matrix \(\hat{\mathbf{B}} = \left(\mathbf{X}^T\mathbf{X}\right)^{-1}\mathbf{X}^T\mathbf{Y}\). Here \(\mathbf{X}\) is the model matrix with \(p+1\) columns with a leading columns of \(1\)’s for the intercept.

A new observation with input \(x\) is classified as follows:

- Compute the fitted output \(\hat{f}(x)^T = (1, x^T)\hat{\mathbf{B}}\), a \(K\) vector.

- Identify the largest component and classify accordingly:

\[\begin{equation} \hat{G}(x) = \arg\max_{k\in\mathcal{G}} \hat{f}_k(x). \end{equation}\]

Rationale

One rather formal justification is to view the regression as an estimate of conditional expectation. For the random variable \(Y_k\),

\[\begin{equation} \text{E}(Y_k|X=x) = \text{Pr}(G=k|X=x), \end{equation}\]

so conditional expectation of each \(Y_k\) seems a sensible goal.

The real issue is: How good an approximation to conditional expectation is the rather rigid linear regression model? Alternatively, are the \(\hat{f}_k(x)\) reasonable estimates of the posterior probabilities \(\text{Pr}(G=k|X=x)\), and more importantly, does this matter?

It is quite straightforward to verify that, as long as the model has an intercept,

\[\begin{equation} \sum_{k\in\mathcal{G}}\hat{f}_k(x) = 1. \end{equation}\]

However it is possible that \(\hat{f}_k(x) < 0\) or \(\hat{f}_k(x) > 1\), and typically some are. This is a consequence of the rigid nature of linear regression, especially if we make predictions outside the hull of the training data. These violations in themselves do not guarantee that this approach will not work, and in fact on many problems it gives similar results to more standard linear methods for classification.

If we allow linear regression onto basis expansions \(h(X)\) of the inputs, this approach can lead to consistent estimates of the probabilities. As the size of the training set \(N\) grows bigger, we adaptively include more basis elements so that linear regression onto these basis functions approaches conditional expectation. We discuss such approaches in Chapter 5.

A more simplistic viewpoint

Denote \(t_k\) as the \(k\)th column of \(\mathbf{I}_K\), the \(K\times K\) identity matrix, then a more simplistic viewpoint is to construct targets \(t_k\) for each class. The response vector (\(i\)th row of \(\mathbf{Y}\))

\[\begin{equation} y_i = t_k \text{ if } g_i = k. \end{equation}\]

We might then fit the linear model by least squares: The criterion is a sum-of-squared Euclidean distances of the fitted vectors from their targets.

\[\begin{equation} \min_{\mathbf{B}} \sum_{i=1}^N \left\| y_i - \left[ (1,x_i^T)\mathbf{B} \right]^T \right\|^2. \end{equation}\]

Then a new observation is classified by computing its fitted vector \(\hat{f}(x)\) and classifying to the closest target:

\[\begin{equation} \hat{G}(x) = \arg\min_k \left\| \hat{f}(x)-t_k \right\|^2. \end{equation}\]

This is exactly the same as the previous linear regression approach. Below are the reasons:

The sum-of-squared-norm criterion is exactly the same with multiple response linear regression, just viewed slightly differently. The component decouple and can be rearranged as a separate linear model for each element because there is nothing in the model that binds the diferent response together.

The closest target classification rule is exactly the same as the maximum fitted component criterion.

Masked class with the regression approach

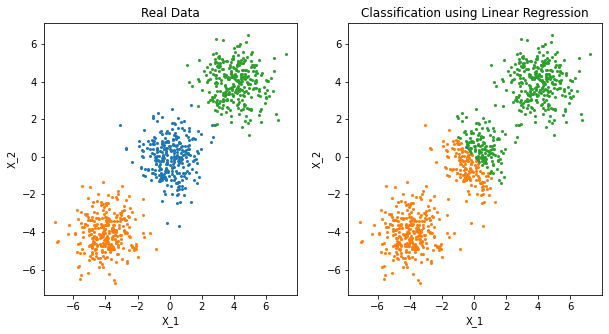

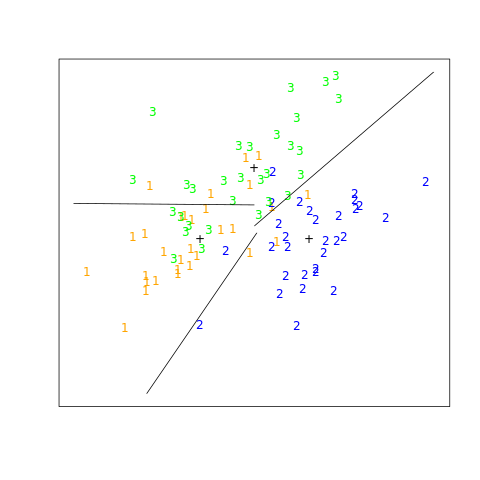

There is a serious problem with the regression approach when the number of class \(K\ge 3\), especially prevalent when \(K\) is large. Because of the rigid nature of the regression model, classes can be masked by others. FIGURE 4.2 illustrates an extreme situation when \(K=3\). The three classes are perfectly separated by linear decision boundaries, yet linear regression misses the middle class completely.

import scipy

import scipy.linalg

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

import cvxpy as cp

import math

np.set_printoptions(precision=4, suppress=True)

%load_ext rpy2.ipython"""FIGURE 4.2. (Left) The data come from three classes in R^2 and are easily

separated by linear decision boundaries. This plot shows the boundaires

found by linear regression of the indicator response variables. The middle

class is completely masked (never dominates).

Instead of drawing the decision boundary, showing the classified data is

enough to illustrate masking phenomenon."""

# Make the simulation data

size_cluster = 300

mat_cov = np.eye(2)

cluster1 = np.random.multivariate_normal([-4, -4], mat_cov, size_cluster)

cluster2 = np.random.multivariate_normal([0, 0], mat_cov, size_cluster)

cluster3 = np.random.multivariate_normal([4, 4], mat_cov, size_cluster)

target1, target2, target3 = np.eye(3)

print(target1, target2, target3, type(target1))

mat_x0 = np.concatenate((cluster1, cluster2, cluster3))

mat_x = np.hstack((np.ones((size_cluster*3, 1)), mat_x0))

mat_y = np.vstack((np.tile(target1, (size_cluster, 1)),

np.tile(target2, (size_cluster, 1)),

np.tile(target3, (size_cluster, 1))))

print(mat_x.shape)

print(mat_y.shape)

# Multiple linear regression

mat_beta = scipy.linalg.solve(mat_x.T @ mat_x, mat_x.T @ mat_y)

mat_y_hat = mat_x @ mat_beta

#sum(axis=1) sum the row

assert np.allclose(mat_y_hat.sum(axis=1), 1)

print(mat_y_hat)

#argmax(axis=1) Returns the indices of the maximum values along the row.

idx_classified_y = mat_y_hat.argmax(axis=1)

print(idx_classified_y, idx_classified_y.size)

classified_cluster1 = mat_x0[idx_classified_y == 0]

classified_cluster2 = mat_x0[idx_classified_y == 1]

classified_cluster3 = mat_x0[idx_classified_y == 2][1. 0. 0.] [0. 1. 0.] [0. 0. 1.] <class 'numpy.ndarray'>

(900, 3)

(900, 3)

[[ 0.79118865 0.33248045 -0.1236691 ]

[ 0.85014483 0.33261569 -0.18276052]

[ 0.78423187 0.33116915 -0.11540103]

...

[-0.21401706 0.3339582 0.88005886]

[-0.21901358 0.33362912 0.88538446]

[-0.06011645 0.33403974 0.72607671]]

[0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 0 0 0 2 0 0 2 2 2 2 2 0 2 0 2 0 2 2 0 0 0 0 2 0 2 2 2 2 2 2 0 2 2 2 0

2 0 0 2 0 2 0 0 0 2 0 0 0 2 2 0 2 2 0 0 2 2 2 0 0 0 0 0 2 2 0 0 0 0 2 0 0

2 1 0 2 0 0 2 0 0 2 0 2 0 2 2 2 2 0 0 0 0 2 2 0 2 0 0 2 0 0 2 0 2 2 2 0 0

0 0 2 2 2 2 2 0 2 2 2 0 2 0 0 2 0 2 0 2 2 2 2 0 2 0 0 0 0 2 2 0 2 2 0 0 0

2 2 2 0 2 0 2 2 0 0 0 2 2 0 0 2 0 2 2 2 2 2 2 0 0 2 0 0 2 0 2 0 0 2 0 0 0

2 0 0 2 2 2 0 0 0 0 0 2 0 2 0 2 0 2 0 0 2 2 2 2 2 2 0 0 2 2 2 0 0 0 0 0 2

2 0 2 2 0 0 0 2 2 2 2 2 2 2 2 2 2 0 0 0 0 2 0 0 2 2 2 2 0 0 2 0 0 2 2 2 0

2 0 0 2 0 2 0 0 2 2 2 0 0 2 0 2 0 2 0 0 0 2 0 2 0 0 0 0 0 0 2 2 2 0 2 0 0

2 0 0 2 2 0 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2

2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2

2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2

2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2

2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2

2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2

2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2

2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2

2 2 2 2 2 2 2 2 2 2 2 2] 900fig42 = plt.figure(0, figsize=(10, 5))

ax1 = fig42.add_subplot(1, 2, 1)

ax1.plot(cluster1[:,0], cluster1[:,1], 'o', color='C1', markersize=2)

ax1.plot(cluster2[:,0], cluster2[:,1], 'o', color='C0', markersize=2)

ax1.plot(cluster3[:,0], cluster3[:,1], 'o', color='C2', markersize=2)

ax1.set_xlabel('X_1')

ax1.set_ylabel('X_2')

ax1.set_title('Real Data')

ax2 = fig42.add_subplot(1, 2, 2)

ax2.plot(classified_cluster1[:,0], classified_cluster1[:,1], 'o', color='C1', markersize=2)

ax2.plot(classified_cluster2[:,0], classified_cluster2[:,1], 'o', color='C0', markersize=2)

ax2.plot(classified_cluster3[:,0], classified_cluster3[:,1], 'o', color='C2', markersize=2)

ax2.set_xlabel('X_1')

ax2.set_ylabel('X_2')

ax2.set_title('Classification using Linear Regression')

plt.show()

png

FIGURE 4.2. The data come from three classes in IR2 and are easily separated by linear decision boundaries. The right plot shows the boundaries found by linear regression of the indicator response variables. The middle class is completely masked (never dominates).

%%R

## generate data and reproduce figure 4.2

mu = c(0.25, 0.5, 0.75)

sigma = 0.005*matrix(c(1, 0,

0, 1), 2, 2)

print("sigma")

print(sigma)

library(MASS)

set.seed(1650)

N = 300

X1 = mvrnorm(n = N, c(mu[1], mu[1]), Sigma = sigma)

X2 = mvrnorm(n = N, c(mu[2], mu[2]), Sigma = sigma)

X3 = mvrnorm(n = N, c(mu[3], mu[3]), Sigma = sigma)

X = rbind(X1, X2, X3)

plot(X1[,1],X1[,2],col="orange", xlim = c(0,1),ylim = c(0,1), pch=19,

xlab = expression(X[1]), ylab = expression(X[2]))

points(X2[,1],X2[,2],col="blue", pch=19)

points(X3[,1],X3[,2],col="green", pch=19)[1] "sigma"

[,1] [,2]

[1,] 0.005 0.000

[2,] 0.000 0.005

png

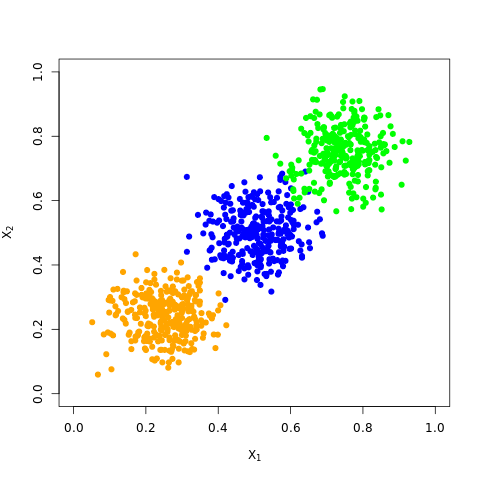

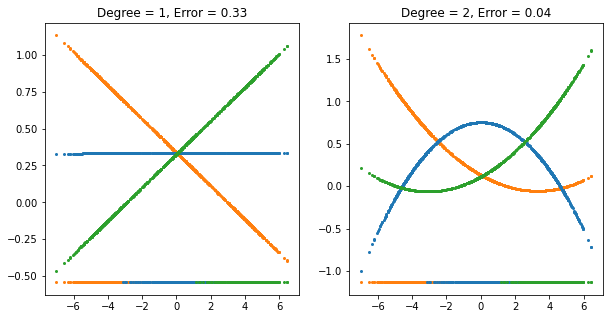

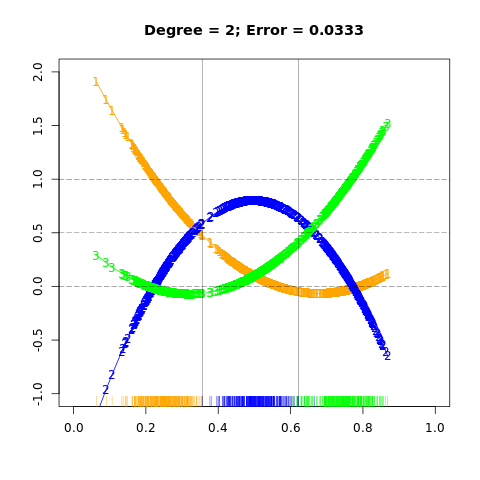

"""FIGURE 4.3. The effects of masking on linear regression in R for a three

class problem.

The rug plot at the base indicates the positions and class membership of

each observation. The three curves in each panel are the fitted regression

to the three-class indicator variables.

We see on the left that the middle class line is horizontal and its fitted

values are never dominant! Thus, observations from class 2 are classified

either as class 1 or 3."""

# Make the simulation data

size_cluster = 300

# np.random.normal() draw random samples from a normal (Gaussian) distribution with mean -4, 0, 4

cluster1 = np.random.normal(-4, size=(size_cluster,1))

cluster2 = np.random.normal(0, size=(size_cluster,1))

cluster3 = np.random.normal(4, size=(size_cluster,1))

target1, target2, target3 = np.eye(3)

print(target1, target2, target3, type(target1))

mat_x0 = np.concatenate((cluster1, cluster2, cluster3))

#mat_x is 900X2 matrix

mat_x = np.hstack((np.ones((size_cluster*3, 1)), mat_x0))

#mat_y is 900X3 matrix

mat_y = np.vstack((np.tile(target1, (size_cluster, 1)),

np.tile(target2, (size_cluster, 1)),

np.tile(target3, (size_cluster, 1))))

# Multiple linear regression with degree 1, mat_beta is 2X3 matrix

mat_beta = scipy.linalg.solve(mat_x.T @ mat_x, mat_x.T @ mat_y)

# mat_y_hat is 900X3 matrix

mat_y_hat = mat_x @ mat_beta

print("mat_y_hat")

print(mat_y_hat)

idx_classified_y = mat_y_hat.argmax(axis=1)[1. 0. 0.] [0. 1. 0.] [0. 0. 1.] <class 'numpy.ndarray'>

mat_y_hat

[[ 0.81458631 0.33179161 -0.14637792]

[ 0.82090514 0.33177137 -0.15267651]

[ 0.85306795 0.33166833 -0.18473629]

...

[-0.13503942 0.33483379 0.80020562]

[-0.08154107 0.33466241 0.74687866]

[-0.05041249 0.33456268 0.71584981]]fig43 = plt.figure(1, figsize=(10, 5))

ax1 = fig43.add_subplot(1, 2, 1)

ax1.plot(mat_x0, mat_y_hat[:, 0], 'o', color='C1', markersize=2)

ax1.plot(mat_x0, mat_y_hat[:, 1], 'o', color='C0', markersize=2)

ax1.plot(mat_x0, mat_y_hat[:, 2], 'o', color='C2', markersize=2)

y_floor, _ = ax1.get_ylim()

ax1.plot(cluster1, [y_floor]*size_cluster, 'o', color='C1', markersize=2)

ax1.plot(cluster2, [y_floor]*size_cluster, 'o', color='C0', markersize=2)

ax1.plot(cluster3, [y_floor]*size_cluster, 'o', color='C2', markersize=2)

ax1.set_title('Degree = 1, Error = 0.33')

# Multiple linear regression with degree 2

# mat_x2 is 900X3 matrix

mat_x2 = np.hstack((mat_x, mat_x0*mat_x0))

# mat_beta2 is 3X3 matrix

mat_beta2 = np.linalg.solve(mat_x2.T @ mat_x2, mat_x2.T @ mat_y)

# mat_y2_hat is 900X3 matrix

mat_y2_hat = mat_x2 @ mat_beta2

print("mat_y2_hat")

print(mat_y2_hat)

ax2 = fig43.add_subplot(1, 2, 2)

ax2.plot(mat_x0, mat_y2_hat[:, 0], 'o', color='C1', markersize=2)

ax2.plot(mat_x0, mat_y2_hat[:, 1], 'o', color='C0', markersize=2)

ax2.plot(mat_x0, mat_y2_hat[:, 2], 'o', color='C2', markersize=2)

y_floor, _ = ax2.get_ylim()

ax2.plot(cluster1, [y_floor]*size_cluster, 'o', color='C1', markersize=2)

ax2.plot(cluster2, [y_floor]*size_cluster, 'o', color='C0', markersize=2)

ax2.plot(cluster3, [y_floor]*size_cluster, 'o', color='C2', markersize=2)

ax2.set_title('Degree = 2, Error = 0.04')

plt.show()mat_y2_hat

[[ 0.91692888 0.12167828 -0.03860716]

[ 0.93141869 0.1048827 -0.03630139]

[ 1.00683423 0.01598011 -0.02281434]

...

[-0.04129243 0.14236755 0.89892488]

[-0.05170661 0.27341109 0.77829552]

[-0.05422768 0.34239542 0.71183226]]

png

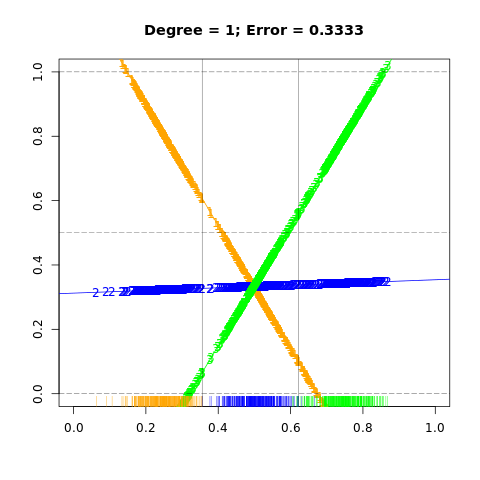

FIGURE 4.3. The effects of masking on linear regression in IR for a three-class problem. The rug plot at the base indicates the positions and class membership of each observation. The three curves in each panel are the fitted regressions to the three-class indicator variables; for example, for the blue class, \(y_{blue}\) is 1 for the blue observations, and 0 for the green and orange. The fits are linear and quadratic polynomials. Above each plot is the training error rate. The Bayes error rate is 0.025 for this problem, as is the LDA error rate.

%%R

## generate data and reproduce figure 4.3

mu = c(0.25, 0.5, 0.75)

sigma = 0.005*matrix(c(1, 0,

0, 1), 2, 2)

library(MASS)

set.seed(1650)

N = 300

X1 = mvrnorm(n = N, c(mu[1], mu[1]), Sigma = sigma)

X2 = mvrnorm(n = N, c(mu[2], mu[2]), Sigma = sigma)

X3 = mvrnorm(n = N, c(mu[3], mu[3]), Sigma = sigma)

# X is 900X2 matrix

X = rbind(X1, X2, X3)%%R

# X.proj is projection to [0.5,0.5]

X.proj = rowMeans(X)

## fit as in figure 4.3

## consider orange

Y1 = c(rep(1, N), rep(0, N*2))

## blue

Y2 = c(rep(0, N), rep(1, N), rep(0, N))

## green

Y3 = c(rep(0, N), rep(0, N), rep(1, N))

## regression

m1 = lm(Y1~X.proj)

print(m1)

print(coef(m1))

pred1 = as.numeric(fitted(m1)[order(X.proj)])

m2 = lm(Y2~X.proj)

print(m2)

print(coef(m2))

pred2 = as.numeric(fitted(m2)[order(X.proj)])

m3 = lm(Y3~X.proj)

print(m3)

print(coef(m3))

pred3 = as.numeric(fitted(m3)[order(X.proj)])

c1 = which(pred1 <= pred2)[1]

c2 = min(which(pred3 > pred2))

# class 1: 1 ~ c1

# class 2: c1+1 ~ c2

# class 3: c2+1 ~ end

# actually, c1 = c2

err1 = (abs(c2 - 2*N) + abs(c1 - N))/(3*N)

## reproduce figure 4.3 left

plot(0, 0, type = "n",

xlim = c(0, 1), ylim = c(0,1), xlab = "", ylab = "",

main = paste0("Degree = 1; Error = ", round(err1, digits = 4)))

abline(coef(m1), col = "orange")

abline(coef(m2), col = "blue")

abline(coef(m3), col = "green")

points(X.proj, fitted(m1), pch="1", col="orange")

points(X.proj, fitted(m2), pch = "2", col = "blue")

points(X.proj, fitted(m3), pch = "3", col = "green")

rug(X.proj[1:N], col = "orange")

rug(X.proj[(N+1):(2*N)], col = "blue")

rug(X.proj[(2*N+1):(3*N)], col = "green")

abline(h=c(0.0, 0.5, 1.0), lty=5, lwd = 0.4)

abline(v=c(sort(X.proj)[N], sort(X.proj)[N*2]), lwd = 0.4)

Call:

lm(formula = Y1 ~ X.proj)

Coefficients:

(Intercept) X.proj

1.283 -1.901

(Intercept) X.proj

1.282927 -1.901234

Call:

lm(formula = Y2 ~ X.proj)

Coefficients:

(Intercept) X.proj

0.31258 0.04155

(Intercept) X.proj

0.31257989 0.04155158

Call:

lm(formula = Y3 ~ X.proj)

Coefficients:

(Intercept) X.proj

-0.5955 1.8597

(Intercept) X.proj

-0.5955073 1.8596820

png

%%R

## polynomial regression

pm1 = lm(Y1~X.proj+I(X.proj^2))

pm2 = lm(Y2~X.proj+I(X.proj^2))

pm3 = lm(Y3~X.proj+I(X.proj^2))

## error rate for figure 4.3 right

pred21 = as.numeric(fitted(pm1)[order(X.proj)])

pred22 = as.numeric(fitted(pm2)[order(X.proj)])

pred23 = as.numeric(fitted(pm3)[order(X.proj)])

c1 = which(pred21 <= pred22)[1] - 1

c2 = max(which(pred23 <= pred22))

# class 1: 1 ~ c1

# class 2: c1+1 ~ c2

# class 3: c2+1 ~ end

err2 = (abs(c2 - 2*N) + abs(c1 - N))/(3*N)

## reproduce figure 4.3 right

plot(0, 0, type = "n",

xlim = c(0, 1), ylim = c(-1,2), xlab = "", ylab = "",

main = paste0("Degree = 2; Error = ", round(err2, digits = 4)))

lines(sort(X.proj), fitted(pm1)[order(X.proj)], col="orange", type = "o", pch = "1")

lines(sort(X.proj), fitted(pm2)[order(X.proj)], col="blue", type = "o", pch = "2")

lines(sort(X.proj), fitted(pm3)[order(X.proj)], col="green", type = "o", pch = "3")

abline(h=c(0.0, 0.5, 1.0), lty=5, lwd = 0.4)

## add rug

rug(X.proj[1:N], col = "orange")

rug(X.proj[(N+1):(2*N)], col = "blue")

rug(X.proj[(2*N+1):(3*N)], col = "green")

abline(v=c(sort(X.proj)[N], sort(X.proj)[N*2]), lwd = 0.4)

png

For this simple example a quadratic rather than linear fit would solve the problem. However, if there were 4 classes, a quadratic would not come down fast enough, and a cubic would be needed as well. A loose but general rule is that if \(K\ge 3\) classes are lined up, polynomial terms up to degree \(K-1\) might be needed to resolve them.

Note also that these are polynomials along the derived direction passing through the centroids, which can have orbitrary orientation. So in \(p\)-dimensional input space, one would need general polynomial terms and cross-products of total degree \(K-1\), \(O(p^{K-1})\) terms in all, to resolve such worst-case scenarios.

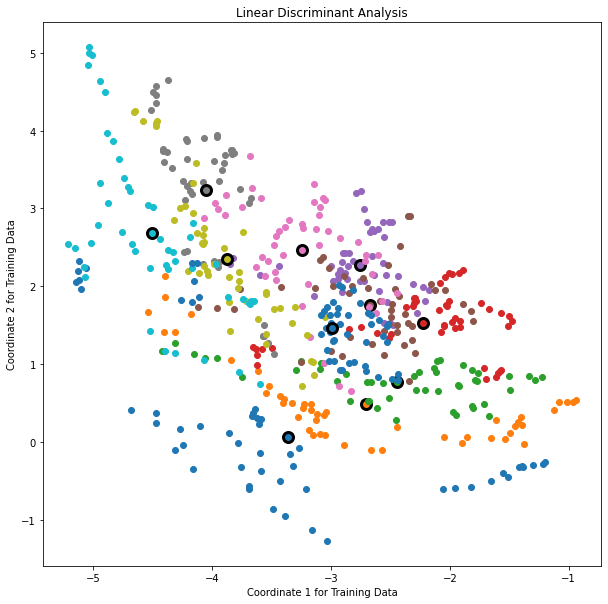

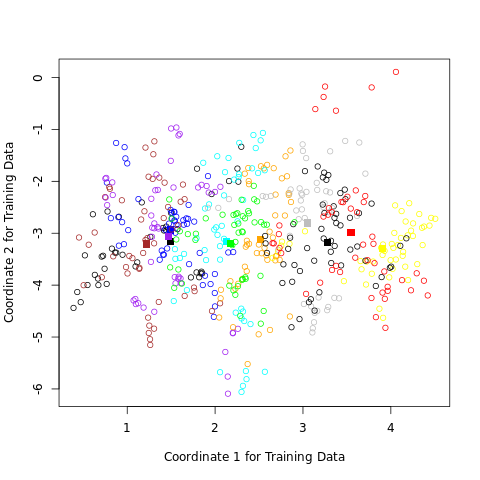

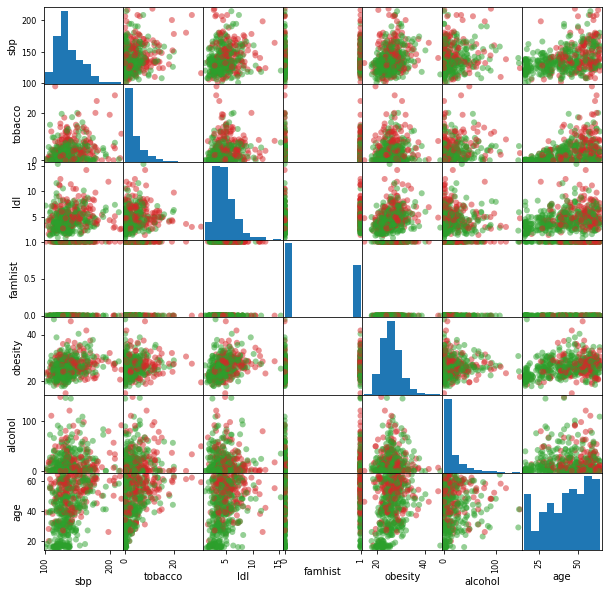

The example is extreme, but for large \(K\) and small \(p\) such maskings natrually occur. As a more realistic illustration, FIGURE 4.4 is a projection of the training data for a vowel recognition problem onto an informative two-dimensional subspace.

"""FIGURE 4.4. A two-dimensional plot of the vowel training data.

There are K=11 classes in p=10 dimensions, and this is the best view in

terms of a LDA model (Section 4.3.3). The heavy circles are the projected

mean vectors for each class. The class overlap is considerable.

This is a difficult classficiation problem, and the best methods achieve

around 40% errors on the test data. The main point here is summarized in

Table 4.1; masking has hurt in this case. Here simply the first 2 coordinates x.1 and x.2 are used."""

vowel_df = pd.read_csv('../../data/vowel/vowel.train', index_col=0)

df_y = vowel_df['y']

df_x2d = vowel_df[['x.1', 'x.2']]

vowel_df| y | x.1 | x.2 | x.3 | x.4 | x.5 | x.6 | x.7 | x.8 | x.9 | x.10 | |

|---|---|---|---|---|---|---|---|---|---|---|---|

| row.names | |||||||||||

| 1 | 1 | -3.639 | 0.418 | -0.670 | 1.779 | -0.168 | 1.627 | -0.388 | 0.529 | -0.874 | -0.814 |

| 2 | 2 | -3.327 | 0.496 | -0.694 | 1.365 | -0.265 | 1.933 | -0.363 | 0.510 | -0.621 | -0.488 |

| 3 | 3 | -2.120 | 0.894 | -1.576 | 0.147 | -0.707 | 1.559 | -0.579 | 0.676 | -0.809 | -0.049 |

| 4 | 4 | -2.287 | 1.809 | -1.498 | 1.012 | -1.053 | 1.060 | -0.567 | 0.235 | -0.091 | -0.795 |

| 5 | 5 | -2.598 | 1.938 | -0.846 | 1.062 | -1.633 | 0.764 | 0.394 | -0.150 | 0.277 | -0.396 |

| … | … | … | … | … | … | … | … | … | … | … | … |

| 524 | 7 | -4.065 | 2.876 | -0.856 | -0.221 | -0.533 | 0.232 | 0.855 | 0.633 | -1.452 | 0.272 |

| 525 | 8 | -4.513 | 4.265 | -1.477 | -1.090 | 0.215 | 0.829 | 0.342 | 0.693 | -0.601 | -0.056 |

| 526 | 9 | -4.651 | 4.246 | -0.823 | -0.831 | 0.666 | 0.546 | -0.300 | 0.094 | -1.343 | 0.185 |

| 527 | 10 | -5.034 | 4.993 | -1.633 | -0.285 | 0.398 | 0.181 | -0.211 | -0.508 | -0.283 | 0.304 |

| 528 | 11 | -4.261 | 1.827 | -0.482 | -0.194 | 0.731 | 0.354 | -0.478 | 0.050 | -0.112 | 0.321 |

528 rows × 11 columns

df_x2d.describe()| x.1 | x.2 | |

|---|---|---|

| count | 528.000000 | 528.000000 |

| mean | -3.166695 | 1.735343 |

| std | 0.957965 | 1.160970 |

| min | -5.211000 | -1.274000 |

| 25% | -3.923000 | 0.916750 |

| 50% | -3.097000 | 1.733000 |

| 75% | -2.511750 | 2.403750 |

| max | -0.941000 | 5.074000 |

grouped = df_x2d.groupby(df_y)

fig44 = plt.figure(2, figsize=(10, 10))

ax = fig44.add_subplot(1, 1, 1)

for y, x in grouped:

x_mean = x.mean()

print(y)

print(x_mean)

color = next(ax._get_lines.prop_cycler)['color']

print(color)

ax.plot(x['x.1'], x['x.2'], 'o', color=color)

ax.plot(x_mean[0], x_mean[1], 'o', color=color, markersize=10,

markeredgecolor='black', markeredgewidth=3)

ax.set_xlabel('Coordinate 1 for Training Data')

ax.set_ylabel('Coordinate 2 for Training Data')

ax.set_title('Linear Discriminant Analysis')

plt.show()1

x.1 -3.359563

x.2 0.062938

dtype: float64

#1f77b4

2

x.1 -2.708875

x.2 0.490604

dtype: float64

#ff7f0e

3

x.1 -2.440250

x.2 0.774875

dtype: float64

#2ca02c

4

x.1 -2.226604

x.2 1.525833

dtype: float64

#d62728

5

x.1 -2.756312

x.2 2.275958

dtype: float64

#9467bd

6

x.1 -2.673542

x.2 1.758771

dtype: float64

#8c564b

7

x.1 -3.243729

x.2 2.468354

dtype: float64

#e377c2

8

x.1 -4.051333

x.2 3.233979

dtype: float64

#7f7f7f

9

x.1 -3.876896

x.2 2.345021

dtype: float64

#bcbd22

10

x.1 -4.506146

x.2 2.688562

dtype: float64

#17becf

11

x.1 -2.990396

x.2 1.463875

dtype: float64

#1f77b4

png

FIGURE 4.4. A two-dimensional plot of the vowel training data. There are eleven classes with \(X \in \mathbb R^{10}\), and this is the best view in terms of a LDA model (Section 4.3.3). The heavy circles are the projected mean vectors for each class. The class overlap is considerable.

%%R

load_vowel_data <- function(doScaling=FALSE,doRandomization=FALSE){

# Get the training data:

#

XTrain = read.csv("../../data/vowel/vowel.train", header=TRUE)

# Delete the column named "row.names":

#

XTrain$row.names = NULL

# Extract the true classification for each datum

#

labelsTrain = XTrain[,1]

# Delete the column of classification labels:

#

XTrain$y = NULL

#

# We try to scale ALL features so that they have mean zero and a

# standard deviation of one.

#

if( doScaling ){

XTrain = scale(XTrain, TRUE, TRUE)

means = attr(XTrain,"scaled:center")

stds = attr(XTrain,"scaled:scale")

XTrain = data.frame(XTrain)

}

#

# Sometime data is processed and stored on disk in a certain order. When doing cross validation

# on such data sets we don't want to bias our results if we grab the first or the last samples.

# Thus we randomize the order of the rows in the Training data frame to make sure that each

# cross validation training/testing set is as random as possible.

#

if( doRandomization ){

nSamples = dim(XTrain)[1]

inds = sample( 1:nSamples, nSamples )

XTrain = XTrain[inds,]

labelsTrain = labelsTrain[inds]

}

# Get the testing data:

#

XTest = read.csv("../../data/vowel/vowel.test", header=TRUE)

# Delete the column named "row.names":

#

XTest$row.names = NULL

# Extract the true classification for each datum

#

labelsTest = XTest[,1]

# Delete the column of classification labels:

#

XTest$y = NULL

# Scale the testing data using the same transformation as was applied to the training data:

# apply "1" for rows and "2" for columns

if( doScaling ){

XTest = t( apply( XTest, 1, '-', means ) )

XTest = t( apply( XTest, 1, '/', stds ) )

}

return( list( XTrain, labelsTrain, XTest, labelsTest ) )

}%%R

linear_regression_indicator_matrix = function(XTrain,yTrain){

# Inputs:

# XTrain = training matrix (without the common column of ones needed to represent the constant offset)

# yTrain = training labels matrix of true classifications with indices 1 - K (where K is the number of classes)

K = max(yTrain) # the number of classes

N = dim( XTrain )[1] # the number of samples

# form the indicator responce matrix Y

# mat.or.vec(nr, nc) creates an nr by nc zero matrix if nr is greater than 1, and a zero vector of length nr if nc equals 1.

Y = mat.or.vec( N, K )

for( ii in 1:N ){

jj = yTrain[ii]

Y[ii,jj] = 1.

}

#Y is a 528X11 matrix with 1 in the diagonal and 0 elsewhere

Y = as.matrix(Y)

# for the feature vector matrix XTrain ( append a leading column of ones ) and compute Yhat:

#

ones = as.matrix( mat.or.vec( N, 1 ) + 1.0 )

Xm = as.matrix( cbind( ones, XTrain ) )

# Bhat is a 11X11 matrix

Bhat = solve( t(Xm) %*% Xm, t(Xm) %*% Y ) # this is used for predictions on out of sample data

Yhat = Xm %*% Bhat # the discriminant predictions on the training data

#which.max() Determines the location, i.e., index of the first maximum of a numeric (or logical) vector.

#apply("1") for the row

# gHat is a 528 vector

gHat = apply( Yhat, 1, 'which.max' ) # classify this data

return( list(Bhat,Yhat,gHat) )

}%%R

library(MASS) # this has functions for lda and qda

out = load_vowel_data(doScaling=FALSE, doRandomization=FALSE)

XTrain = out[[1]]

labelsTrain = out[[2]]

XTest = out[[3]]

labelsTest = out[[4]]

lda( XTrain, labelsTrain )

predict( lda( XTrain, labelsTrain ), XTrain )$class [1] 1 1 3 4 5 5 5 8 9 10 11 1 1 3 4 5 5 5 8 9 10 11 1 1 3

[26] 4 5 5 7 8 9 10 11 1 1 3 4 5 5 7 8 9 10 11 1 2 3 4 6 5

[51] 7 8 9 10 11 1 2 3 4 11 5 5 8 9 10 11 1 2 3 4 5 6 7 8 8

[76] 10 11 1 2 3 4 5 6 7 8 8 10 6 1 2 3 4 5 6 7 8 8 10 6 1

[101] 2 3 4 5 6 7 8 8 10 11 1 2 3 4 5 6 7 8 8 10 11 1 2 3 4

[126] 5 6 7 8 10 10 11 3 2 2 6 7 6 7 8 7 10 9 3 3 2 6 7 7 7

[151] 8 7 10 11 2 3 3 6 7 6 7 8 7 10 11 2 2 3 6 7 6 7 8 9 10

[176] 11 2 2 2 6 7 6 7 8 10 10 11 2 2 2 6 7 6 7 8 9 10 11 2 2

[201] 3 4 7 6 7 7 9 9 11 2 11 3 4 7 5 7 7 9 9 11 2 2 3 4 5

[226] 7 11 7 9 9 11 2 2 3 4 5 7 11 7 9 10 11 1 2 3 4 5 11 11 7

[251] 9 9 11 1 2 3 4 5 11 6 7 9 10 11 1 2 3 4 5 6 7 8 10 10 11

[276] 1 2 3 4 5 6 7 8 10 10 11 1 2 3 4 5 6 7 8 10 10 11 1 2 3

[301] 4 5 6 7 8 10 10 11 1 2 3 4 5 2 7 8 9 10 11 1 3 3 4 5 2

[326] 7 8 10 10 3 1 1 3 4 5 6 7 8 10 10 11 1 1 3 4 5 6 5 8 10

[351] 10 11 1 1 3 4 5 6 7 8 9 10 11 1 1 3 4 5 11 7 8 10 10 11 1

[376] 1 3 4 5 11 5 8 10 10 11 1 1 3 4 5 11 5 8 9 10 11 1 2 3 4

[401] 5 5 7 8 9 9 3 1 2 3 4 5 5 7 8 9 10 4 1 2 3 4 5 5 7

[426] 8 9 9 4 1 2 3 4 5 5 7 10 9 9 4 1 2 3 4 5 6 5 10 9 9

[451] 4 1 2 3 4 5 4 5 10 9 9 4 10 3 3 11 6 6 7 8 9 8 11 10 3

[476] 3 11 5 6 7 9 9 8 11 10 3 3 11 6 6 9 10 9 8 11 10 3 3 11 6

[501] 11 9 10 9 8 11 10 3 11 11 6 11 9 10 9 8 11 9 3 11 11 6 11 7 10

[526] 9 8 11

Levels: 1 2 3 4 5 6 7 8 9 10 11%%R

library(nnet)

fm = data.frame( cbind(XTrain,labelsTrain) )

#nnet::multinom: Fit Multinomial Log-linear Models

m = multinom( labelsTrain ~ x.1 + x.2 + x.3 + x.4 + x.5 + x.6 + x.7 + x.8 + x.9 + x.10, data=fm )

summary(m)# weights: 132 (110 variable)

initial value 1266.088704

iter 10 value 800.991170

iter 20 value 609.647137

iter 30 value 467.787809

iter 40 value 378.168922

iter 50 value 353.235470

iter 60 value 346.430211

iter 70 value 341.952991

iter 80 value 339.249147

iter 90 value 338.593226

iter 100 value 338.518492

final value 338.518492

stopped after 100 iterations

Call:

multinom(formula = labelsTrain ~ x.1 + x.2 + x.3 + x.4 + x.5 +

x.6 + x.7 + x.8 + x.9 + x.10, data = fm)

Coefficients:

(Intercept) x.1 x.2 x.3 x.4 x.5 x.6

2 11.859912 5.015869 9.14065 -0.54668237 -5.842854 5.249095 4.5380736

3 22.991130 8.754650 10.51908 -8.09716943 -6.433698 2.844026 -3.0990773

4 21.211055 9.352021 14.27583 -11.44350228 -9.398762 -5.024023 -9.9120414

5 10.661226 7.994979 18.69133 -3.90686991 -10.227598 -6.879093 -2.3500276

6 17.371541 8.472878 16.80821 -3.55182322 -9.189781 -5.125459 -2.2086056

7 -4.496469 4.108638 19.49488 -2.84114847 -10.997593 -5.176146 -0.2774606

8 -39.710811 -2.641330 21.27174 -3.35135948 -10.611230 -1.537209 5.0932251

9 -25.061995 0.313354 21.23335 -0.04657761 -8.623545 2.898791 10.0112280

10 -56.893368 -6.189874 21.79140 0.53235180 -5.991305 10.081613 14.1554875

11 12.074591 6.162025 15.21914 -2.13476689 -9.304403 -2.280308 1.4693565

x.7 x.8 x.9 x.10

2 -6.9230019 -1.5923565 -0.1703113 3.852009

3 -11.7597260 -7.9008092 -0.2394799 2.098955

4 -8.8182541 -6.6685842 0.8984416 3.187334

5 -4.1403291 -5.6554346 2.1035335 4.041918

6 -4.4687362 -7.2742827 0.9639560 3.861004

7 -2.3347884 0.3774047 5.5473928 5.496751

8 0.2155082 9.7082600 12.3540217 10.138371

9 3.5998811 6.8699809 11.8096580 10.206290

10 9.1879114 8.2389248 16.1435153 11.816428

11 -6.8252204 -4.9959056 -0.5257904 2.252969

Std. Errors:

(Intercept) x.1 x.2 x.3 x.4 x.5 x.6 x.7

2 3.791808 1.580918 2.419225 1.220975 1.500400 1.561514 1.455001 1.748906

3 4.614425 1.841941 2.666256 2.012425 1.600956 2.175967 2.084401 2.360013

4 4.580466 1.799125 2.878256 2.158141 1.790547 2.251240 2.606130 2.143600

5 4.761204 1.813573 2.915254 1.669411 1.780769 2.085541 2.321362 2.083458

6 4.461622 1.772211 2.867150 1.576007 1.713658 2.056081 2.128165 2.016414

7 5.243927 1.843223 2.931897 1.610943 1.726087 2.065063 2.315576 2.087362

8 7.509088 2.155127 3.082591 1.771398 1.816429 2.384532 2.631285 2.315004

9 6.425780 1.956012 3.020526 1.460973 1.728442 2.058597 2.416913 2.190779

10 9.849426 2.554512 3.096157 1.513431 1.819314 2.332869 2.542162 2.536305

11 4.355988 1.753685 2.850742 1.439323 1.710062 1.925247 1.987077 1.984446

x.8 x.9 x.10

2 1.562736 1.046892 1.512046

3 2.191037 1.466805 1.646052

4 2.371566 1.507766 1.672210

5 2.360183 1.569246 1.705087

6 2.273602 1.392899 1.600117

7 2.520142 1.750712 1.713267

8 2.820331 2.284959 2.050160

9 2.648977 2.217472 1.950013

10 2.969891 2.488993 2.097796

11 2.148410 1.320647 1.552863

Residual Deviance: 677.037

AIC: 897.037 %%R

library(MASS) # this has functions for lda and qda

out = load_vowel_data(doScaling=FALSE, doRandomization=FALSE)

XTrain = out[[1]]

labelsTrain = out[[2]]

XTest = out[[3]]

labelsTest = out[[4]]

# TRAIN A LINEAR REGRESSION BASED MODEL:

#

out = linear_regression_indicator_matrix(XTrain,labelsTrain)

Bhat = out[[1]]

Yhat = out[[2]]

tpLabels = out[[3]]

numCC = sum( (tpLabels - labelsTrain) == 0 ) #total correct predictions

numICC = length(tpLabels)-numCC #total incorrect predictions

eRateTrain = numICC / length(tpLabels) # error rate

# predict on the testing data with this classifier:

#

N = length(labelsTest)

ones = as.matrix( mat.or.vec( N, 1 ) + 1.0 )

Xm = as.matrix( cbind( ones, XTest ) )

tpLabels = apply( Xm %*% Bhat, 1, 'which.max' )

numCC = sum( (tpLabels - labelsTest) == 0 )

numICC = length(tpLabels)-numCC

eRateTest = numICC / length(tpLabels)

print(sprintf("%40s: %10.6f; %10.6f","Linear Regression",eRateTrain,eRateTest))

# TRAIN A LDA MODEL:

# MASS::lda

ldam = lda( XTrain, labelsTrain )

# get this models predictions on the training data

#

predTrain = predict( ldam, XTrain )

tpLabels = as.double( predTrain$class )

numCC = sum( (tpLabels - labelsTrain) == 0 )

numICC = length(tpLabels)-numCC

eRateTrain = numICC / length(tpLabels)

# get this models predictions on the testing data

#

predTest = predict( ldam, XTest )

tpLabels = as.double( predTest$class )

numCC = sum( (tpLabels - labelsTest) == 0 )

numICC = length(tpLabels)-numCC

eRateTest = numICC / length(tpLabels)

print(sprintf("%40s: %10.6f; %10.6f","Linear Discriminant Analysis (LDA)",eRateTrain,eRateTest))

# TRAIN A QDA MODEL:

qdam = qda( XTrain, labelsTrain )

# get this models predictions on the training data

#

predTrain = predict( qdam, XTrain )

tpLabels = as.double( predTrain$class )

numCC = sum( (tpLabels - labelsTrain) == 0 )

numICC = length(tpLabels)-numCC

eRateTrain = numICC / length(tpLabels)

# get this models predictions on the testing data

#

predTest = predict( qdam, XTest )

tpLabels = as.double( predTest$class )

numCC = sum( (tpLabels - labelsTest) == 0 )

numICC = length(tpLabels)-numCC

eRateTest = numICC / length(tpLabels)

print(sprintf("%40s: %10.6f; %10.6f","Quadratic Discriminant Analysis (QDA)",eRateTrain,eRateTest))

library(nnet)

fm = data.frame( cbind(XTrain,labelsTrain) )

#nnet::multinom: Fit Multinomial Log-linear Models

m = multinom( labelsTrain ~ x.1 + x.2 + x.3 + x.4 + x.5 + x.6 + x.7 + x.8 + x.9 + x.10, data=fm )

yhat_train = predict( m, newdata=XTrain, "class" )

yhat_test = predict( m, newdata=XTest, "class" )

numCC = sum( (as.integer(yhat_train) - labelsTrain) == 0 )

numICC = length(labelsTrain)-numCC

eRateTrain = numICC / length(labelsTrain)

numCC = sum( (as.integer(yhat_test) - labelsTest) == 0 )

numICC = length(labelsTest)-numCC

eRateTest = numICC / length(labelsTest)

print(sprintf("%40s: %10.6f; %10.6f","Logistic Regression",eRateTrain,eRateTest))[1] " Linear Regression: 0.477273; 0.666667"

[1] " Linear Discriminant Analysis (LDA): 0.316288; 0.556277"

[1] " Quadratic Discriminant Analysis (QDA): 0.011364; 0.528139"

# weights: 132 (110 variable)

initial value 1266.088704

iter 10 value 800.991170

iter 20 value 609.647137

iter 30 value 467.787809

iter 40 value 378.168922

iter 50 value 353.235470

iter 60 value 346.430211

iter 70 value 341.952991

iter 80 value 339.249147

iter 90 value 338.593226

iter 100 value 338.518492

final value 338.518492

stopped after 100 iterations

[1] " Logistic Regression: 0.221591; 0.512987"TABLE 4.1. Training and test error rates using a variety of linear techniques on the vowel data. There are eleven classes in ten dimensions, of which three account for 90% of the variance (via a principal components analysis). We see that linear regression is hurt by masking, increasing the test and training error by over 10%.

\(\S\) 4.3. Linear Discriminant Analysis

\(\S\) 2.4. Decision theory for classification tells us that we need to know the class posteriors \(\text{Pr}(G|X)\) for optimal classification. Suppose

- \(f_k(x)\) is the class-conditional density of \(X\) in class \(G=k\),

- \(\pi_k\) is the prior probability of class \(k\), with \(\sum\pi_k=1\).

A simple application of Bayes theorem gives us

\[\begin{equation} \text{Pr}(G=k|X=x) = \frac{f_k(x)\pi_k}{\sum_{l=1}^K f_l(x)\pi_l}. \end{equation}\]

We see that in terms of ability to classify, it is enough to have the \(f_k(x)\).

Many techniques are based on models for the class densities:

- linear and quadratic discriminant analysis use Gaussian densities;

- more flexible mixtures of Gaussian allow for nonlinear decision boundaires (\(\S\) 6.8);

- general nonparametric density estimates for each class density allow the most flexibility (\(\S\) 6.6.2);

- Naive Bayes models are a variant of the previous case, and assume that the inputs are conditionally independent in each class; i.e., each of the class densities are products of marginal densities (\(\S\) 6.6.3).

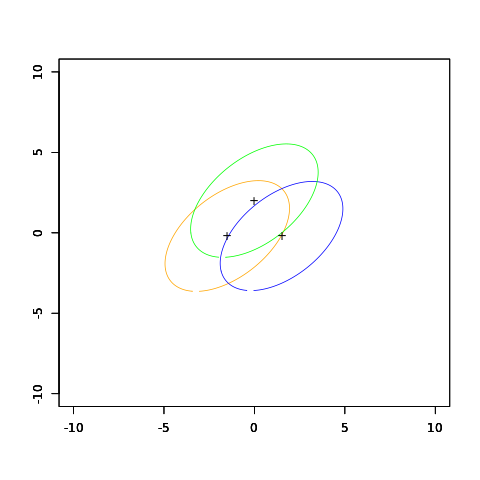

LDA from multivariate Gaussian

Suppose that we model each class density as multivariate Gaussian

\[\begin{equation} f_k(x) = \frac{1}{(2\pi)^{p/2}|\Sigma_k|^{1/2}}\exp\left\lbrace -\frac{1}{2}(x-\mu_k)^T\Sigma_k^{-1}(x-\mu_k) \right\rbrace \end{equation}\]

Linear discriminant analysis (LDA) arises in the special case when we assume that the classes have a common covariance matrix \(\Sigma_k=\Sigma,\forall k\).

In comparing two classes \(k\) and \(l\), it is sufficient to look at the log-ratio, and we see that as an equation linear in \(x\), \[ \begin{align} \log\frac{\text{Pr}(G=k|X=x)}{\text{Pr}(G=l|X=x)} &= \log\frac{f_k(x)}{f_l(x)} + \log\frac{\pi_k}{\pi_l} \\ &= \log\frac{\pi_k}{\pi_l} - \frac{1}{2}(\mu_k+\mu_l)^T\Sigma^{-1}(\mu_k-\mu_l)+x^T\Sigma^{-1}(\mu_k-\mu_l) \\ &= \log\frac{\pi_k}{\pi_l} - \frac{1}{2}\mu_k^T\Sigma^{-1}\mu_k + \frac{1}{2}\mu_l^T\Sigma^{-1}\mu_l + x^T\Sigma^{-1}(\mu_k-\mu_l) \\ &= \delta_k(x) - \delta_l(x), \end{align} \] where \(\delta_k\) is the linear discriminant function

\[\begin{equation} \delta_k(x) = -\frac{p}{2}\log(2\pi) -\frac{1}{2}\log(|\Sigma|) + x^T\Sigma^{-1}\mu_k - \frac{1}{2}\mu_k^T\Sigma^{-1}\mu_k + \log\pi_k \end{equation}\]

\[\begin{equation} \delta_l(x) = -\frac{p}{2}\log(2\pi) -\frac{1}{2}\log(|\Sigma|) + x^T\Sigma^{-1}\mu_l - \frac{1}{2}\mu_l^T\Sigma^{-1}\mu_l + \log\pi_l \end{equation}\]

This linear log-odds function implies that the decision boundary between classes \(k\) and \(l\)

\[\begin{equation} \left\lbrace x: \delta_k(x) - \delta_l(x) = 0 \right\rbrace \end{equation}\]

is linear in \(x\); in \(p\) dimensions a hyperplane. Also the linear discriminant functions are equivalent description of the decision rule, with

\[\begin{equation} G(x) = \arg\max_k \delta_k(x). \end{equation}\]

Estimating parameters

In practice we do not know the parameters of the Gaussian distributions, and will need to estimate them using our training data:

- \(\hat\pi_k = N_k/N\),

- \(\hat\mu_k = \sum_{g_i = k} x_i/N_k\);

- \(\hat\Sigma = \sum_{k=1}^K \sum_{g_i=k}(x_i-\hat\mu_k)(x_i-\hat\mu_k)^T/(N-K)\).

Simple correspondence between LDA and linear regression with two classes

The LDA rule classifies to class 2 if

\[\delta_2(x)-\delta_1(x)>0\] or

\[ \begin{equation} x^T\hat\Sigma^{-1}(\hat\mu_2-\hat\mu_1) > \frac{1}{2}(\hat\mu_2+\hat\mu_1)^T\hat\Sigma^{-1}(\hat\mu_2-\hat\mu_1) - \log\frac{N_2}{N_1}, \end{equation} \] and class 1 otherwise. If we code the targets in the two classees as \(+1\) and \(-1\) respectively, then the coefficient vector from least squares is proportional to the LDA direction shown above (Exercise 4.2). However unless \(N_1=N_2\) the intercepts are different and hence the resulting decision rules are different.

If \(K>2\), LDA is not the same as linear regression of the class indicator matrix, and it avoids the masking problems (Hastie et al., 1994). A correspondence can be established through the notion of optimal scoring, discussed in \(\S\) 12.5.

Practice beyond the Gaussian assumption

Since the derivation of the LDA direction via least squares does not use a Gaussian assumption for the features, its applicability extends beyond the realm of Gaussian data. However the derivation of the particular intercept or cut-point given in the above LDA rule does require Gaussian data. Thus it makes sense to instead choose the cut-point that empirically minimizes training error for a given dataset. This something we have found to work well in practive, but have not seen it mentioned in the literature.

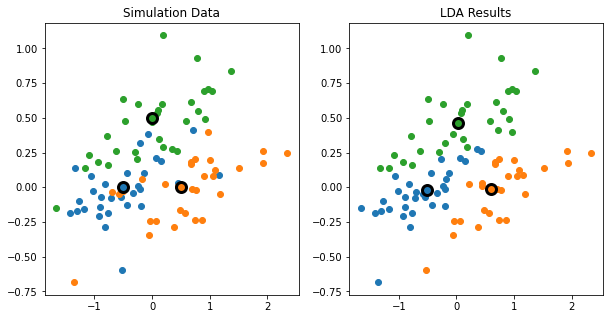

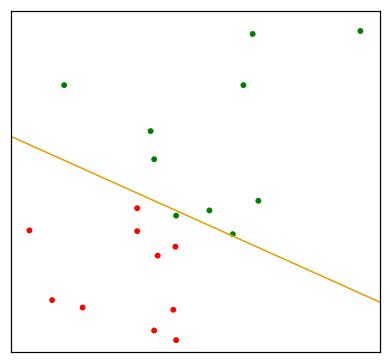

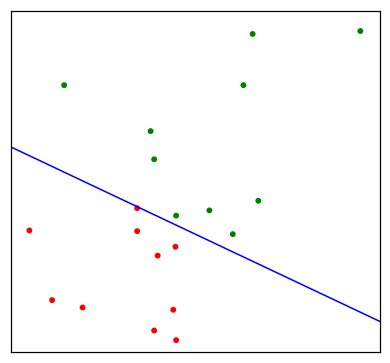

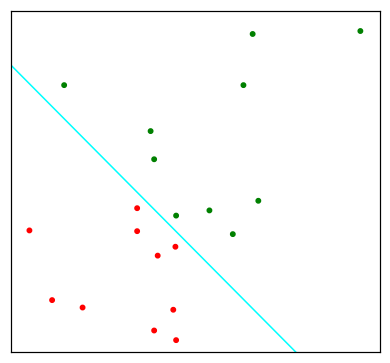

"""FIGURE 4.5. An idealized example with K=3, p=2, and a common covariance

Here the right panel shows the LDA classification results instead of the

decision boundaries."""size_cluster = 30

# mat_rand = scipy.rand(2, 2)

# cov = mat_rand.T @ mat_rand / 10

cov = np.array([[1.0876306, 0.2065698],

[0.2065698, 0.1157603]])/2

cluster1 = np.random.multivariate_normal([-.5, 0], cov, size_cluster)

cluster2 = np.random.multivariate_normal([.5, 0], cov, size_cluster)

cluster3 = np.random.multivariate_normal([0, .5], cov, size_cluster)

# Estimating parameters axis = 0 is the means of the rows

vec_mean1 = cluster1.mean(axis=0)

print(vec_mean1)

vec_mean2 = cluster2.mean(axis=0)

print(vec_mean2)

vec_mean3 = cluster3.mean(axis=0)

print(vec_mean3)

cluster_centered1 = cluster1 - vec_mean1

cluster_centered2 = cluster2 - vec_mean2

cluster_centered3 = cluster3 - vec_mean3

#mat_cov is 2X2 covariance matrix, which is the \hat\Sigma

mat_cov = (cluster_centered1.T @ cluster_centered1 +

cluster_centered2.T @ cluster_centered2 +

cluster_centered3.T @ cluster_centered3)/(3*size_cluster-3)

print(mat_cov)[-0.50612656 -0.01731414]

[ 0.59327288 -0.01532545]

[0.02818347 0.45984356]

[[0.53340587 0.10735396]

[0.10735396 0.05431232]]# Calculate linear discriminant scores

# mat_cov @ sigma_inv_mu123 = np.vstack((vec_mean1, vec_mean2, vec_mean3)).T

# sigma_inv_mu123 is \Sigma^{-1} @ u_{123}^T

sigma_inv_mu123 = scipy.linalg.solve(

mat_cov,

np.vstack((vec_mean1, vec_mean2, vec_mean3)).T,

)

print("sigma_inv_mu123")

print(sigma_inv_mu123)

print(sigma_inv_mu123.T)

sigma_inv_mu1, sigma_inv_mu2, sigma_inv_mu3 = sigma_inv_mu123.T

# mat_x is 90X2 matrix

# sigma_inv_mu123 is 2X3 matrix

mat_x = np.vstack((cluster1, cluster2, cluster3))

# mat_delta is 90X3 matrix

mat_delta = (mat_x @ sigma_inv_mu123 -

np.array((vec_mean1 @ sigma_inv_mu1.T,

vec_mean2 @ sigma_inv_mu2.T,

vec_mean3 @ sigma_inv_mu3.T))/2)

cluster_classified1 = mat_x[mat_delta.argmax(axis=1) == 0]

cluster_classified2 = mat_x[mat_delta.argmax(axis=1) == 1]

cluster_classified3 = mat_x[mat_delta.argmax(axis=1) == 2]sigma_inv_mu123

[[-1.46914484 1.9413035 -2.74196494]

[ 2.58512974 -4.1193617 13.88643346]]

[[-1.46914484 2.58512974]

[ 1.9413035 -4.1193617 ]

[-2.74196494 13.88643346]]fig45 = plt.figure(0, figsize=(10, 5))

ax1 = fig45.add_subplot(1, 2, 1)

ax1.plot(cluster1[:, 0], cluster1[:, 1], 'o', color='C0')

ax1.plot(cluster2[:, 0], cluster2[:, 1], 'o', color='C1')

ax1.plot(cluster3[:, 0], cluster3[:, 1], 'o', color='C2')

ax1.plot(-.5, 0, 'o', color='C0', markersize=10, markeredgecolor='black',

markeredgewidth=3)

ax1.plot(.5, 0, 'o', color='C1', markersize=10, markeredgecolor='black',

markeredgewidth=3)

ax1.plot(0, .5, 'o', color='C2', markersize=10, markeredgecolor='black',

markeredgewidth=3)

ax1.set_title('Simulation Data')

ax2 = fig45.add_subplot(1, 2, 2)

ax2.plot(cluster_classified1[:, 0], cluster_classified1[:, 1], 'o', color='C0')

ax2.plot(cluster_classified2[:, 0], cluster_classified2[:, 1], 'o', color='C1')

ax2.plot(cluster_classified3[:, 0], cluster_classified3[:, 1], 'o', color='C2')

ax2.plot(vec_mean1[0], vec_mean1[1], 'o', color='C0', markersize=10,

markeredgecolor='black', markeredgewidth=3)

ax2.plot(vec_mean2[0], vec_mean2[1], 'o', color='C1', markersize=10,

markeredgecolor='black', markeredgewidth=3)

ax2.plot(vec_mean3[0], vec_mean3[1], 'o', color='C2', markersize=10,

markeredgecolor='black', markeredgewidth=3)

ax2.set_title('LDA Results')

plt.show()

png

%%R

diag(1, 3, 3) [,1] [,2] [,3]

[1,] 1 0 0

[2,] 0 1 0

[3,] 0 0 1%%R

library(MASS)

library(mvtnorm)

#sigma = diag(1, 2, 2)

sigma = matrix(c(2,1,1,2), nrow = 2)

mu1 = c(-1.5, -0.2)

mu2 = c(1.5, -0.2)

mu3 = c(0, 2)

N = 1000

set.seed(123)

dm1 = mvrnorm(N, mu1, sigma)

dm2 = mvrnorm(N, mu2, sigma)

dm3 = mvrnorm(N, mu3, sigma)

# dmvnorm Calculates the probability density function of the multivariate normal distribution

z1 = dmvnorm(dm1, mu1, sigma)

z2 = dmvnorm(dm2, mu2, sigma)

z3 = dmvnorm(dm3, mu3, sigma)

lev1 = quantile(as.numeric(z1), 0.05)

lev2 = quantile(as.numeric(z2), 0.05)

lev3 = quantile(as.numeric(z3), 0.05)

myContour <- function(mu, sigma, level, col, n=300){

x.points <- seq(-10,10,length.out=n)

y.points <- x.points

z <- matrix(0,nrow=n,ncol=n)

for (i in 1:n) {

for (j in 1:n) {

z[i,j] <- dmvnorm(c(x.points[i],y.points[j]),

mean=mu,sigma=sigma)

}

}

contour(x.points,y.points,z, levels = level, col = col, xlim = c(-10, 10), ylim = c(-10, 10), labels = "")

}

myContour(mu1, sigma, lev1, "orange")

par(new=TRUE)

myContour(mu2, sigma, lev2, "blue")

par(new=TRUE)

myContour(mu3, sigma, lev3, "green")

points(rbind(mu1, mu2, mu3), pch="+", col="black")

png

%%R

N = 30

sigma = matrix(c(2,1,1,2), nrow = 2)

mu1 = c(-1.5, -0.2)

mu2 = c(1.5, -0.2)

mu3 = c(0, 2)

set.seed(123)

dm1 = mvrnorm(N, mu1, sigma)

dm2 = mvrnorm(N, mu2, sigma)

dm3 = mvrnorm(N, mu3, sigma)

m12 = lda(rbind(dm1, dm2), rep(c("c1","c2"), each=N))

m13 = lda(rbind(dm1, dm3), rep(c("c1","c3"), each=N))

m23 = lda(rbind(dm2, dm3), rep(c("c2","c3"), each=N))

calcY <- function(c, x) { return(-1*c[1]*x/c[2]) }

calcLD <- function(object) {

mu = object$means

mu.pool = colSums(mu)/2 ## (mu1+mu2)/2

scaling = object$scaling

intercept = sum(scaling * mu.pool)/scaling[2]

slope = -1* scaling[1]/scaling[2]

return(c(intercept, slope))

}

#plot

plot(dm1[, 1], dm1[, 2], col = "orange", pch="1",

xlim = c(-5, 5), ylim = c(-5, 5),

xaxt="n", yaxt="n", xlab = "", ylab = "")

points(dm2[, 1], dm2[, 2], col = "blue", pch="2")

points(dm3[, 1], dm3[, 2], col = "green", pch="3")

points(rbind(mu1, mu2, mu3), pch="+", col="black")

clip(-5,5,-5,0)

#abline(0, -1*m12$scaling[1]/m12$scaling[2])

abline(calcLD(m12))

clip(-5,0,-5,5)

#abline(0, -1*m13$scaling[1]/m13$scaling[2])

abline(calcLD(m13))

clip(0,5,-5,5)

#abline(0, -1*m23$scaling[1]/m23$scaling[2])

abline(calcLD(m23))

png

FIGURE 4.5. The upper panel shows three Gaussian distributions, with the same covariance and different means. Included are the contours of constant density enclosing 95% of the probability in each case. The Bayes decision boundaries between each pair of classes are shown (broken straight lines), and the Bayes decision boundaries separating all three classes are the thicker solid lines (a subset of the former). On the lower we see a sample of 30 drawn from each Gaussian distribution, and the fitted LDA decision boundaries.

%%R

## #######################################

## directly compute

## ######################################

## sample mean

zmu1 = colMeans(dm1)

zmu2 = colMeans(dm2)

zmu3 = colMeans(dm3)

## sample variance

zs1 = var(dm1)

zs2 = var(dm2)

zs3 = var(dm3)

zs12 = (zs1+zs2)/2 ## ((n1-1)S1+(n2-1)S2)/(n1+n2-2)

zs13 = (zs1+zs3)/2

zs23 = (zs2+zs3)/2

## #############################

## coef:

## a = S^{-1}(mu1-mu2)

## #############################

za12 = solve(zs12) %*% (zmu1-zmu2)

za12

za13 = solve(zs13) %*% (zmu1-zmu3)

za13

za23 = solve(zs23) %*% (zmu2-zmu3)

za23

## ############################

## constant

## 0.5*a'(mu1+mu2)

## ############################

c12 = sum(za12 * (zmu1+zmu2)/2)

c13 = sum(za13 * (zmu1+zmu3)/2)

c23 = sum(za23 * (zmu2+zmu3)/2)

calcLD2 <- function(za, c) {return(c(c/za[2], -za[1]/za[2]))}

calcLD2(za12, c12)

calcLD2(za13, c13)

calcLD2(za23, c23)

cat("for class 1 and class 2",

"\nuse lda results: ", calcLD(m12), "\ncompute directly: ", calcLD2(za12, c12),

"\n",

"\nfor class 1 and class 3",

"\nuse lda results: ", calcLD(m13), "\ncompute directly: ", calcLD2(za13, c13),

"\n",

"\nfor class 2 and class 3",

"\nuse lda results: ", calcLD(m23), "\ncompute directly: ", calcLD2(za23, c23))for class 1 and class 2

use lda results: -0.1122356 1.641163

compute directly: -0.1122356 1.641163

for class 1 and class 3

use lda results: 0.8667723 -0.009281836

compute directly: 0.8667723 -0.009281836

for class 2 and class 3

use lda results: 0.2141177 0.9654518

compute directly: 0.2141177 0.9654518Quadratic Discriminant Analysis

If the \(\Sigma_k\) are not assumed to be equal, then the convenient cancellations do not occur. We then get quadratic discriminant functions (QDA),

\[\begin{equation} \delta_k(x) =\log(p(x|\mathcal G_k))+\log(\pi_k) = -\frac{p}{2}\log(2\pi) -\frac{1}{2}\log|\Sigma_k| -\frac{1}{2}(x-\mu_k)^T\Sigma_k^{-1}(x-\mu_k) + \log\pi_k \end{equation}\]

The decision boundary between each pair of classes \(k\) and \(l\) is described by a quadratic equation \(\left\lbrace x: \delta_k(x) = \delta_l(x) \right\rbrace\).

This estimates for QDA are similar to those for LDA, except that separate covariance matrices must be estimated for each class. When \(p\) is large this can mean a dramatic increase in parameters.

Since the decision boundaries are functions of the parameters of the densities, counting the number of parameters must be done with care.

For LDA, it seems there are \((K-1)\times(p+1)\) paramters, since we only need the differences \(\delta_k(x)-\delta_K(x)\) between the discriminant functions where \(K\) is some pre-chosen class (here the last), and each difference requires \(p+1\) parameters. Likewise for QDA there will be \((K-1)\times\lbrace p(p+3)/2+1 \rbrace\) parameters.

Both LDA and QDA perform well on an amazingly large and diverse set of classification tasks.

Why LDA and QDA have such a good track record?

The data are approximately Gaussian, or for LDA the covariances are approximately equal? Maybe not.

More likely a reason is that the data can only support simple decision boundaries such as linear or quadratic, and the estimates provided via the Guassian models are stable.

This is a bias-variance tradeoff – we can put up with the bias of a linear decision boundary because it can be estimated with much lower variance than more exotic alternatives. This argument is less believable for QDA, since it can have many parameters itself, although perhaps fewer than the non-parametric alternatives.

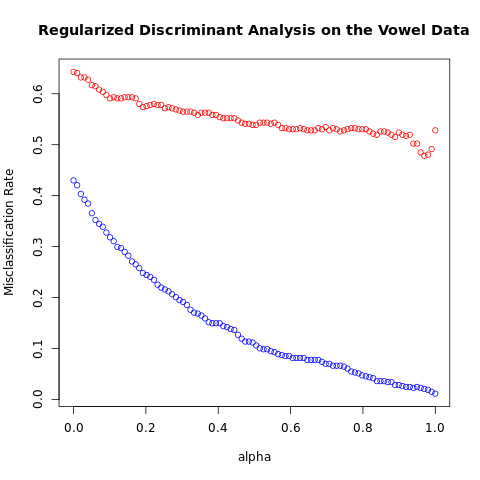

\(\S\) 4.3.1. Regularized Discriminant Analysis

\(\Sigma_k \leftrightarrow \Sigma\)

These methods are very similar in flavor to ridge regression. Friedman (1989) proposed a compromise between LDA and QDA, which allows one to shrink the separate covariances of QDA toward a common covariance \(\hat\Sigma\) as in LDA. The regularized covariance matrices have the form

\[\begin{equation} \hat\Sigma_k(\alpha) = \alpha\hat\Sigma_k + (1-\alpha)\hat\Sigma, \end{equation}\]

where \(\hat\Sigma\) is the pooled covariance matrix as used in LDA and \(\alpha\in[0,1]\) allows a continuum of models between LDA and QDA, and needs to be specified. In practice \(\alpha\) can be chosen based on the performance of the model on validation data, or by cross-validation.

\(\Sigma \leftrightarrow \sigma\)

Similar modifications allow \(\hat\Sigma\) itelf to be shrunk toward the scalar covariance,

\[\begin{equation} \hat\Sigma(\gamma) = \gamma\hat\Sigma + (1-\gamma)\hat\sigma^2\mathbf{I}, \end{equation}\]

for \(\gamma\in[0,1]\).

Combining two regularization leads to a more general family of covariances \[\hat\Sigma(\alpha,\gamma)=\alpha\hat\Sigma_k + (1-\alpha)\left(\gamma\hat\Sigma + (1-\gamma)\hat\sigma^2\mathbf{I}\right)\].

To be continued

In Chapter 12, we discuss other regularized versions of LDA, which are more suitable when the data arise from digitized analog signals and images. In these situations the features are high-dimensional and correlated, and the LDA coefficients can be regularized to be smooth or sparse in original domain of the signal.

In Chapter 18, we also deal with very high-dimensional problems, where for example, the features are gene-expression measurements in microarray studies.

%%R

repmat = function(X,m,n){

##R equivalent of repmat (matlab)

mx = dim(X)[1]

nx = dim(X)[2]

matrix(t(matrix(X,mx,nx*n)),mx*m,nx*n,byrow=T)

}%%R

rda = function( XTrain, yTrain, XTest, yTest, alpha=1.0, gamma=1.0 ){

#

# R code to implement classification using Regularized Discriminant Analysis

# Inputs:

# XTrain = training data frame

# yTrain = training labels of true classifications with indices 1 - K (where K is the number of classes)

# xTest = testing data frame

# yTest = testing response

#

# Note that

# gamma, alpha = (1.0, 1.0) gives quadratic discriminant analysis

# gamma, alpha = (1.0, 0.0) gives linear discriminant analysis

# Check that our class labels are all positive:

stopifnot( all( yTrain>0 ) )

stopifnot( all( yTest>0 ) )

K = length(unique(yTrain)) # the number of classes (expect the classes to be labeled 1, 2, 3, ..., K-1, K

N = dim( XTrain )[1] # the number of samples

p = dim( XTrain )[2] # the number of features

# Estimate \hat{sigma}^2 variance of all features:

#

XTM = as.matrix( XTrain )

dim(XTM) = prod(dim(XTM)) # we now have all data in one vector

sigmaHat2 = var(XTM) #\hat{\sigma}

# Compute the class independent covariance matrix:

#

SigmaHat = cov(XTrain) #\hat{\Sigma} 10X10 matrix

# Compute the class dependent mean vector and covariance matrices:

PiK = list()

MuHatK = list()

SigmaHatK = list()

for( ci in 1:K ){

inds = (yTrain == ci)

PiK[[ci]] = sum(inds)/N

MuHatK[[ci]] = as.matrix( colMeans( XTrain[ inds, ] ) ) # 10X1 matrix

SigmaHatK[[ci]] = cov( XTrain[ inds, ] )

}

# Blend the covariances as specified by Regularized Discriminant Analysis:

RDA_SigmaHatK = list()

for( ci in 1:K ){

RDA_SigmaHatK[[ci]] = alpha * SigmaHatK[[ci]] + ( 1 - alpha ) * ( gamma * SigmaHat + ( 1 - gamma ) * sigmaHat2 * diag(p) )

}

# Compute some of the things needed for classification via the discriminant functions:

#

RDA_SigmaHatK_Det = list()

RDA_SigmaHatK_Inv = list()

for( ci in 1:K ){

RDA_SigmaHatK_Det[[ci]] = det(RDA_SigmaHatK[[ci]])

RDA_SigmaHatK_Inv[[ci]] = solve(RDA_SigmaHatK[[ci]]) # there are numerically better ways of doing this but ...

}

# Classify Training data:

#

XTM = t(as.matrix( XTrain )) # dim= p x N

CDTrain = matrix( data=0, nrow=N, ncol=K ) # CDTrain = training class discriminants

for( ci in 1:K ){

MU = repmat( MuHatK[[ci]], 1, N ) # dim= p x N

X_minus_MU = XTM - MU # dim= p x N

SInv = RDA_SigmaHatK_Inv[[ci]] # dim= p x p

SX = SInv %*% X_minus_MU # dim= ( p x N ); S^{-1}(X-\mu)

for( si in 1:N ){

CDTrain[si,ci] = -0.5 * log(RDA_SigmaHatK_Det[[ci]]) - 0.5 * t(X_minus_MU[,si]) %*% SX[,si] + PiK[[ci]]

}

}

yHatTrain = apply( CDTrain, 1, which.max )

errRateTrain = sum( yHatTrain != yTrain )/N

# Classify Testing data:

#

N = dim( XTest )[1]

XTM = t(as.matrix( XTest )) # dim= p x N

CDTest = matrix( data=0, nrow=N, ncol=K ) # CDTest = testing class discriminants

for( ci in 1:K ){

MU = repmat( MuHatK[[ci]], 1, N ) # dim= p x N

X_minus_MU = XTM - MU # dim= p x N

SInv = RDA_SigmaHatK_Inv[[ci]] # dim= p x p

SX = SInv %*% X_minus_MU # dim= ( p x N )

for( si in 1:N ){

CDTest[si,ci] = -0.5 * log(RDA_SigmaHatK_Det[[ci]]) - 0.5 * t(X_minus_MU[,si]) %*% SX[,si] + log(PiK[[ci]])

}

}

yHatTest = apply( CDTest, 1, which.max )

errRateTest = sum( yHatTest != yTest )/N

return( list(yHatTrain,errRateTrain, yHatTest,errRateTest) )

}%%R

out = load_vowel_data( TRUE, FALSE )

XTrain = out[[1]]

yTrain = out[[2]]

XTest = out[[3]]

yTest = out[[4]]

alphas = seq(0.0,1.0,length.out=100)

err_rate_train = c()

err_rate_test = c()

for( apha in alphas ){

out = rda( XTrain, yTrain, XTest, yTest, apha )

err_rate_train = c(err_rate_train, out[[2]])

err_rate_test = c(err_rate_test, out[[4]])

}

plot( alphas, err_rate_train, type="p", col="blue", ylim=range(c(err_rate_train,err_rate_test)),

xlab="alpha", ylab="Misclassification Rate", main="Regularized Discriminant Analysis on the Vowel Data" )

lines( alphas, err_rate_test, type="p", col="red" )

min_err_rate_spot = which.min( err_rate_test )

print( sprintf( "Min test error rate= %10.6f; alpha= %10.6f",

err_rate_test[min_err_rate_spot], alphas[min_err_rate_spot] ) )

# run model selection with alpha and gamma models to combine to get Sigma_hat:

Nsamples = 100

alphas = seq(0.0,1.0,length.out=Nsamples)

gammas = seq(0.0,1.0,length.out=Nsamples)

err_rate_train = matrix( data=0, nrow=Nsamples, ncol=Nsamples )

err_rate_test = matrix( data=0, nrow=Nsamples, ncol=Nsamples )

for( ii in 1:Nsamples ){

a = alphas[ii]

for( jj in 1:Nsamples ){

g = gammas[jj]

out = rda( XTrain, yTrain, XTest, yTest, a, gamma=g )

err_rate_train[ii,jj] = out[[2]]

err_rate_test[ii,jj] = out[[4]]

}

}

inds = which( err_rate_test == min(err_rate_test), arr.ind=TRUE ); ii = inds[1]; jj = inds[2]

print( sprintf( "Min test error rate= %10.6f; alpha= %10.6f; gamma= %10.6f",

err_rate_test[ii,jj], alphas[ii], gammas[jj] ) )[1] "Min test error rate= 0.478355; alpha= 0.969697"

[1] "Min test error rate= 0.439394; alpha= 0.767677; gamma= 0.050505"

png

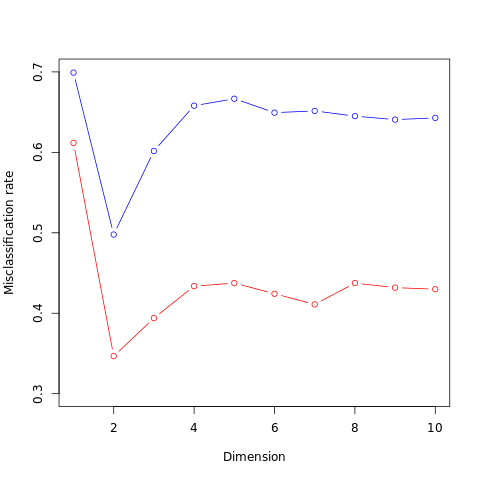

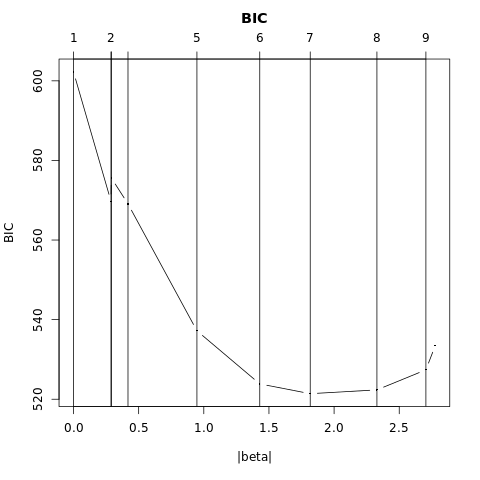

FIGURE 4.7. Test and training errors for the vowel data, using regularized discriminant analysis with a series of values of \(\alpha\in [0, 1]\). The optimum for the test data occurs around \(\alpha = 0.97\), close to quadratic discriminant analysis.

\(\S\) 4.3.2. Computations for LDA

Computations for LDA and QDA are simplified by diagonalizing \(\hat\Sigma\) or \(\hat\Sigma_k\). For the latter, suppose we compute the eigen-decomposition, for each \(k\),

\[\begin{equation} \hat\Sigma_k = \mathbf{U}_k\mathbf{D}_k\mathbf{U}_k^T, \end{equation}\]

where \(\mathbf{U}_k\) is \(p\times p\) orthogonal, and \(\mathbf{D}_k\) a diagonal matrix of positive eigenvalues \(d_{kl}\).

Then the ingredients for \(\delta_k(x)\) are

- \((x-\hat\mu_k)^T\hat\Sigma_k^{-1}(x-\hat\mu_k) = \left[\mathbf{U}_k^T(x-\hat\mu_k)\right]^T\mathbf{D}_k^{-1}\left[\mathbf{U}_k^T(x-\hat\mu_k)\right]\)

- \(\log|\hat\Sigma_k| = \sum_l \log d_{kl}\)

Note that the inversion of diagonal matrices only requires elementwise reciprocals.

The LDA classifier can be implemented by the following pair of steps:

- Sphere the data w.r.t. the common covariance estimate \(\hat\Sigma = \mathbf{U}\mathbf{D}\mathbf{U}^T\):

\[\begin{equation}

X^* \leftarrow \mathbf{D}^{-\frac{1}{2}}\mathbf{U}^TX,

\end{equation}\]

The common covariance estimate of \(X^*\) will now be the identity.

* Classify to the closest class centroid in the transformed space, modulo the effect of the class prior probabilities \(\pi_k\).

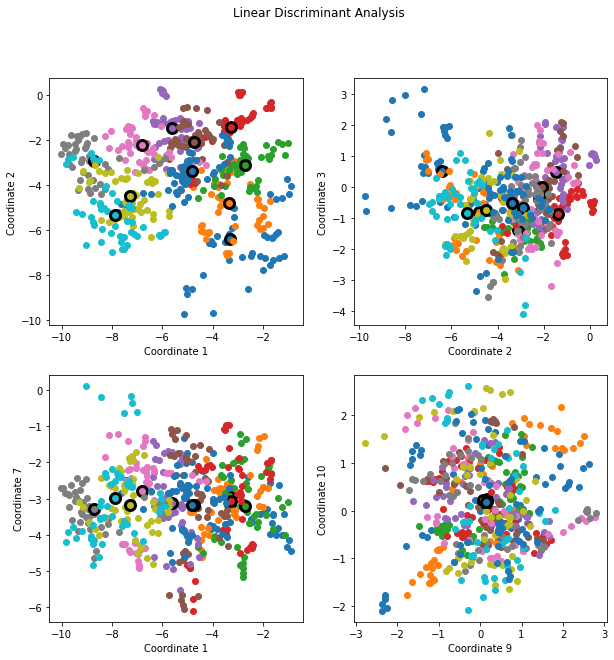

\(\S\) 4.3.3. Reduced-Rank Linear Discriminant Analysis

The \(K\) centroids in \(p\)-dimensional input space lie in an affine subspace of dimension \(\le K-1\), and if \(p \gg K\), then there will possibly be a considerable drop in dimension. Part of the popularity of LDA is due to such an additional restriction that allows us to view informative low-dimensional projections of the data.

Moreover, in locating the closest centroid, we can ignore distances orthogonal to this subspace, since they will contribute equally to each class. Thus we might just as well project the \(X^*\) onto this centroid-spanning subspace \(H_{K-1}\), and make distance comparisons there.

Therefore there is a fundamental dimension reduction in LDA, namely, that we need only consider the data in a subspace of dimension at most \(K-1\). If \(K=3\), e.g., this could allow us to view the data in \(\mathbb{R}^2\), color-coding the classes. In doing so we would not have relinquished any of the information needed for LDA classification.

What if \(K>3\)? Principal components subspace

We might then ask for a \(L<K-1\) dimensional subspace \(H_L \subseteq H_{K-1}\) optimal for LDA in some sense. Fisher defined optimal to mean that the projected centroids were spread out as much as possible in terms of variance. This amounts to finding principal component subspaces of the centroids themselves (\(\S\) 3.5.1, \(\S\) 14.5.1).

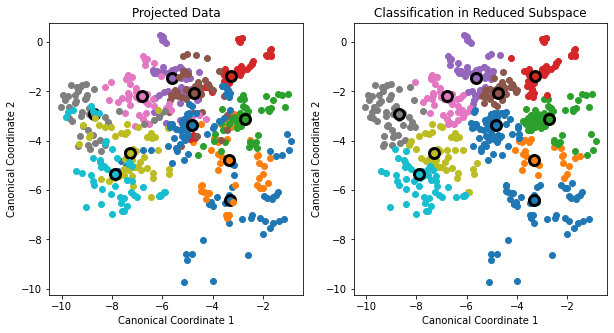

In FIGURE 4.4 with the vowel data, there are eleven classes, each a different vowel sound, in a 10D input space. The centroids require the full space in this case, since \(K-1=p\), but we have shown an optimal 2D subspace.

The dimensions are ordered, so we can compute additional dimensions in sequence. FIGURE 4.8 shows four additional pairs of coordinates, a.k.a. canonical or discriminant variables.

In summary then, finding the sequences of optimal subspaces for LDA involves the following steps:

- Compute the \(K\times p\) matrix of class centroids \(\mathbf{M}\)

the common covariance matrix \(\mathbf{W}\) (for within-class covariance). - Compute \(\mathbf{M}^* = \mathbf{MW}^{-\frac{1}{2}}\) using the eigen-decomposition of \(\mathbf{W}\).

- Compute \(\mathbf{B}^*\), the covariance matrix of \(\mathbf{M}^*\) (\(\mathbf{B}\) for between-class covariance),

and its eigen-decomposition \(\mathbf{B}^* = \mathbf{V}^*\mathbf{D}_B\mathbf{V}^{*T}\).

The columns \(v_l^*\) of \(\mathbf{V}^*\) in sequence from first to last define the coordinates of the optimal subspaces. - Then the \(l\)th discriminant variable is given by

\[\begin{equation} Z_l = v_l^TX \text{ with } v_l = \mathbf{W}^{-\frac{1}{2}}v_l^*. \end{equation}\]

"""FIGURE 4.8. Four projections onto pairs of canonical variates.

"""

df_vowel = pd.read_csv('../../data/vowel/vowel.train', index_col=0)

print('A pandas DataFrame of size {} x {} '

'has been loaded.'.format(*df_vowel.shape))

df_y = df_vowel.pop('y')

mat_x = df_vowel.values

df_vowelA pandas DataFrame of size 528 x 11 has been loaded.| x.1 | x.2 | x.3 | x.4 | x.5 | x.6 | x.7 | x.8 | x.9 | x.10 | |

|---|---|---|---|---|---|---|---|---|---|---|

| row.names | ||||||||||

| 1 | -3.639 | 0.418 | -0.670 | 1.779 | -0.168 | 1.627 | -0.388 | 0.529 | -0.874 | -0.814 |

| 2 | -3.327 | 0.496 | -0.694 | 1.365 | -0.265 | 1.933 | -0.363 | 0.510 | -0.621 | -0.488 |

| 3 | -2.120 | 0.894 | -1.576 | 0.147 | -0.707 | 1.559 | -0.579 | 0.676 | -0.809 | -0.049 |

| 4 | -2.287 | 1.809 | -1.498 | 1.012 | -1.053 | 1.060 | -0.567 | 0.235 | -0.091 | -0.795 |

| 5 | -2.598 | 1.938 | -0.846 | 1.062 | -1.633 | 0.764 | 0.394 | -0.150 | 0.277 | -0.396 |

| … | … | … | … | … | … | … | … | … | … | … |

| 524 | -4.065 | 2.876 | -0.856 | -0.221 | -0.533 | 0.232 | 0.855 | 0.633 | -1.452 | 0.272 |

| 525 | -4.513 | 4.265 | -1.477 | -1.090 | 0.215 | 0.829 | 0.342 | 0.693 | -0.601 | -0.056 |

| 526 | -4.651 | 4.246 | -0.823 | -0.831 | 0.666 | 0.546 | -0.300 | 0.094 | -1.343 | 0.185 |

| 527 | -5.034 | 4.993 | -1.633 | -0.285 | 0.398 | 0.181 | -0.211 | -0.508 | -0.283 | 0.304 |

| 528 | -4.261 | 1.827 | -0.482 | -0.194 | 0.731 | 0.354 | -0.478 | 0.050 | -0.112 | 0.321 |

528 rows × 10 columns

df_x_grouped = df_vowel.groupby(df_y)

size_class = len(df_x_grouped)

df_mean = df_x_grouped.mean()

print(df_mean)

print(df_vowel[df_y == 1].mean()) x.1 x.2 x.3 x.4 x.5 x.6 x.7 \

y

1 -3.359563 0.062937 -0.294062 1.203333 0.387479 1.221896 0.096375

2 -2.708875 0.490604 -0.580229 0.813500 0.201938 1.063479 -0.190917

3 -2.440250 0.774875 -0.798396 0.808667 0.042458 0.569250 -0.280062

4 -2.226604 1.525833 -0.874437 0.422146 -0.371313 0.248354 -0.018958

5 -2.756313 2.275958 -0.465729 0.225312 -1.036792 0.389792 0.236417

6 -2.673542 1.758771 -0.474562 0.350562 -0.665854 0.417000 0.162333

7 -3.243729 2.468354 -0.105063 0.396458 -0.980292 0.162312 0.019583

8 -4.051333 3.233979 -0.173979 0.396583 -1.046021 0.195187 0.086667

9 -3.876896 2.345021 -0.366833 0.317042 -0.394500 0.803375 0.025042

10 -4.506146 2.688563 -0.284917 0.469563 -0.038792 0.638875 0.139167

11 -2.990396 1.463875 -0.509812 0.371646 -0.380396 0.725042 -0.083396

x.8 x.9 x.10

y

1 0.037104 -0.624354 -0.161625

2 0.373813 -0.515958 0.080604

3 0.204958 -0.478271 0.181875

4 0.107146 -0.326271 -0.053750

5 0.424625 -0.200708 -0.280708

6 0.229250 -0.207500 0.052708

7 0.762292 -0.030271 -0.122396

8 0.820771 0.104458 0.021229

9 0.736146 -0.231833 -0.148104

10 0.387562 -0.111021 -0.273354

11 0.507667 -0.327500 -0.226729

x.1 -3.359563

x.2 0.062938

x.3 -0.294063

x.4 1.203333

x.5 0.387479

x.6 1.221896

x.7 0.096375

x.8 0.037104

x.9 -0.624354

x.10 -0.161625

dtype: float64def within_cov(df_grouped: pd.DataFrame,

df_mean: pd.DataFrame)->np.ndarray:

"""Compute the within-class covariance matrix"""

size_class = len(df_grouped)

dim = df_mean.columns.size

mat_cov = np.zeros((dim, dim))

n = 0

for (c, df), (_, mean) in zip(df_grouped, df_mean.iterrows()):

n += df.shape[0] # df is the grouped dataframe, n is the sum of the lengths of each group

mat_centered = (df - mean).values # 48 X 10 matrix for each group

mat_cov += mat_centered.T @ mat_centered # sum of the 10 X 10 within covariance matrix for each group.

return mat_cov/(n-size_class)mat_M = df_mean.values #K X p matrix of class centroids 𝐌

mat_W = within_cov(df_x_grouped, df_mean) # sum of the 10 X 10 within covariance matrix for each group.

#scipy.linalg.eigh() Find eigenvalues array and optionally eigenvectors array of matrix.

vec_D, mat_U = scipy.linalg.eigh(mat_W) # mat_W = mat_U @ np.diag(vec_D) @ mat_U.T

print(np.allclose(mat_U @ np.diag(vec_D) @ mat_U.T, mat_W))Truemat_W_inv_sqrt = (mat_U @ np.diag(np.sqrt(np.reciprocal(vec_D))) @

mat_U.T)

mat_Mstar = mat_M @ mat_W_inv_sqrt # Compute 𝐌∗=𝐌𝐖^{−1/2} using the eigen-decomposition of 𝐖; (K X p)X(p X p)=K X p

vec_Mstar_mean = mat_Mstar.mean(axis=0) # axis=0 mean along the rows, 1 X p dataframe

mat_Mstar_centered = mat_Mstar - vec_Mstar_mean # K X p dataframe

#Compute 𝐁∗ , the covariance matrix of 𝐌∗ ( 𝐁 for between-class covariance)

mat_Bstar = mat_Mstar_centered.T @ mat_Mstar_centered/(mat_Mstar.shape[0]-1)

#and its eigen-decomposition 𝐁∗=𝐕∗𝐃_𝐵𝐕∗𝑇

vec_DBstar, mat_Vstar = scipy.linalg.eigh(mat_Bstar)

#The columns 𝑣∗𝑙 of 𝐕∗ in sequence from first to last define the coordinates of the optimal subspaces.

mat_V = mat_W_inv_sqrt @ mat_Vstar # (p X p)X(p X p)=p X p

mat_x_canonical = mat_x @ mat_V # 528 X pfig48 = plt.figure(0, figsize=(10, 10))

ax11 = fig48.add_subplot(2, 2, 1)

ax12 = fig48.add_subplot(2, 2, 2)

ax21 = fig48.add_subplot(2, 2, 3)

ax22 = fig48.add_subplot(2, 2, 4)

for y in range(1, size_class+1):

mat_x_grouped = mat_x_canonical[df_y == y]

c = next(ax11._get_lines.prop_cycler)['color']

ax11.plot(mat_x_grouped[:, -1], mat_x_grouped[:, -2], 'o', color=c)

ax12.plot(mat_x_grouped[:, -2], mat_x_grouped[:, -3], 'o', color=c)

ax21.plot(mat_x_grouped[:, -1], mat_x_grouped[:, -7], 'o', color=c)

ax22.plot(mat_x_grouped[:, -9], mat_x_grouped[:, -10], 'o', color=c)

vec_centroid = mat_x_grouped.mean(axis=0)

ax11.plot(vec_centroid[-1], vec_centroid[-2], 'o', color=c,

markersize=10, markeredgecolor='black', markeredgewidth=3)

ax12.plot(vec_centroid[-2], vec_centroid[-3], 'o', color=c,

markersize=10, markeredgecolor='black', markeredgewidth=3)

ax21.plot(vec_centroid[-1], vec_centroid[-7], 'o', color=c,

markersize=10, markeredgecolor='black', markeredgewidth=3)

ax22.plot(vec_centroid[-9], vec_centroid[-10], 'o', color=c,

markersize=10, markeredgecolor='black', markeredgewidth=3)

ax11.set_xlabel('Coordinate 1')

ax11.set_ylabel('Coordinate 2')

ax12.set_xlabel('Coordinate 2')

ax12.set_ylabel('Coordinate 3')

ax21.set_xlabel('Coordinate 1')

ax21.set_ylabel('Coordinate 7')

ax22.set_xlabel('Coordinate 9')

ax22.set_ylabel('Coordinate 10')

fig48.suptitle('Linear Discriminant Analysis')

plt.show()

png

%%R

reduced_rank_LDA = function( XTrain, yTrain, XTest, yTest ){

#

# R code to implement classification using Regularized Discriminant Analysis

#

# See the section with the same name as this function in Chapter 4 from the book ESLII

#

# Inputs:

# XTrain = training data frame

# yTrain = training labels of true classifications with indices 1 - K (where K is the number of classes)

# xTest = testing data frame

# yTest = testing response

K = length(unique(yTrain)) # the number of classes (expect the classes to be labeled 1, 2, 3, ..., K-1, K

N = dim( XTrain )[1] # the number of samples

p = dim( XTrain )[2] # the number of features

# Compute the class dependent probabilities and class dependent centroids:

#

PiK = matrix( data=0, nrow=K, ncol=1 )

M = matrix( data=0, nrow=K, ncol=p )

ScatterMatrices = list()

for( ci in 1:K ){

inds = yTrain == ci

Nci = sum(inds)

PiK[ci] = Nci/N

M[ci,] = t( as.matrix( colMeans( XTrain[ inds, ] ) ) )

}

# Compute W:

#

W = cov( XTrain )

# Compute M^* = M W^{-1/2} using the eigen-decomposition of W :

#

e = eigen(W)

V = e$vectors # W = V %*% diag(e$values) %*% t(V)

W_Minus_One_Half = V %*% diag( 1./sqrt(e$values) ) %*% t(V)

MStar = M %*% W_Minus_One_Half

# Compute B^* the covariance matrix of M^* and its eigen-decomposition:

#

BStar = cov( MStar )

e = eigen(BStar)