- Chapter 3. Linear Methods for Regression

- \(\S\) 3.1. Introduction

- \(\S\) 3.2. Linear Regression Models and Least Squares

- The linear model

- Least squares fit

- Solution of least squares

- Geometrical representation of the least squares estimate

- Sampling properties of \(\hat{\beta}\)

- Inference and hypothesis testing

- Confidence intervals

- \(\S\) 3.2.1. Example: Prostate Cancer

- \(\S\) 3.2.2. The Gauss-Markov Theorem

- \(\S\) 3.2.3. Multiple Regression from Simple Univariate Regression

- \(\S\) 3.2.4. Multiple Outputs

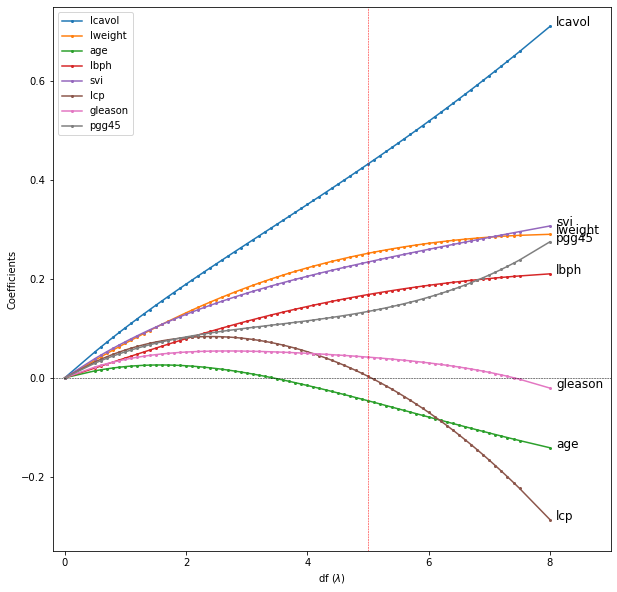

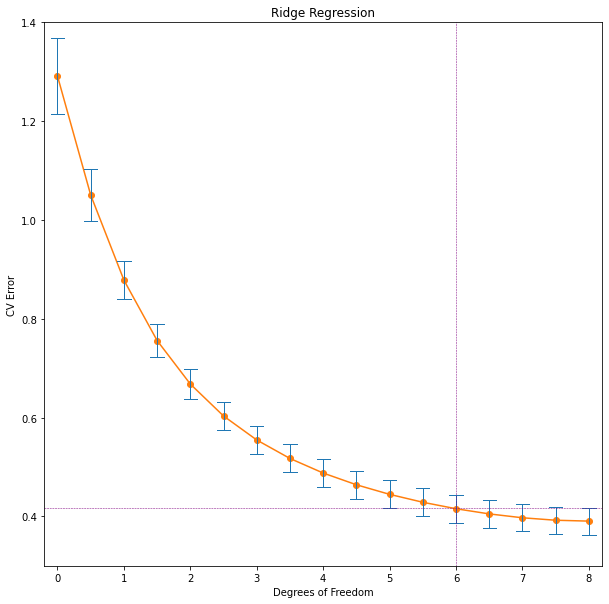

- \(\S\) 3.3. Subset Selection

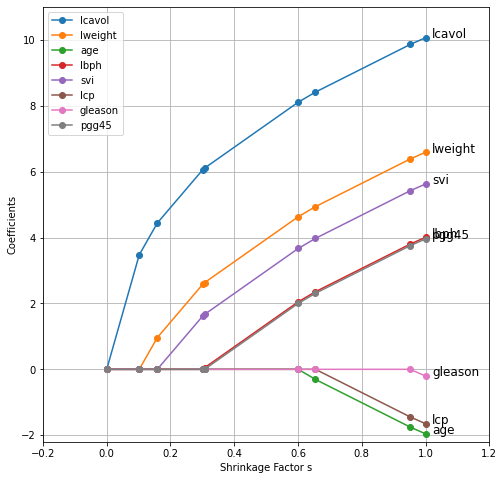

- \(\S\) 3.4. Shrinkage Methods

- \(\S\) 3.5. Methods Using Derived Input Directions

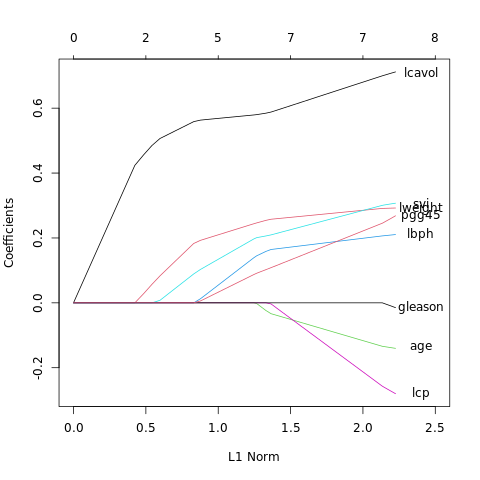

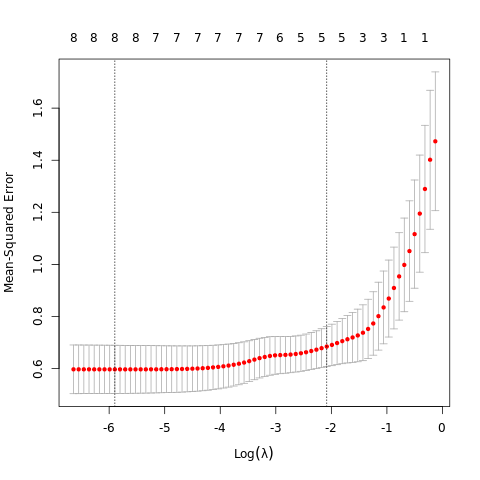

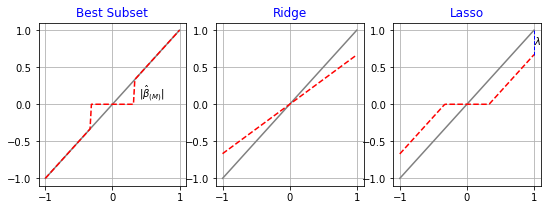

- \(\S\) 3.6. Discussion: A Comparison of the Selection and Shrinkage Methods

- \(\S\) 3.7 Multiple Outcome Shrinkage and Selection

- \(\S\) 3.8 More on the Lasso and Related Path Algorithms

- \(\S\) Exercises

- \(\S\) Ex. 3.1 (the F-statistic is equivalent to the square of the Z-score)

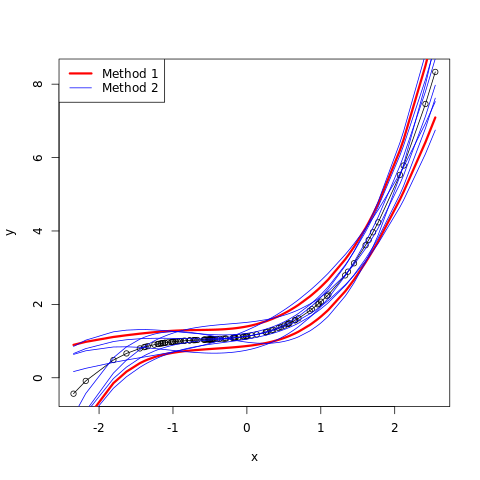

- \(\S\) Ex. 3.2 (confidence intervals on a cubic equation)

- \(\S\) Ex. 3.3 (the Gauss-Markov theorem)

- \(\S\) Ex. 3.4 (the vector of least squares coefficients from Gram-Schmidt)

- \(\S\) Ex. 3.5 (an equivalent problem to ridge regression)

- \(\S\) Ex. 3.6 (the ridge regression estimate)

- \(\S\) Ex. 3.7

- \(\S\) Ex. 3.8

- \(\S\) Ex. 3.9 (using the QR decomposition for fast forward-stepwise selection)

- References

Normal, Student’s Distributions

from scipy.stats import norm, t

import numpy as np

from matplotlib import pyplot as plt

from mpl_toolkits.mplot3d import Axes3D

%matplotlib inline# create frozen RVs for standard normal and Student's with 30, 100 degrees of freedom

rv_t30, rv_t100, rv_norm = t(30), t(100), norm()# calculate tail probabilities Pr(|Z|>z) at some points

Z = np.linspace(1.8, 3, 13)

tail_probs = {rv_t30: [], rv_t100: [], rv_norm: []}

for z in Z:

for rv in tail_probs:

tail_probs[rv].append(1 - rv.cdf(z))

# FIGURE 3.3. explains that normal distribution is good for testing significance

for rv in tail_probs:

plt.plot(Z, tail_probs[rv])

png

for rv in tail_probs:

print(rv)<scipy.stats._distn_infrastructure.rv_frozen object at 0x7fb068298fd0>

<scipy.stats._distn_infrastructure.rv_frozen object at 0x7fb0913d9730>

<scipy.stats._distn_infrastructure.rv_frozen object at 0x7fb0913d9a60>F-distribution

from scipy.stats import f

rv_f4_58 = f(4, 58)

# 3.16 F statistics calculation

F = ((32.81-29.43)/(9-5)) / (29.43/(67-9))

# tail probability

1 - rv_f4_58.cdf(F)0.1703876176583532Mean, Variance, Standard Deviation

values = np.array([-14.82381293, -0.29423447, -13.56067979, -1.6288903, -0.31632439,

0.53459687, -1.34069996, -1.61042692, -4.03220519, -0.24332097])print('mean: ', np.mean(values))

print('variance: ', np.var(values))

print('standard deviation:', np.std(values))

print('---- unbiased estimates ----')

# ddof means Delta Degrees of Freedom

print('variance: ', np.var(values, ddof=1))

print('standard deviation:', np.std(values, ddof=1))mean: -3.7315998049999997

variance: 28.822364260579157

standard deviation: 5.36864640860051

---- unbiased estimates ----

variance: 32.024849178421285

standard deviation: 5.659050201086865Variance-Covariance, Correlation Matrix

import pandas as pddata = pd.read_csv("../../data/Prostate Cancer.txt")

names = ['lcavol', 'lweight', 'age', 'lbph', 'svi', 'lcp', 'gleason', 'pgg45']

X = data[names].values

len(X)97# correlation matrix using pandas

data[names].corr()| lcavol | lweight | age | lbph | svi | lcp | gleason | pgg45 | |

|---|---|---|---|---|---|---|---|---|

| lcavol | 1.000000 | 0.280521 | 0.225000 | 0.027350 | 0.538845 | 0.675310 | 0.432417 | 0.433652 |

| lweight | 0.280521 | 1.000000 | 0.347969 | 0.442264 | 0.155385 | 0.164537 | 0.056882 | 0.107354 |

| age | 0.225000 | 0.347969 | 1.000000 | 0.350186 | 0.117658 | 0.127668 | 0.268892 | 0.276112 |

| lbph | 0.027350 | 0.442264 | 0.350186 | 1.000000 | -0.085843 | -0.006999 | 0.077820 | 0.078460 |

| svi | 0.538845 | 0.155385 | 0.117658 | -0.085843 | 1.000000 | 0.673111 | 0.320412 | 0.457648 |

| lcp | 0.675310 | 0.164537 | 0.127668 | -0.006999 | 0.673111 | 1.000000 | 0.514830 | 0.631528 |

| gleason | 0.432417 | 0.056882 | 0.268892 | 0.077820 | 0.320412 | 0.514830 | 1.000000 | 0.751905 |

| pgg45 | 0.433652 | 0.107354 | 0.276112 | 0.078460 | 0.457648 | 0.631528 | 0.751905 | 1.000000 |

# correlation matrix using numpy

np.set_printoptions(precision=2, suppress=True)

np.corrcoef(X, rowvar=False)array([[ 1. , 0.28, 0.22, 0.03, 0.54, 0.68, 0.43, 0.43],

[ 0.28, 1. , 0.35, 0.44, 0.16, 0.16, 0.06, 0.11],

[ 0.22, 0.35, 1. , 0.35, 0.12, 0.13, 0.27, 0.28],

[ 0.03, 0.44, 0.35, 1. , -0.09, -0.01, 0.08, 0.08],

[ 0.54, 0.16, 0.12, -0.09, 1. , 0.67, 0.32, 0.46],

[ 0.68, 0.16, 0.13, -0.01, 0.67, 1. , 0.51, 0.63],

[ 0.43, 0.06, 0.27, 0.08, 0.32, 0.51, 1. , 0.75],

[ 0.43, 0.11, 0.28, 0.08, 0.46, 0.63, 0.75, 1. ]])# variance-covariance

np.cov(X, rowvar=False)array([[ 1.39, 0.14, 1.97, 0.05, 0.26, 1.11, 0.37, 14.42],

[ 0.14, 0.18, 1.11, 0.27, 0.03, 0.1 , 0.02, 1.3 ],

[ 1.97, 1.11, 55.43, 3.78, 0.36, 1.33, 1.45, 57.98],

[ 0.05, 0.27, 3.78, 2.1 , -0.05, -0.01, 0.08, 3.21],

[ 0.26, 0.03, 0.36, -0.05, 0.17, 0.39, 0.1 , 5.34],

[ 1.11, 0.1 , 1.33, -0.01, 0.39, 1.96, 0.52, 24.91],

[ 0.37, 0.02, 1.45, 0.08, 0.1 , 0.52, 0.52, 15.31],

[ 14.42, 1.3 , 57.98, 3.21, 5.34, 24.91, 15.31, 795.47]])# Use StandardScaler() function to standardize features by removing the mean and scaling to unit variance

# The standard score of a sample x is calculated as:

# z = (x - u) / s

# where u is the mean of the training samples or zero if with_mean=False, and s is the standard deviation of the training samples

# or one if with_std=False.

# Use fit(X[, y, sample_weight]) to compute the mean and std to be used for later scaling.

# transform(X[, copy]) Perform standardization by centering and scaling

from sklearn.preprocessing import StandardScaler

scaler = StandardScaler()

scaler.fit(X)

X_transformed = scaler.transform(X)

# transformed variance-covariance matrix (nearly equals to correlation matrix)

np.cov(X_transformed, rowvar=False)array([[ 1.01, 0.28, 0.23, 0.03, 0.54, 0.68, 0.44, 0.44],

[ 0.28, 1.01, 0.35, 0.45, 0.16, 0.17, 0.06, 0.11],

[ 0.23, 0.35, 1.01, 0.35, 0.12, 0.13, 0.27, 0.28],

[ 0.03, 0.45, 0.35, 1.01, -0.09, -0.01, 0.08, 0.08],

[ 0.54, 0.16, 0.12, -0.09, 1.01, 0.68, 0.32, 0.46],

[ 0.68, 0.17, 0.13, -0.01, 0.68, 1.01, 0.52, 0.64],

[ 0.44, 0.06, 0.27, 0.08, 0.32, 0.52, 1.01, 0.76],

[ 0.44, 0.11, 0.28, 0.08, 0.46, 0.64, 0.76, 1.01]])# We can also use StandardScaler().fit_transform()

X_transformed = StandardScaler().fit_transform(X)

# transformed variance-covariance matrix (nearly equals to correlation matrix)

np.cov(X_transformed, rowvar=False)array([[ 1.01, 0.28, 0.23, 0.03, 0.54, 0.68, 0.44, 0.44],

[ 0.28, 1.01, 0.35, 0.45, 0.16, 0.17, 0.06, 0.11],

[ 0.23, 0.35, 1.01, 0.35, 0.12, 0.13, 0.27, 0.28],

[ 0.03, 0.45, 0.35, 1.01, -0.09, -0.01, 0.08, 0.08],

[ 0.54, 0.16, 0.12, -0.09, 1.01, 0.68, 0.32, 0.46],

[ 0.68, 0.17, 0.13, -0.01, 0.68, 1.01, 0.52, 0.64],

[ 0.44, 0.06, 0.27, 0.08, 0.32, 0.52, 1.01, 0.76],

[ 0.44, 0.11, 0.28, 0.08, 0.46, 0.64, 0.76, 1.01]])QR Factorization

# np.random.randn() Return a sample (or samples) from the “standard normal” distribution.

a = np.random.randn(9, 6)

# a = q@r; where q^T @ q == I, r is upper triangular

# np.linalg.qr() Compute the qr factorization of a matrix.

# Factor the matrix a as qr, where q is orthonormal and r is upper-triangular.

q, r = np.linalg.qr(a)

print(a, '\n\n', q, '\n\n', r)[[ 0.58 -0.31 0.74 1.01 1.53 -0.71]

[ 0.68 1.46 0.83 -0.44 -1.04 -0.46]

[-0.87 0.15 -1.26 -0.36 -0.73 -0.18]

[-0.12 0.67 -0.85 -0.95 0.42 -0.72]

[-1.26 0.43 -0.29 0.47 0.33 -0.45]

[ 0.84 -0.02 -0.17 -1.4 0.31 1.66]

[-0.51 -0.09 -0.58 -0.97 -0.56 0.14]

[-1.72 1.39 1.41 0.22 -0.33 0.38]

[-0.65 -0.77 0.92 0.77 0.56 -0.35]]

[[-0.21 0.08 0.32 0.35 -0.55 0.12]

[-0.25 -0.73 0.17 0.04 0.3 0.33]

[ 0.32 0.03 -0.5 0.12 0.2 -0.06]

[ 0.04 -0.29 -0.4 -0.07 -0.58 0.49]

[ 0.46 -0.06 -0.15 0.3 -0.29 -0.15]

[-0.31 -0.08 -0.07 -0.64 -0.36 -0.45]

[ 0.19 0.1 -0.21 -0.5 0.11 0.4 ]

[ 0.63 -0.44 0.42 -0.22 -0.11 -0.23]

[ 0.24 0.42 0.45 -0.23 -0.03 0.45]]

[[-2.74 0.65 0.12 0.53 -0.4 -0.35]

[ 0. -2.23 -0.59 0.87 1.01 0.08]

[ 0. 0. 2.55 1.49 0.67 -0. ]

[ 0. 0. 0. 1.66 0.5 -1.51]

[ 0. 0. 0. 0. -1.79 0.15]

[ 0. 0. 0. 0. 0. -1.44]]Chapter 3. Linear Methods for Regression

\(\S\) 3.1. Introduction

A linear regression model assumes that the regression function \(\text{E}(Y|X)\) is linear in the inputs \(X_1,\cdots,X_p\). Linear models were largely developed in the precomputer age of statistics, but even in today’s computer era there are still good reasons to study and use them. They are simple and often provide an adequate and interpretable description of how the inputs affect the output.

For prediction purposes they can sometimes outperform fancier nonlinear models, especially in situations with small numbers of training cases, low signal-to-noise ratio or sparse data.

Finally, linear methods can be applied to transformations of the inputs and this considerably expands their scope. These generalization are sometimes called basis-function methods (Chapter 5).

In this chapter we describe linear methods for regression, while in the next chapter we discuss linear methods for classification.

On some topics we go into considerable detail, as it is out firm belief that an understanding of linear methods is essential for understanding nonlinear ones.

In fact, many nonlinear techniques are direct generalizations of the linear methods discussed here.

\(\S\) 3.2. Linear Regression Models and Least Squares

The linear model

We have

- an input vector \(X^T = (X_1, X_2, \cdots, X_p)\) and

- a real-valued output \(Y\) to predict.

The linear regression model has the form with unknown parameters \(\beta_j\)’s,

\[\begin{equation} f(X) = \beta_0 + \sum_{j=1}^p X_j\beta_j. \end{equation}\]

The linear model either assumes that the regression function \(\text{E}(Y|X)\) is linear, or that the linear model is a reasonable approximation.

The variable \(X_j\) can come from different sources:

- Quantitative inputs, and its transformations, e.g., log, squared-root, square,

- basis expansions, e.g., \(X_2=X_1^2, X_3=X_1^3\), leading to a polynomial representation,

- numeric or “dummy” coding of the levels of qualitative inputs.

For example, if \(G\) is a five-level factor input, we might create \(X_j=I(G=j),\) for \(j = 1,\cdots,5\). - Interactions between variables, e.g., \(X_3=X_1\cdot X_2\).

No matter the source of the \(X_j\), the model is linear in the parameters.

Least squares fit

Typically we have a set of training data

- \((x_1, y_1), \cdots, (x_N, y_N)\) from which to estimate the parameters \(\beta\).

- Each \(x_i = (x_{i1}, x_{i2}, \cdots, x_{ip})^T\) is a vector of feature measurements for the \(i\)th case.

The most popular estimation method is least squares, in which we pick coefficients \(\beta=(\beta_0,\beta_1,\cdots,\beta_p)^T\) to minimize the residual sum of squares

\[\begin{align} \text{RSS}(\beta) &= \sum_{i=1}^N\left(y_i - f(x_i)\right)^2 \\ &= \sum_{i=1}^N \left(y_i - \beta_0 - \sum_{j=1}^px_{ij}\beta_j\right)^2. \end{align}\]

From a statistical point of view, this criterion is reasonable if the training observations \((x_i,y_i)\) represent independent random draws from their population. Even if the \(x_i\)’s were not drawn randomly, the criterion is still valid if the \(y_i\)’s are conditionally independent given the inputs \(x_i\).

See FIGURE 3.1 in the textbook for illustration of the geometry of least-squares fitting in \(\mathbb{R}^{p+1}\) space occupied by the pairs \((X,Y)\).

Note that RSS makes no assumptions about the validity of the linear model; it simply finds the best linear fit to the data. Least squares fitting is intuitively satisfying no matter how the data arise; the criterion measures the average lack of fit.

Solution of least squares

How do we minimize RSS?

Denote

- \(\mathbf{X}\) the \(N\times(p+1)\) matrix with each row an input vector (with a 1 in the first position),

- \(\mathbf{y}\) the \(N\)-vector of outputs in the training set.

Then we can write RSS as

\[\begin{equation} \text{RSS}(\beta) = \left(\mathbf{y}-\mathbf{X}\beta\right)^T\left(\mathbf{y}-\mathbf{X}\beta\right) = \|\mathbf{y}-\mathbf{X}\beta\|^2. \end{equation}\]

This is a quadratic function in the \(p+1\) parameters. Differentiating w.r.t. \(\beta\) we obtain

\[\begin{align} \frac{\partial\text{RSS}}{\partial\beta} &= -2\mathbf{X}^T\left(\mathbf{y}-\mathbf{X}\beta\right) \\ \frac{\partial^2\text{RSS}}{\partial\beta\partial\beta^T} &= 2\mathbf{X}^T\mathbf{X} \end{align}\]

Assuming (for the moment) that \(\mathbf{X}\) has full column rank, and hence \(\mathbf{X}^T\mathbf{X}\) is positive definite (therefore it is guaranteed to the unique minimum exists), we set the first derivative to zero

\[\begin{equation} \mathbf{X}^T(\mathbf{y}-\mathbf{X}\beta) = 0 \end{equation}\]

to obtain the unique solution

\[\begin{equation} \hat\beta = \left(\mathbf{X}^T\mathbf{X}\right)^{-1}\mathbf{X}^T\mathbf{y}. \end{equation}\]

The predicted values at an input vector \(x_0\) are given by

\[\begin{equation} \hat{f}(x_0) = (1:x_0)^T\hat\beta, \end{equation}\]

and fitted values at the training samples are

\[\begin{equation} \hat{y} = \mathbf{X}\hat\beta = \mathbf{X}\left(\mathbf{X}^T\mathbf{X}\right)^{-1}\mathbf{X}^T\mathbf{y} = \mathbf{H}\mathbf{y}, \end{equation}\]

where \(\hat{y_i}=\hat{f}(x_i)\). The matrix

\[\begin{equation} \mathbf{H} = \mathbf{X}\left(\mathbf{X}^T\mathbf{X}\right)^{-1}\mathbf{X}^T \end{equation}\]

is sometimes called the “hat” matrix or projection metrix because it puts the hat on \(\mathbf{y}\) and project \(\mathbf{y}\) to \(\hat{\mathbf{y}}\) in \(col(\mathbf{X})\).

Geometrical representation of the least squares estimate

FIGURE 3.2 shows a different geometrical representation of the least squares estimate, this time in \(\mathbb{R}^N\).

We denote the column vector of \(\mathbf{X}\) by \(\mathbf{x}_0\), \(\mathbf{x}_1\), \(\cdots\), \(\mathbf{x}_p\), with \(\mathbf{x}_0 \equiv 1\). For much of what follows, this first column is treated like any other.

These vectors span a subspace of \(\mathbb{R}^N\), also referred to as the column space of \(\mathbf{X}\) denoted by \(\text{col}(\mathbf{X})\). We minimize RSS by choosing \(\hat\beta\) so that the residual vector \(\mathbf{y}-\hat{\mathbf{y}}\) is orthogonal to this subspace. This orthogonality is expressed in

\[\begin{equation} \mathbf{X}^T(\mathbf{y}-\mathbf{X}\beta) = 0, \end{equation}\]

and the resulting estimate \(\hat{\mathbf{y}}\) is hence the orthogonal projection of \(\mathbf{y}\) onto this subspace. The hat matrix \(\mathbf{H}\) computes the orthogonal projection, and hence it is also known as a projection matrix.

Rank deficiency

It might happen that the columns of \(\mathbf{X}\) are not linearly independent, so that \(\mathbf{X}\) is not of full rank. This would occur, for example, if two of the inputs were perfectly correlated, e.g., \(\mathbf{x}_2=3\mathbf{x}_1\).

Then \(\mathbf{X}^T\mathbf{X}\) is singular and the least squares coefficients \(\hat\beta\) are not uniquely defined. However, the fitted values \(\hat{\mathbf{y}}=\mathbf{X}\hat\beta\) are still the projection of \(\mathbf{y}\) onto the \(\text{col}(\mathbf{X})\); there are just more than one way to express that projection in terms of the column vectors of \(\mathbf{X}\).

The non-full-rank case occurs most often when one or more qualitative inputs are coded in a redundant fashion.

There is usually a natural way to resolve the non-unique representation, by recording and/or dropping redundant columns in \(\mathbf{X}\). Most regression software packages detect these redundancies and automatically implement some strategy for removing them.

Rank deficientcies can also occur in signal and image analysis, where the number of inputs \(p\) can exceed the number of training cases \(N\). In this case, the features are typically reduced by filtering or else the fitting is controlled by regularization (\(\S\) 5.2.3 and Chapter 18).

Sampling properties of \(\hat{\beta}\)

Up to now we have made minimal assumptions about the true distribution of the data. In order to pin down the sampling properties of \(\hat\beta\), we now assume that

- the observations \(y_i\) are uncorrelated and

- \(y_i\) have constant variance \(\sigma^2\),

- the \(x_i\) are fixed (nonrandom).

\[\begin{align} \text{E}(\hat{\beta})&=\text{E}\Bigl(\left(\mathbf{X}^T\mathbf{X}\right)^{-1}\mathbf{X}^T\mathbf{y}\Bigr)\\ &=\Bigl(\left(\mathbf{X}^T\mathbf{X}\right)^{-1}\mathbf{X}^T\Bigr)\text{E}(\mathbf{y})\\ &=(\mathbf{X}^T\mathbf{X})^{-1}\mathbf{X}^T\mathbf{X}\beta=\beta \end{align}\]

\[\begin{align} \hat{\beta}-\text{E}(\hat{\beta})&=\Bigl(\left(\mathbf{X}^T\mathbf{X}\right)^{-1}\mathbf{X}^T\mathbf{y}\Bigr)-\Bigl(\left(\mathbf{X}^T\mathbf{X}\right)^{-1}\mathbf{X}^T\mathbf{X}\beta\Bigr)\\ &=\left(\mathbf{X}^T\mathbf{X}\right)^{-1}\mathbf{X}^T(\mathbf{y}-\mathbf{X}\beta)\\ &=\left(\mathbf{X}^T\mathbf{X}\right)^{-1}\mathbf{X}^T\epsilon \end{align}\] where \(\epsilon\) is a random column vector of dimension \(N\).

The variance-covariance matrix of the least squares estmiates is

\[\begin{align} \text{Var}(\hat{\beta}) &= \text{Var}\left(\left(\mathbf{X}^T\mathbf{X}\right)^{-1}\mathbf{X}^T\mathbf{y}\right) \\ &= \left(\mathbf{X}^T\mathbf{X}\right)^{-1}\mathbf{X}^T\text{Var}\left(\mathbf{y}\right)\mathbf{X}\left(\mathbf{X}^T\mathbf{X}\right)^{-1} \\ &= \left(\mathbf{X}^T\mathbf{X}\right)^{-1}\sigma^2. \end{align}\]

Typically one estimates the variance \(\sigma^2\) by

\[\begin{equation} \hat\sigma^2 = \frac{1}{N-p-1}\sum_{i=1}^N\left(y_i-\hat{y}_i\right)^2\\ =\frac{1}{N-p-1}\sum_{i=1}^N\left(y_i-x_i^T\hat{\beta}\right)^2, \end{equation}\]

where the denominator \(N-p-1\) rather than \(N\) makes \(\hat\sigma^2\) an unbiased estimate of \(\sigma^2\):

\[\begin{equation} \text{E}(\hat\sigma^2) = \sigma^2. \end{equation}\]

If \(x\) is the standard variable in \(N(0, \sigma^2)\), then \(E(x^2) = \sigma^2\). It follows that the distance squared from origin in \(V\), \(\sum_{i=1}^k v_i^2\), has expectation \(k\sigma^2\). We now use the fact that in ordinary least squares \(\mathbf{\hat{y}}\) is the orthogonal projection of \(\mathbf{y}\) onto the column space of \(\mathbf{X}\) as a subspace of \(\mathbb R^N\). Under our assumption of the independence of the columns of \(\mathbf{X}\) this space has dimension \(p+1\). In the notation above \(\hat{\mathbf{y}}\in V\) with \(V\) the column space of \(\mathbf{X}\) and \(\mathbf{y}−\hat{\mathbf{y}} \in W\), where \(W\) is the orthogonal complement of the column space of \(\mathbf{X}\). Because \(\mathbf{y} \in \mathbb R^N\) and \(V\) is of dimension \(p + 1\), we know that \(W\) has dimension \(N − p − 1\) and \(\mathbf{y}−\hat{\mathbf{y}}\) is a random vector in \(W\) with distribution \(N(0, \sigma^2\mathbf{I}_{N−p−1})\). The sum of squares of the \(N\) components of \(\mathbf{y}−\hat{\mathbf{y}}\) is the square of the distance in \(W\) to the origin. Therefore \(\sum_{i=1}^{N}(\mathbf{y}_i−\hat{\mathbf{y}}_i)^2\) has expectation \((N − p − 1)\sigma^2\).

Inference and hypothesis testing

To draw inferences about the parameters and the model, additional assumptions are needed. We now assume that

- the linear model $f(X) = 0 + {j=1}^p X_j_j $ is the correct model for the mean. i.e., the conditional expectation of \(Y\) is linear in \(X\);

- the deviations of \(Y\) around its expectation are additive and Gaussian.

Hence

\[\begin{align} Y &= \text{E}\left(Y|X_1,\cdots,X_p\right)+\epsilon \\ &= \beta_0 + \sum_{j=1}^p X_j\beta_j + \epsilon, \end{align}\]

where \(\epsilon\sim N(0,\sigma^2)\). Then it is easy to show that

\[\begin{align} \hat\beta\sim N\left(\beta, \left(\mathbf{X}^T\mathbf{X}\right)^{-1}\sigma^2\right), \\ (N-p-1)\hat\sigma^2\sim\sigma^2\chi^2_{N-p-1}. \end{align}\]

In addition \(\hat\beta\) and \(\hat\sigma^2\) are statistically independent.

We use these distributional properties to form tests of hypothesis and confidence intervals for the parameters \(\beta_j\). To test the null hypothesis \(\mu_0: \beta_j=0\), we form the standardized coefficient or Z-score

\[\begin{equation} z_j = \frac{\hat\beta_j}{\hat\sigma\sqrt{v_j}}, \end{equation}\]

where \(v_j = \left(\mathbf{X}^T\mathbf{X}\right)^{-1}_{jj}\).

Under the null hypothesis, \(z_j\sim t_{N-p-1}\), and hence a large (absolute) value of \(z_j\) will lead to rejection of this null hypothesis.

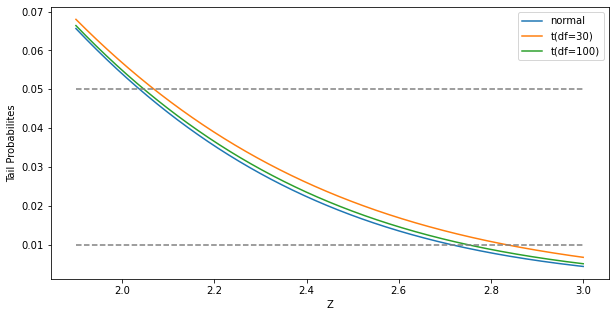

If \(\hat\sigma\) is replaced by a known value \(\sigma\), then \(z_j\) would have a standard normal distribution. The difference between the tail quatiles of \(t\)-distribution and a standard normal distribution become negligible as the sample size increases, so we typically use the normal quantiles (see FIGURE 3.3).

"""FIGURE 3.3. The tail probabilities for three distributions, t30, t100, N(0,1).

Pr(|Z| > z) for three distributions, t(df=30), t(df=100), and standard normal.

The difference between t and the standard normal becomes negligible for N > 100.

"""

import matplotlib.pyplot as plt

import scipy

import scipy.stats

x = np.linspace(1.9, 3, 1000)

pdf_gaussian = scipy.stats.norm.pdf(x)

pdf_t30 = scipy.stats.t.pdf(x, df=30)

pdf_t100 = scipy.stats.t.pdf(x, df=100)

fig = plt.figure(figsize=(10, 5))

ax = fig.add_subplot(1, 1, 1)

ax.plot(x, pdf_gaussian, label='normal')

ax.plot(x, pdf_t30, label='t(df=30)')

ax.plot(x, pdf_t100, label='t(df=100)')

ax.legend()

ax.plot([1.9, 3], [.01, .01], '--', color='gray')

ax.plot([1.9, 3], [.05, .05], '--', color='gray')

ax.set_xlabel('Z')

ax.set_ylabel('Tail Probabilites')

plt.show()

png

Often we need to test for the significance of groups of coefficients simultaneously. For example, to test if a categorical variable with \(k\) levels can be excluded from a model, we need to test whether the coefficients of the dummy variables used to represent the levels can all be set to zero.

Here we use the \(F\) statistics,

\[\begin{equation} F = \frac{(\text{RSS}_0-\text{RSS}_1)/(p_1-p_0)}{\text{RSS}_1/(N-p_1-1)}, \end{equation}\]

where

- \(\text{RSS}_1\) is for the bigger model with \(p_1+1\) parameters and

- \(\text{RSS}_0\) for the nested smaller model with \(p_0+1\) parameters,

- having \(p_1-p_0\) parameters constrained to be zero.

The \(F\) statistic measures the change in residual sum-of-squares per additional parameter in the bigger model, and it is normalized by an estimate of \(\sigma^2\).

Under the Gaussian assumption, and the null hypothesis that the smaller models is correct, the \(F\) statistics will have a \(F_{p_1-p_0,N-p_1-1}\) distribution. It can be shown (Exercise 3.1) that the \(t\)-statistic \(z_j = \hat\beta_j \big/ (\hat\sigma \sqrt{v_j})\) are equivalent to the \(F\) statistic for dropping the single coefficient \(\beta_j\) from the model.

For large \(N\), the quantiles of \(F_{p_1-p_0,N-p_1-1}\) approaches those of \(\chi^2_{p_1-p_0}/(p_1-p_0)\).

Confidence intervals

Similarly, we can isolate \(\beta_j\) in \(\hat\beta \sim N(\beta, \left(\mathbf{X}^T \mathbf{X}\right)^{-1}\sigma^2)\) to obtain a \(1-2\alpha\) confidence interval for \(\beta_j\):

\[\begin{equation} \left(\hat\beta_j-z^{1-\alpha}v_j^{\frac{1}{2}}\hat\sigma, \hat\beta_j+z^{1-\alpha}v_j^{\frac{1}{2}}\hat\sigma\right), \end{equation}\]

where \(z^{(1-\alpha)}\) is the \(1-\alpha\) percentile of the normal distribution:

\[\begin{align} z^{(1-0.025)} &= 1.96, \\ z^{(1-0.05)} &= 1.645, \text{etc}. \end{align}\]

Hence the standard practice of reporting \(\hat\beta \pm 2\cdot \text{se}(\hat\beta)\) amounts to an approximate 95% confidence interval.

Even if the Gaussian error assumption does not hold, this interval will be approximately corrent, with its coverage approaching \(1-2\alpha\) as the sample size \(N \rightarrow \infty\).

In a similar fashion we can obtain an approximate confidence set for the entire parameter vector \(\beta\), namely

\[\begin{equation} C_\beta = \left\{ \beta \big| (\hat\beta-\beta)^T\mathbf{X}^T\mathbf{X}(\hat\beta-\beta) \le \hat\sigma^2{\chi^2_{p+1}}^{(1-\alpha)}\right\}, \end{equation}\]

where \({\chi_l^2}^{(1-\alpha)}\) is the \(1-\alpha\) percentile of the chi-squared distribution on \(l\) degrees of freedom;

\[\begin{align} {\chi_5^2}^{(1-0.05)} &= 11.1, \\ {\chi_5^2}^{(1-0.1)} &= 9.2. \end{align}\]

This condifence set for \(\beta\) generates a corresponding confidence set for the true function \(f(x) = x^T\beta\), namely

\[\begin{equation} \left\{ x^T\beta \big| \beta \in C_\beta \right\} \end{equation}\]

(Exercise 3.2; FIGURE 5.4).

import scipy.stats

scipy.stats.norm(0, 1).pdf(0)0.3989422804014327scipy.stats.norm(0, 1).cdf(0)0.5#To find the probability that the variable has a value LESS than or equal

#let's say 1.64, you'd use CDF cumulative Density Function

scipy.stats.norm.cdf(1.64,0,1)0.9494974165258963scipy.stats.norm.cdf(2,0,1)0.9772498680518208#To find the probability that the variable has a value GREATER than or

#equal to let's say 1.64, you'd use SF Survival Function

scipy.stats.norm.sf(1.64,0,1)0.05050258347410371#To find the variate for which the probability is given, let's say the

#value which needed to provide a (1-0.05)% probability, you'd use the

#PPF Percent Point Function

scipy.stats.norm.ppf(.95,0,1)1.6448536269514722scipy.stats.norm.ppf(.975,0,1)1.959963984540054from scipy.stats import chi2

chi2.ppf(0.95, 5)11.070497693516351\(\S\) 3.2.1. Example: Prostate Cancer

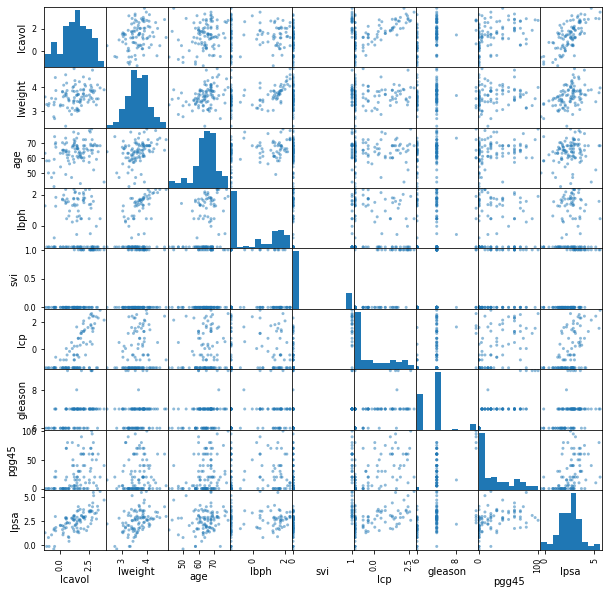

The data for this example come from a study by Stamey et al. (1989). They examined the correlation between the level of prostate-specific antigen and a number of clinical measures in men who were about to receive a radical prostatectomy.

The variables are

- log cancer volumn (\(\textsf{lcavol}\)),

- log prostate weight (\(\textsf{lweight}\)),

- \(\textsf{age}\),

- log of the amount of benign prostatic prostatic hyperplasia (\(\textsf{lbph}\)),

- seminal vesicle invasion (\(\textsf{svi}\)),

- log of capsular penetration (\(\textsf{lcp}\)),

- Gleason score (\(\textsf{gleason}\)), and

- percent of Gleason score 4 or 5 (\(\textsf{pgg45}\)).

%matplotlib inline

import math

import pandas as pd

import scipy

import scipy.stats

import matplotlib.pyplot as plt

import numpy as npdata = pd.read_csv('../../data/prostate/prostate.data', delimiter='\t',

index_col=0)

data.head(7)| lcavol | lweight | age | lbph | svi | lcp | gleason | pgg45 | lpsa | train | |

|---|---|---|---|---|---|---|---|---|---|---|

| 1 | -0.579818 | 2.769459 | 50 | -1.386294 | 0 | -1.386294 | 6 | 0 | -0.430783 | T |

| 2 | -0.994252 | 3.319626 | 58 | -1.386294 | 0 | -1.386294 | 6 | 0 | -0.162519 | T |

| 3 | -0.510826 | 2.691243 | 74 | -1.386294 | 0 | -1.386294 | 7 | 20 | -0.162519 | T |

| 4 | -1.203973 | 3.282789 | 58 | -1.386294 | 0 | -1.386294 | 6 | 0 | -0.162519 | T |

| 5 | 0.751416 | 3.432373 | 62 | -1.386294 | 0 | -1.386294 | 6 | 0 | 0.371564 | T |

| 6 | -1.049822 | 3.228826 | 50 | -1.386294 | 0 | -1.386294 | 6 | 0 | 0.765468 | T |

| 7 | 0.737164 | 3.473518 | 64 | 0.615186 | 0 | -1.386294 | 6 | 0 | 0.765468 | F |

data.describe()| lcavol | lweight | age | lbph | svi | lcp | gleason | pgg45 | lpsa | |

|---|---|---|---|---|---|---|---|---|---|

| count | 97.000000 | 97.000000 | 97.000000 | 97.000000 | 97.000000 | 97.000000 | 97.000000 | 97.000000 | 97.000000 |

| mean | 1.350010 | 3.628943 | 63.865979 | 0.100356 | 0.216495 | -0.179366 | 6.752577 | 24.381443 | 2.478387 |

| std | 1.178625 | 0.428411 | 7.445117 | 1.450807 | 0.413995 | 1.398250 | 0.722134 | 28.204035 | 1.154329 |

| min | -1.347074 | 2.374906 | 41.000000 | -1.386294 | 0.000000 | -1.386294 | 6.000000 | 0.000000 | -0.430783 |

| 25% | 0.512824 | 3.375880 | 60.000000 | -1.386294 | 0.000000 | -1.386294 | 6.000000 | 0.000000 | 1.731656 |

| 50% | 1.446919 | 3.623007 | 65.000000 | 0.300105 | 0.000000 | -0.798508 | 7.000000 | 15.000000 | 2.591516 |

| 75% | 2.127041 | 3.876396 | 68.000000 | 1.558145 | 0.000000 | 1.178655 | 7.000000 | 40.000000 | 3.056357 |

| max | 3.821004 | 4.780383 | 79.000000 | 2.326302 | 1.000000 | 2.904165 | 9.000000 | 100.000000 | 5.582932 |

The correlation matrix of the predictors given in TABLE 3.1 shows many strong correlations.

"""TABLE 3.1. Correlations of predictors in the prostate cancer data

It shows many string correlations. For example, that both `lcavol` and

`lcp` show a strong relationship with the response `lpsa`, and with each

other. We need to fit the effects jointly to untangle the relationships

between the predictors and the response."""

data.corr()| lcavol | lweight | age | lbph | svi | lcp | gleason | pgg45 | lpsa | |

|---|---|---|---|---|---|---|---|---|---|

| lcavol | 1.000000 | 0.280521 | 0.225000 | 0.027350 | 0.538845 | 0.675310 | 0.432417 | 0.433652 | 0.734460 |

| lweight | 0.280521 | 1.000000 | 0.347969 | 0.442264 | 0.155385 | 0.164537 | 0.056882 | 0.107354 | 0.433319 |

| age | 0.225000 | 0.347969 | 1.000000 | 0.350186 | 0.117658 | 0.127668 | 0.268892 | 0.276112 | 0.169593 |

| lbph | 0.027350 | 0.442264 | 0.350186 | 1.000000 | -0.085843 | -0.006999 | 0.077820 | 0.078460 | 0.179809 |

| svi | 0.538845 | 0.155385 | 0.117658 | -0.085843 | 1.000000 | 0.673111 | 0.320412 | 0.457648 | 0.566218 |

| lcp | 0.675310 | 0.164537 | 0.127668 | -0.006999 | 0.673111 | 1.000000 | 0.514830 | 0.631528 | 0.548813 |

| gleason | 0.432417 | 0.056882 | 0.268892 | 0.077820 | 0.320412 | 0.514830 | 1.000000 | 0.751905 | 0.368987 |

| pgg45 | 0.433652 | 0.107354 | 0.276112 | 0.078460 | 0.457648 | 0.631528 | 0.751905 | 1.000000 | 0.422316 |

| lpsa | 0.734460 | 0.433319 | 0.169593 | 0.179809 | 0.566218 | 0.548813 | 0.368987 | 0.422316 | 1.000000 |

FIGURE 1.1 of Chapter 1 is a scatterplot matrix showing every pairwise plot between the variables.

We see that \(\textsf{svi}\) is a binary variable, and \(\textsf{gleason}\) is an ordered categorical variable.

We see, for example, that both \(\textsf{lcavol}\) and \(\textsf{lcp}\) show a strong relationship with the response \(\textsf{lpsa}\), and with each other. We need to fit the effects jointly to untangle the relationships between the predictors and the response.

"""FIGURE 1.1. Scatterplot matrix of the prostate cancer data"""

pd.plotting.scatter_matrix(data, figsize=(10, 10))

plt.show()

png

We fit a linear model to \(\textsf{lpsa}\), after first standardizing the predictors to have unit variance. We randomly split the dataset into a training set of size 67 and a test set of size 30. We applied least squares estimation to the training set, producing the estimates, standard errors and \(Z\)-scores shown in TABLE 3.2.

The \(Z\)-scores measure the effect of dropping that variable from the model. A \(Z\)-score greater than 2 in absolute value is approximately significant at the 5% level. For our example, we have 9 parameters, and the 0.025 tail quatiles of the \(t_{67-9}\) distributions are \(\pm 2.002\)!

from scipy.stats import t

t.ppf(0.975, 67-9)2.0017174830120923"""Table 3.2. Linear model fit to the prostate cancer data.

Roughly a Z score larger than two in absolute value is significant nonzero

at the p = 0.05 level.

We fit a linear model to the log of prostate-specific antigen, `lpsa`,

after first standardizing the predictors to have unit variance. We randomly

split the dataset into a training set of size 67 and a test set of size 30.

"""

data = pd.read_csv('../../data/prostate/prostate.data', delimiter='\t',

index_col=0)

data_y = data.pop('lpsa')

mask_train = data.pop('train')

data_x_normalized = data.apply(scipy.stats.zscore)

# data_normalized.describe() # check it normalized!

data_x_normalized.describe()

data_x_train = data_x_normalized[mask_train == 'T']

data_y_train = data_y[mask_train == 'T']

data_x_test = data_x_normalized[mask_train == 'F']

data_y_test = data_y[mask_train == 'F']

size_train = sum(mask_train == 'T')

size_test = sum(mask_train == 'F')

size_predictor = len(data_x_train.columns)

mat_x = np.hstack((np.ones((size_train, 1)), data_x_train.values))

vec_y = data_y_train.values

mat_xt = np.transpose(mat_x)

mat_xx_inv = scipy.linalg.inv(mat_x.T @ mat_x)

ols_beta = mat_xx_inv @ mat_x.T @ vec_y

vec_y_fitted = mat_x @ ols_beta

est_sigma2 = sum((vec_y-vec_y_fitted)**2)/(size_train-size_predictor-1)

table_term = ['Intercept'] + list(data_x_train.columns)

table_coeff = ols_beta

table_stderr = [math.sqrt(mat_xx_inv[j, j]*est_sigma2)

for j in range(size_predictor+1)]

print('{0:>15} {1:>15} {2:>15} {3:>15}'.format('Term', 'Coefficient',

'Std. Error', 'Z Score'))

print('-'*64)

for term, coeff, stderr in zip(table_term, table_coeff, table_stderr):

print('{0:>15} {1:>15f} {2:>15f} {3:>15f}'.format(term, coeff,

stderr, coeff/stderr)) Term Coefficient Std. Error Z Score

----------------------------------------------------------------

Intercept 2.464933 0.089315 27.598203

lcavol 0.676016 0.125975 5.366290

lweight 0.261694 0.095134 2.750789

age -0.140734 0.100819 -1.395909

lbph 0.209061 0.101691 2.055846

svi 0.303623 0.122962 2.469255

lcp -0.287002 0.153731 -1.866913

gleason -0.021195 0.144497 -0.146681

pgg45 0.265576 0.152820 1.737840# RSS_1:

sum((vec_y-vec_y_fitted)**2)29.42638445990841The predictor \(\textsf{lcavol}\) shows the strongest effect, with \(\textsf{lweight}\) and \(\textsf{svi}\) also strong.

Notice that \(\textsf{lcp}\) is not significant, once \(\textsf{lcavol}\) is in the model (when used in a model without \(\textsf{lcavol}\), \(\textsf{lcp}\) is strongly significant).

We can also test for the exclusion of a number of terms at once, using the \(F\)-statistic. For example, we consider dropping all the non-significant terms in TABLE 3.2, namely \(\textsf{age}\), \(\textsf{gleason}\), and \(\textsf{pgg45}\). We get

\[\begin{equation} F = \frac{(\text{RSS}_0-\text{RSS}_1)/(p_1-p_0)}{\text{RSS}_1/(N-p_1-1)}\\ =\frac{(32.81 - 29.43)/(9-5)}{29.43/(67-9)} = 1.67, \end{equation}\]

which has a \(p\)-value of

\[\begin{equation} \text{Pr}(F_{4,58} \gt 1.67) = 0.17, \end{equation}\]

and hence is not significant.

"""F test for the exclusion of a number of terms at once

For example, we cansider dropping all the non-significant terms, namely

age, lcp, gleason, and pgg45."""

print("Null hypothesis: beta[3]=beta[6]=beta[7]=beta[8]=0")

data_x_train_alt = data_x_train.drop(['age', 'lcp', 'gleason', 'pgg45'],

axis=1)

size_predictor_alt = len(data_x_train_alt.columns)

mat_x_alt = np.hstack((np.ones((size_train, 1)),

data_x_train_alt.values))

ols_beta_alt = scipy.linalg.solve(mat_x_alt.T @ mat_x_alt,

mat_x_alt.T @ vec_y)

vec_y_fitted_alt = mat_x_alt @ ols_beta_alt

rss0 = sum((vec_y-vec_y_fitted_alt)**2)

rss1 = sum((vec_y-vec_y_fitted)**2)

F_stat = (rss0-rss1)/(size_predictor-size_predictor_alt)*(size_train-size_predictor-1)/rss1

print('F = {}'.format(F_stat))

print('Pr(F({dfn},{dfd}) > {fstat:>.2f}) = {prob:.2f}'.format(

dfn=size_predictor-size_predictor_alt,

dfd=size_train-size_predictor-1,

fstat=F_stat,

prob=1-scipy.stats.f.cdf(F_stat,

dfn=size_predictor-size_predictor_alt,

dfd=size_train-size_predictor-1),

))Null hypothesis: beta[3]=beta[6]=beta[7]=beta[8]=0

F = 1.6697548846375123

Pr(F(4,58) > 1.67) = 0.17The mean prediction error on the test data is 0.521. In contrast, prediction using the mean training value of \(\textsf{lpsa}\) has a test error of 1.057, which is called the “base error rate.”

"""Prediction error on the test data

We can see that the linear model reduces the base error rate by about 50%

"""

mean_y_train = sum(vec_y)/size_train

mat_x_test = np.hstack((np.ones((size_test, 1)),

data_x_test.values))

vec_y_test = data_y_test.values

vec_y_test_fitted = mat_x_test @ ols_beta

err_base = sum((vec_y_test-mean_y_train)**2)/size_test

err_ols = sum((vec_y_test-vec_y_test_fitted)**2)/size_test

print('Base error rate = {}'.format(err_base))

print('OLS error rate = {}'.format(err_ols))Base error rate = 1.0567332280603818

OLS error rate = 0.5212740055076004Hence the linear model reduces the base error rate by about 50%.

We will return to this example later to compare various selection and shrinkage methods.

\(\S\) 3.2.2. The Gauss-Markov Theorem

One of the most famous results in statistics asserts that

the least squares estimates of the parameter \(\beta\) have the smallest variance among all linear unbiased estimates.

We will make this precise here, and also make clear that

the restriction to unbiased estimates is not necessarily a wise one.

This observation will lead us to consider biased estimates such as ridge regression later in the chapter.

The statement of the theorem

We focus on estimation of any linear combination of the parameters \(\theta=a^T\beta\). The least squares estimate of \(a^T\beta\) is

\[\begin{equation} \hat\theta = a^T\hat\beta = a^T\left(\mathbf{X}^T\mathbf{X}\right)^{-1}\mathbf{X}^T\mathbf{y}. \end{equation}\]

Considering \(\mathbf{X}\) to be fixed and the linear model is correct, \(a^T\beta\) is unbiased since

\[\begin{align} \text{E}(a^T\hat\beta) &= \text{E}\left(a^T(\mathbf{X}^T\mathbf{X})^{-1}\mathbf{X}^T\mathbf{y}\right) \\ &= a^T(\mathbf{X}^T\mathbf{X})^{-1}\mathbf{X}^T\mathbf{X}\beta \\ &= a^T\beta \end{align}\]

The Gauss-Markov Theorem states that if we have any other linear estimator \(\tilde\theta = \mathbf{c}^T\mathbf{y}\) that is unbiased for \(a^T\beta\), that is, \(\text{E}(\mathbf{c}^T\mathbf{y})=a^T\beta\), then

\[\begin{equation} \text{Var}(a^T\hat\beta) \le \text{Var}(\mathbf{c}^T\mathbf{y}). \end{equation}\]

The proof (Exercise 3.3) uses the triangle inequality.

For simplicity we have stated the result in terms of estimation of a single parameter \(a^T \beta\), but with a few more definitions one can state it in terms of the entire parameter vector \(\beta\) (Exercise 3.3).

Implications of the Gauss-Markov theorem

Consider the mean squared error of an estimator \(\tilde\theta\) of \(\theta\):

\[\begin{align} \text{MSE}(\tilde\theta) &= \text{E}\left(\tilde\theta-\theta\right)^2 \\ &= \text{E}\left(\tilde\theta-\text{E}(\tilde\theta)+\text{E}(\tilde\theta)-\theta\right)^2\\ &= \text{E}(\tilde\theta-\text{E}(\tilde\theta))^2+\text{E}(\text{E}(\tilde\theta)-\theta)^2+2\text{E}(\tilde\theta-\text{E}(\tilde\theta))(\text{E}(\tilde\theta)-\theta)\\ &= \text{Var}\left(\tilde\theta\right) + \left[\text{E}\left(\tilde\theta\right)-\theta\right]^2 \\ &= \text{Var} + \text{Bias}^2 \end{align}\]

The Gauss-Markov theorem implies that the least squares estimator has the smallest MSE of all linear estimators with no bias. However there may well exist a biased estimator with smaller MSE. Such an estimator would trade a little bias for a larger reduction in variance.

Biased estimates are commonly used. Any method that shrinks or sets to zero some of the least squares coefficients may result in a biased estimate. We discuss many examples, including variable subset selection and ridge regression, later in this chapter.

From a more pragmatic point of view, most models are distortions of the truth, and hence are biased; picking the right model amounts to creating the right balance between bias and variance. We go into these issues in more detail in Chapter 7.

Relation between prediction accuracy and MSE

MSE is intimately related to prediction accuracy, as discussed in Chapter 2.

Consider the prediction of the new response at input \(x_0\),

\[\begin{equation} Y_0 = f(x_0) + \epsilon. \end{equation}\]

Then the expected prediction error of an estimate \(\tilde{f}(x_0)=x_0^T\tilde\beta\) is

\[\begin{align} \text{E}(Y_0 - \tilde{f}(x_0))^2 &= \text{E}\left(Y_0 -f(x_0)+f(x_0) - \tilde{f}(x_0)\right)^2\\ &= \sigma^2 + \text{E}\left(x_o^T\tilde\beta - f(x_0)\right)^2 \\ &= \sigma^2 + \text{MSE}\left(\tilde{f}(x_0)\right). \end{align}\]

Therefore, expected prediction error and MSE differ only by the constant \(\sigma^2\), representing the variance of the new observation \(y_0\).

The first error component \(\sigma^2\) is unrelated to what model is used to describe our data. It cannot be reduced for it exists in the true data generation process. The second source of error corresponding to the term \(\text{MSE}(\tilde{f}(x_0))\) represents the error in the model and is under control of the statistician. Thus, based on the above expression, if we minimize the \(\text{MSE}\) of our estimator \(\tilde{f}(x_0)\) we are effectively minimizing the expected (quadratic) prediction error which is our ultimate goal anyway.

We will explore methods that minimize the mean square error. The mean square error can be broken down into two terms: a model variance term and a model bias squared term. We will explore methods that seek to keep the total contribution of these two terms as small as possible by explicitly considering the trade-offs that come from methods that might increase one of the terms while decreasing the other.

\(\S\) 3.2.3. Multiple Regression from Simple Univariate Regression

If there are \(n\) points \((x_1,y_1),(x_2,y_3),...,(x_n,y_n)\), the straight line \(y=a+bx\) minimizing the sum of the squares of the vertical distances from the data points to the line \(L=\sum_{i=1}^{n}(y_i-a-bx_i)^2\), then we take partial derivatives of L with respect to \(a\) and \(b\) and let them equal to \(0\) to get least squares coefficients \(a\) and \(b\):

\[\frac{\partial L}{\partial b}=-2\sum_{i=1}^{n}(y_i-a-bx_i)x_i=0\], then \[\sum_{i=1}^{n}x_iy_i=a\sum_{i=1}^{n}x_i+b\sum_{i=1}^{n}x_i^2\]

And, \[\frac{\partial L}{\partial a}=-2\sum_{i=1}^{n}(y_i-a-bx_i)=0\], then

\[\sum_{i=1}^{n}y_i=na+b\sum_{i=1}^{n}x_i\]

these 2 equations are:

\[

\begin{bmatrix}

\displaystyle\sum_{i=1}^{n}x_i & \displaystyle\sum_{i=1}^{n}x_i^2\\

n & \displaystyle\sum_{i=1}^{n}x_i\\

\end{bmatrix}

\begin{bmatrix}

a\\

b

\end{bmatrix}

=

\begin{bmatrix}

\displaystyle\sum_{i=1}^{n}x_iy_i\\

\displaystyle\sum_{i=1}^{n}y_i

\end{bmatrix}

\]

then, using Cramer’s rule

\[\begin{align}

b&=\frac{\begin{vmatrix}

\displaystyle\sum_{i=1}^{n}x_i & \displaystyle\sum_{i=1}^{n}x_iy_i\\

n & \displaystyle\sum_{i=1}^{n}y_i\\

\end{vmatrix}}{\begin{vmatrix}

\displaystyle\sum_{i=1}^{n}x_i & \displaystyle\sum_{i=1}^{n}x_i^2\\

n & \displaystyle\sum_{i=1}^{n}x_i\\

\end{vmatrix}}\\

&=\frac{(\displaystyle\sum_{i=1}^{n}x_i)(\displaystyle\sum_{i=1}^{n}y_i)-n(\displaystyle\sum_{i=1}^{n}x_iy_i)}{(\displaystyle\sum_{i=1}^{n}x_i)^2-n\displaystyle\sum_{i=1}^{n}x_i^2}\\

&=\frac{n(\displaystyle\sum_{i=1}^{n}x_iy_i)-(\displaystyle\sum_{i=1}^{n}x_i)(\displaystyle\sum_{i=1}^{n}y_i)}{n\displaystyle\sum_{i=1}^{n}x_i^2-(\displaystyle\sum_{i=1}^{n}x_i)^2}\\

&=\frac{(\displaystyle\sum_{i=1}^{n}x_iy_i)-\frac{1}{n}(\displaystyle\sum_{i=1}^{n}x_i)(\displaystyle\sum_{i=1}^{n}y_i)}{\displaystyle\sum_{i=1}^{n}x_i^2-\frac{1}{n}(\displaystyle\sum_{i=1}^{n}x_i)^2}\\

&=\frac{\langle\mathbf x, \mathbf y\rangle-\frac{1}{n}\langle\mathbf x, \mathbf 1\rangle\langle\mathbf y, \mathbf 1\rangle}{\langle\mathbf x, \mathbf x\rangle-\frac{1}{n}\langle\mathbf x, \mathbf 1\rangle\langle\mathbf x, \mathbf 1\rangle}\\

&=\frac{\langle\mathbf x, \mathbf y\rangle-\bar{x}\langle\mathbf y, \mathbf 1\rangle}{\langle\mathbf x, \mathbf x\rangle-\bar{x}\langle\mathbf x, \mathbf 1\rangle}\\

&=\frac{\langle\mathbf x-\bar{x}\mathbf 1, \mathbf y\rangle}{\langle\mathbf x-\bar{x}\mathbf 1, \mathbf x\rangle}\\

&=\frac{\langle\mathbf x-\bar{x}\mathbf 1, \mathbf y\rangle}{\langle\mathbf x-\bar{x}\mathbf 1, \mathbf x-\bar{x}\mathbf 1\rangle}

\end{align}\]

and \(a=\frac{\displaystyle\sum_{i=1}^{n}y_i-b\sum_{i=1}^{n}x_i}{n}=\bar y-b\bar x\), which shows point \((\bar x, \bar y)\) is in the line.

Thus we see that obtaining an estimate of the second coefficient \(b\) is really two one-dimensional regressions followed in succession. We first regress \(\mathbf x\) onto \(\mathbf 1\) and obtain the residual \(\mathbf z = \mathbf x − \bar{x}\mathbf 1\). We next regress \(\mathbf y\) onto this residual \(\mathbf z\). The direct extension of these ideas results in Algorithm 3.1: Regression by Successive Orthogonalization or Gram-Schmidt for multiple regression.

We call \(\hat y_i=a+bx_i\) the predicted value of \(y_i\), and \(y_i-\hat y_i\) the \(i^{th}\) residual.

The linear model with \(p \gt 1\) inputs is called multiple linear regression model.

The least squares estimates

\[\begin{equation} \hat\beta = \left(\mathbf{X}^T \mathbf{X}\right)^{-1} \mathbf{X}^T \mathbf{y} \end{equation}\]

for this model are best understood in terms of the estimates for the univariate (\(p=1\)) linear model, as we indicate in this section.

Suppose a univariate model with no intercept, i.e.,

\[\begin{equation} Y=X\beta+\epsilon. \end{equation}\]

The least squares estimate and residuals are

\[\begin{align} \hat\beta &= \frac{\sum_1^N x_i y_i}{\sum_1^N x_i^2} = \frac{\langle\mathbf{x},\mathbf{y}\rangle}{\langle\mathbf{x},\mathbf{x}\rangle}, \\ r_i &= y_i - x_i\hat\beta, \\ \mathbf{r} &= \mathbf{y} - \mathbf{x}\hat\beta, \end{align}\]

where

- \(\mathbf{y}=(y_1,\cdots,y_N)^T\),

- \(\mathbf{x}=(x_1,\cdots,x_N)^T\) and

- \(\langle\cdot,\cdot\rangle\) denotes the dot product notation.

As we will see,

this simple univariate regression provides the building block for multiple linear regression.

Building blocks for multiple linear regression

Suppose next that the columns of the data matrix \(\mathbf{X} = \left[\mathbf{x}_1,\cdots,\mathbf{x}_p\right]\) are orthogonal, i.e.,

\[\begin{equation} \langle \mathbf{x}_j,\mathbf{x}_k\rangle = 0\text{ for all }j\neq k. \end{equation}\]

Then it is easy to check that the multiple least squares estimates are equal to the univariate estimates:

\[\begin{equation} \hat\beta_j = \frac{\langle\mathbf{x}_j,\mathbf{y}\rangle}{\langle\mathbf{x}_j,\mathbf{x}_j\rangle}, \forall j \end{equation}\]

In other words, when the inputs are orthogonal, they have no effect on each other’s parameter estimates in the model.

Orthogonal inputs occur most often with balanced, designed experiments (where orthogonality is enforced), but almost never with observational data. Hence we will have to orthogonalize them in order to carry this idea further.

Orthogonalization

Suppose next that we have an intercept and a single input \(\mathbf{x}\). Then the least squares coefficient of \(\mathbf{x}\) has the form

\[\begin{equation} \hat\beta_1 = \frac{\langle\mathbf{x}-\bar{x}\mathbf{1},\mathbf{y}\rangle}{\langle\mathbf{x}-\bar{x}\mathbf{1},\mathbf{x}-\bar{x}\mathbf{1}\rangle}, \end{equation}\]

where \(\bar{x} = \sum x_i /N\) and \(\mathbf{1} = \mathbf{x}_0\). And also note that

\[\begin{equation} \bar{x}\mathbf{1} = \frac{\langle\mathbf{1},\mathbf{x}\rangle}{\langle\mathbf{1},\mathbf{1}\rangle}\mathbf{1}, \end{equation}\]

which means the fitted value in the case we regress \(\mathbf{x}\) on \(\mathbf{x}_0=\mathbf{1}\). Therefore we can view \(\hat\beta_1\) as the result of two application of the simple regression with the following steps: 1. Regress \(\mathbf{x}\) on \(\mathbf{1}\) to produce the residual \(\mathbf{z}=\mathbf{x}-\bar{x}\mathbf{1}\); 2. regress \(\mathbf{y}\) on the residual \(\mathbf{z}\) to give the coefficient \(\hat\beta_1\).

In this procedure, “regreess \(\mathbf{b}\) on \(\mathbf{a}\)” means a simple univariate regression of \(\mathbf{b}\) on \(\mathbf{a}\) with no intercept, producing coefficient \(\hat\gamma=\langle\mathbf{a},\mathbf{b}\rangle/\langle\mathbf{a},\mathbf{a}\rangle\) and residual vector \(\mathbf{b}-\hat\gamma\mathbf{a}\). We say that \(\mathbf{b}\) is adjusted for \(\mathbf{a}\), or is “orthogonalized” w.r.t. \(\mathbf{a}\).

In other words, 1. orthogonalize \(\mathbf{x}\) w.r.t. \(\mathbf{x}_0=\mathbf{1}\); 2. just a simple univariate regression, using the orthogonal predictors \(\mathbf{1}\) and \(\mathbf{z}\).

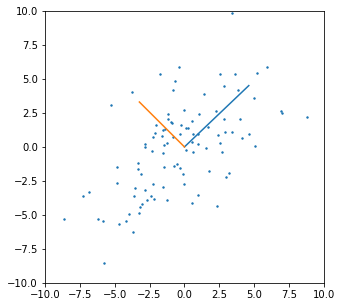

FIGURE 3.4 shows this process for two general inputs \(\mathbf{x}_1\) and \(\mathbf{x}_2\). The orthogonalization does not change the subspace spanned by \(\mathbf{x}_0\) and \(\mathbf{x}_1\), it simply produces an orthogonal basis for representing it.

This recipe gerenalizes to the case of \(p\) inputs, as shown in ALGORITHM 3.1.

ALGORITHM 3.1. Regression by successive orthogonalization (Gram-Schmidt orthogonilization)

- Initialize \(\mathbf{z}_0=\mathbf{x}_0=\mathbf{1}\).

- For \(j = 1, 2, \cdots, p\),

regress \(\mathbf{x}_j\) on \(\mathbf{z}_0,\mathbf{z}_1,\cdots,\mathbf{z}_{j-1}\) to produce

- coefficients \(\hat\gamma_{lj} = \langle\mathbf{z}_l,\mathbf{x}_j\rangle/\langle\mathbf{z}_l,\mathbf{z}_l\rangle\), for \(l=0,\cdots, j-1\) and

- residual vector \(\mathbf{z}_j=\mathbf{x}_j - \sum_{k=0}^{j-1}\hat\gamma_{kj}\mathbf{z}_k\).

- Regress \(\mathbf{y}\) on the residual \(\mathbf{z}_p\) to give the estimate \(\hat\beta_p\),

\[\begin{equation} \hat\beta_p = \frac{\langle\mathbf{z}_p,\mathbf{y}\rangle}{\langle\mathbf{z}_p,\mathbf{z}_p\rangle}. \end{equation}\]

Note that the inputs \(\mathbf{z}_0,\cdots,\mathbf{z}_{j-1}\) in step 2 are orthogonal, hence the simple regression coefficients computed there are in fact also the multiple regression coefficients.

The result of this algorithm is

\[\begin{equation} \hat\beta_p = \frac{\langle \mathbf{z}_p, \mathbf{y} \rangle}{\langle \mathbf{z}_p, \mathbf{z}_p \rangle}. \end{equation}\]

Re-arranging the residual in step 2, we can see that each of the \(\mathbf{x}_j\) is

\[\begin{equation} \mathbf{x}_j = \mathbf{z}_j + \sum_{k=0}^{j-1} \hat\gamma_{kj}\mathbf{z}_k, \end{equation}\]

which is a linear combination of the \(\mathbf{z}_k\), \(k \le j\). Since the \(\mathbf{z}_j\) are all orthogonal, they form a basis for the \(\text{col}(\mathbf{X})\), and hence the least squares projection onto this subspace is \(\hat{\mathbf{y}}\).

Since \(\mathbf{z}_p\) alone involves \(\mathbf{x}_p\) (with coefficient 1), we see that the coefficient \(\hat\beta_p\) is indeed the multiple regression coefficient of \(\mathbf{y}\) on \(\mathbf{x}_p\). This key result exposes the effect of correlated inputs in mutiple regression.

Note also that by rearranging the \(j\)th multiple regression coefficient is the univariate regression coefficient of \(\mathbf{y}\) on \(\mathbf{x}_{j\cdot 012\cdots(j-1)(j+1)\cdots p}\), the residual after regressing \(\mathbf{x}_j\) on \(\mathbf{x}_0,\mathbf{x}_1,\cdots,\mathbf{x}_{j-1},\mathbf{x}_{j+1},\cdots,\mathbf{x}_p\):

The multiple regression coefficient \(\hat\beta_j\) represents the additional contribution of \(\mathbf{x}_j\) on \(\mathbf{y}\), after \(\mathbf{x}_j\) has been adjusted for \(\mathbf{x}_0,\mathbf{x}_1,\cdots,\mathbf{x}_{j-1},\mathbf{x}_{j+1},\cdots,\mathbf{x}_p\).

Gram-Schmidt procedure and QR decomposition

Algorithm 3.1 is known as the Gram-Schmidt procedure for multiple regression, and is also a useful numerical strategy for computing the estimates. We can obtain from it not just \(\hat\beta_p\), but also the entire multiple least squares fit (Exercise 3.4).

We can represent step 2 of Algorithm 3.1 in matrix form:

\[\begin{equation} \mathbf{X} = \mathbf{Z\Gamma}, \end{equation}\]

where

- \(\mathbf{Z}\) has as columns the \(\mathbf{z}_j\) (in order)

- \(\mathbf{\Gamma}\) is the upper triangular matrix with entries \(\hat\gamma_{kj}\).

Introducing the diagonal matrix \(\mathbf{D}\) with \(D_{jj}=\|\mathbf{z}_j\|\), we get

\[\begin{align} \mathbf{X} &= \mathbf{ZD}^{-1}\mathbf{D\Gamma} \\ &= \mathbf{QR}, \end{align}\]

the so-called QR decomposition of \(\mathbf{X}\). Here

- \(\mathbf{Q}\) is an \(N\times(p+1)\) orthogonal matrix s.t. \(\mathbf{Q}^T\mathbf{Q}=\mathbf{I}\),

- \(\mathbf{R}\) is a \((p+1)\times(p+1)\) upper triangular matrix.

The QR decomposition represents a convenient orthogonal basis for the \(\text{col}(\mathbf{X})\). It is easy to see, for example, that the ordinary least squares (OLS) solution is given by

\[\begin{align} \hat\beta &= \left(\mathbf{X}^T\mathbf{X}\right)^{-1}\mathbf{X}^T\mathbf{y}\\ &=\left(\mathbf{R}^T\mathbf{Q}^T\mathbf{Q}\mathbf{R}\right)^{-1}\mathbf{R}^T\mathbf{Q}^T\mathbf{y}\\ &=\left(\mathbf{R}^T\mathbf{R}\right)^{-1}\mathbf{R}^T\mathbf{Q}^T\mathbf{y}\\ &=\mathbf{R}^{-1}\mathbf{R}^{-T}\mathbf{R}^T\mathbf{Q}^T\mathbf{y}\\ &=\mathbf{R}^{-1}\mathbf{Q}^T\mathbf{y}, \\ \hat{\mathbf{y}} &= \mathbf{X}\hat\beta =\mathbf{Q}\mathbf{R}\mathbf{R}^{-1}\mathbf{Q}^T\mathbf{y}=\mathbf{QQ}^T\mathbf{y}. \end{align}\]

This last equation expresses the fact in ordinary least squares we obtain our fitted vector \(\mathbf y\) by first computing the coefficients of \(\mathbf y\) in terms of the basis spanned by the columns of \(\mathbf Q\) (these coefficients are given by the vector \(\mathbf Q^T\mathbf y\)). We next construct \(\hat{\mathbf y}\) using these numbers as the coefficients of the column vectors in \(\mathbf Q\) (this is the product \(\mathbf{QQ}^T\mathbf y\)).

Note that the triangular matrix \(\mathbf{R}\) makes it easy to solve (Exercise 3.4).

\(\S\) 3.2.4. Multiple Outputs

Suppose we have

- multiple outputs \(Y_1,Y_2,\cdots,Y_K\)

- inputs \(X_0,X_1,\cdots,X_p\)

- a linear model for each output

\[\begin{align} Y_k &= \beta_{0k} + \sum_{j=1}^p X_j\beta_{jk} + \epsilon_k \\ &= f_k(X) + \epsilon_k \end{align}\]

the coefficients for the \(k\)th outcome are just the least squares estimates in the regression of \(y_k\) on \(x_0,x_1,\cdots,x_p\) . Multiple outputs do not affect one another’s least squares estimates.

With \(N\) training cases we can write the model in matrix notation

\[\begin{equation} \mathbf{Y}=\mathbf{XB}+\mathbf{E}, \end{equation}\]

where

- \(\mathbf{Y}\) is \(N\times K\) with \(ik\) entry \(y_{ik}\),

- \(\mathbf{X}\) is \(N\times(p+1)\) input matrix,

- \(\mathbf{B}\) is \((p+1)\times K\) parameter matrix,

- \(\mathbf{E}\) is \(N\times K\) error matrix.

A straightforward generalization of the univariate loss function is

\[\begin{align} \text{RSS}(\mathbf{B}) &= \sum_{k=1}^K \sum_{i=1}^N \left( y_{ik} - f_k(x_i) \right)^2 \\ &= \text{trace}\left( (\mathbf{Y}-\mathbf{XB})^T(\mathbf{Y}-\mathbf{XB}) \right) \end{align}\]

The least squares estimates have exactly the same form as before

\[\begin{equation} \hat{\mathbf{B}} = \left(\mathbf{X}^T\mathbf{X}\right)^{-1}\mathbf{X}^T\mathbf{Y}. \end{equation}\]

\(\S\) 3.3. Subset Selection

Two reasons why we are often not satisfied with the least squares estimates:

1. Prediction accuracy.

The least squares estimate often have low bias but large variance. Prediction accuracy can sometimes be improved by shrinking or setting some coefficients to zero. By doing so we sacrifice a little bit of bias to reduce the variance of the predicted values, and hence may improve the overall prediction accuracy.

2. Interpretation.

With a large number of predictiors, we often would like to determine a smaller subset that exhibit the strongest effects. In order to get the “big picture,” we are willing to sacrifice some of the small details.

In this section we describe a number of approaches to variable subset selection with linear regression. In later sections we discuss shrinkage and hybrid approaches for controlling variance, as well as other dimension-reduction strategies. These all fall under the general heading model selection. Model selection is not restricted to linear models; Chapter 7 covers this topic in some detail.

With subset selection we retain only a subset of the variables, and eliminate the rest from the model. Least squares regression is used to estimate the coefficients of the inputs that are retained. There are a number of different strategies for choosing the subset.

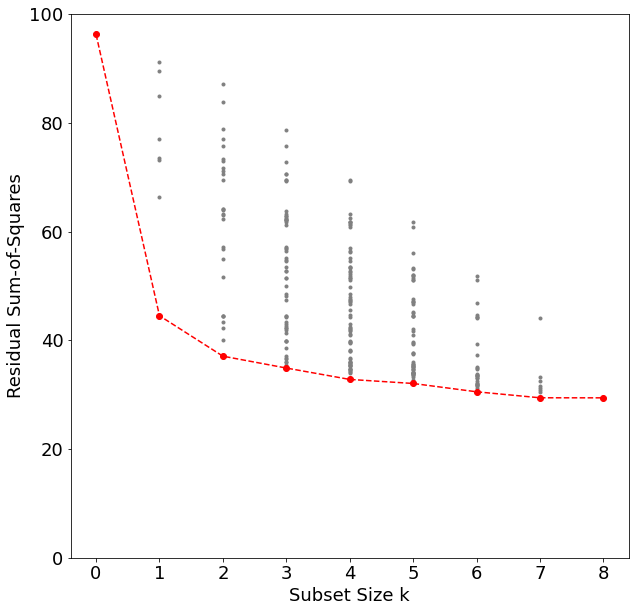

\(\S\) 3.3.1. Best-Subset Selection

Best subset regression finds for each \(k\in\lbrace0,1,2,\cdots,p\rbrace\) the subset of size \(k\) that gives the smallest residual sum of squares. An efficient algorithm – the leaps and bounds procedure (Furnival and Wilson, 1974) – makes this feasible for \(p\) as large as 30 or 40.

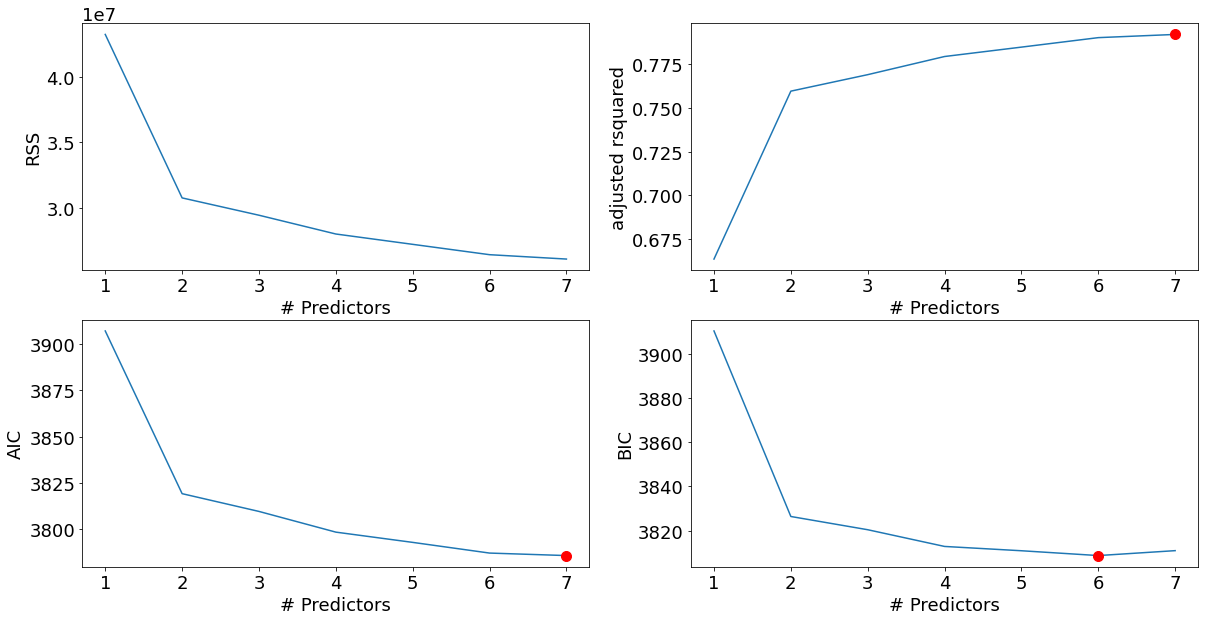

"""FIGURE 3.5. All possible subset models for the prostate cancer example

At each subset size is shown the residual sum-of-squares for each model of

that size."""

import math

import collections

import itertools

import functools

import operator as op

import pandas as pd

import scipy

import scipy.stats

import matplotlib.pyplot as plt

import numpy as npdata = pd.read_csv('../../data/prostate/prostate.data', delimiter='\t',

index_col=0)

data_y = data['lpsa']

data_x_normalized = data.drop(['train', 'lpsa'], axis=1)\

.apply(scipy.stats.zscore)

# data_normalized.describe() # check it normalized!

data_x_normalized.describe()| lcavol | lweight | age | lbph | svi | lcp | gleason | pgg45 | |

|---|---|---|---|---|---|---|---|---|

| count | 9.700000e+01 | 9.700000e+01 | 9.700000e+01 | 9.700000e+01 | 9.700000e+01 | 9.700000e+01 | 9.700000e+01 | 9.700000e+01 |

| mean | 4.578239e-17 | 6.844468e-16 | 4.131861e-16 | -2.432190e-17 | -3.662591e-17 | 3.662591e-17 | -2.174664e-17 | 5.636957e-17 |

| std | 1.005195e+00 | 1.005195e+00 | 1.005195e+00 | 1.005195e+00 | 1.005195e+00 | 1.005195e+00 | 1.005195e+00 | 1.005195e+00 |

| min | -2.300218e+00 | -2.942386e+00 | -3.087227e+00 | -1.030029e+00 | -5.256575e-01 | -8.676552e-01 | -1.047571e+00 | -8.689573e-01 |

| 25% | -7.139973e-01 | -5.937689e-01 | -5.219612e-01 | -1.030029e+00 | -5.256575e-01 | -8.676552e-01 | -1.047571e+00 | -8.689573e-01 |

| 50% | 8.264956e-02 | -1.392703e-02 | 1.531086e-01 | 1.383966e-01 | -5.256575e-01 | -4.450983e-01 | 3.444069e-01 | -3.343557e-01 |

| 75% | 6.626939e-01 | 5.806076e-01 | 5.581506e-01 | 1.010033e+00 | -5.256575e-01 | 9.762744e-01 | 3.444069e-01 | 5.566470e-01 |

| max | 2.107397e+00 | 2.701661e+00 | 2.043304e+00 | 1.542252e+00 | 1.902379e+00 | 2.216735e+00 | 3.128363e+00 | 2.695054e+00 |

data_x_normalized.columnsIndex(['lcavol', 'lweight', 'age', 'lbph', 'svi', 'lcp', 'gleason', 'pgg45'], dtype='object')data_x_train = data_x_normalized[data['train'] == 'T']

data_y_train = data_y[data['train'] == 'T']

data_x_test = data_x_normalized[data['train'] == 'F']

data_y_test = data_y[data['train'] == 'F']

vec_y = data_y_train.values

vec_y_test = data_y_test.values

size_train = sum(data['train'] == 'T')

size_test = sum(data['train'] == 'F')

size_predictor = len(data_x_train.columns)Use function ‘ols_with_column_names’ to concatenate vector \(\mathbf 1\) and columns of ‘data_x_train’ to get \(\mathbf X\), then solve \[\hat{\mathbf{\beta}} = \left(\mathbf{X}^T\mathbf{X}\right)^{-1}\mathbf{X}^T\mathbf{y}.\] to get \(\hat{\mathbf{\beta}}\), then calculate ‘vec_y_fitted’ \[\text{vec_y_fitted}=\mathbf{X}\hat{\mathbf{\beta}}\]

def ols_with_column_names(df_x:pd.DataFrame, vec_y:np.ndarray,

*column_names) ->float:

if column_names:

df_x_subset = df_x[list(column_names)]

mat_x = np.hstack((np.ones((len(df_x), 1)),

df_x_subset.values))

else:

mat_x = np.ones((len(df_x), 1))

ols_beta = scipy.linalg.solve(mat_x.T @ mat_x, mat_x.T @ vec_y)

vec_y_fitted = mat_x @ ols_beta

return ols_beta, vec_y_fitted# The reduce(fun,seq) function is used to apply a particular function passed

# in its argument to all of the list elements mentioned in the sequence passed along

print (functools.reduce(lambda a,b : a*b, range(8, 2, -1)))20160# calculate the factorial a!/b!

print(functools.reduce(op.mul, range(8, 2, -1)))20160Use function ‘ncr’ to calculate the Combinations: \[{n \choose r}=\frac{n!}{(n-r)!r!}\]

Use ‘ols_with_subset_size’ function to calculate \(\hat{\mathbf{\beta}}\) and \(\text{RSS}\) of every combinations of the columns of every possible size.

Use ‘itertools.combinations(p,r)’ to iter r-length tuples, in sorted order, no repeated elements.

def ncr(n:int, r:int) ->int:

"""Compute combination number nCr"""

r = min(r, n-r)

if r == 0:

return 1

numer = functools.reduce(op.mul, range(n, n-r, -1))

denom = functools.reduce(op.mul, range(1, r+1))

return numer//denom

def ols_with_subset_size(df_x:pd.DataFrame, vec_y:np.ndarray,

k:int) ->np.ndarray:

if k == 0:

ols_beta, vec_y_fitted = ols_with_column_names(df_x, vec_y)

return [{

'column_names': 'constant',

'beta': ols_beta,

'rss': ((vec_y-vec_y_fitted)**2).sum(),

}]

column_combi = itertools.combinations(data_x_normalized.columns, k)

result = []

for column_names in column_combi:

ols_beta, vec_y_fitted = ols_with_column_names(df_x, vec_y,

*column_names)

result.append({

'column_names': column_names,

'beta': ols_beta,

'rss': ((vec_y-vec_y_fitted)**2).sum(),

})

return result# The number_of_combinations of different sizes

for i in range(1,size_predictor):

print(ncr(size_predictor, i))8

28

56

70

56

28

8np.ones(28)*2array([2., 2., 2., 2., 2., 2., 2., 2., 2., 2., 2., 2., 2., 2., 2., 2., 2.,

2., 2., 2., 2., 2., 2., 2., 2., 2., 2., 2.])fig35 = plt.figure(figsize=(10, 10))

ax = fig35.add_subplot(1, 1, 1)

rss_min = []

for k in range(size_predictor+1):

number_of_combinations = ncr(size_predictor, k)

ols_list = ols_with_subset_size(data_x_train, vec_y, k)

ax.plot(np.ones(number_of_combinations)*k, [d['rss'] for d in ols_list],

'o', color='gray', markersize=3)

ols_best = min(ols_list, key=op.itemgetter('rss'))

rss_min.append(ols_best['rss'])

ax.plot(range(size_predictor+1), rss_min, 'o--', color='r', markersize=6)

ax.set_xlabel('Subset Size k')

ax.set_ylim(0, 100)

ax.set_ylabel('Residual Sum-of-Squares')

plt.show()

png

ols_list[{'column_names': ('lcavol',

'lweight',

'age',

'lbph',

'svi',

'lcp',

'gleason',

'pgg45'),

'beta': array([ 2.46493292, 0.67601634, 0.26169361, -0.14073374, 0.20906052,

0.30362332, -0.28700184, -0.02119493, 0.26557614]),

'rss': 29.426384459908405}]Note that the best subset of size 2, for example, need not include the variable that was in the best subset if size 1. The best-subset curve is necessarily decreasing, so cannot be used to select the subsit size \(k\). The question of how to choose \(k\) involves the tradeoff between bias and variance, along with more subjective desire for parsimony. There are a number of criteria that one may use; typically we choose the smallest model that minimizes an estimate of the expected prediction error.

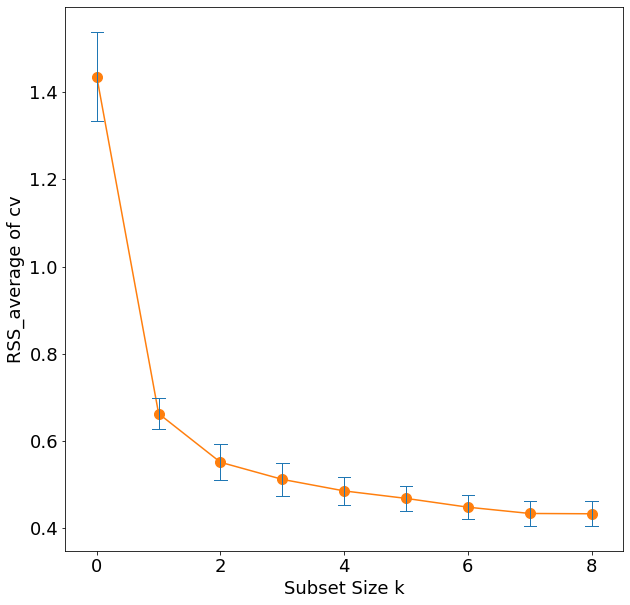

Many of the other approaches that we discuss in this chapter are similar, in that they use the training data to produce a sequence of models varying in complexity and indexed by a single parameter. In the next section we use cross-validation to estimate prediction error and select \(k\); the \(\text{AIC}\) criterion is a popular alternative.

"""Hitters dataset from ISLR"""

%matplotlib inline

import pandas as pd

import numpy as np

import itertools

import time

import statsmodels.api as sm

import matplotlib.pyplot as plt# Here we apply the best subset selection approach to the Hitters data.

# We wish to predict a baseball player’s Salary on the basis of various statistics associated with performance in the previous year.

hitters_df = pd.read_csv('../../data/Hitters.csv')

hitters_df.head()| Unnamed: 0 | AtBat | Hits | HmRun | Runs | RBI | Walks | Years | CAtBat | CHits | … | CRuns | CRBI | CWalks | League | Division | PutOuts | Assists | Errors | Salary | NewLeague | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | -Andy Allanson | 293 | 66 | 1 | 30 | 29 | 14 | 1 | 293 | 66 | … | 30 | 29 | 14 | A | E | 446 | 33 | 20 | NaN | A |

| 1 | -Alan Ashby | 315 | 81 | 7 | 24 | 38 | 39 | 14 | 3449 | 835 | … | 321 | 414 | 375 | N | W | 632 | 43 | 10 | 475.0 | N |

| 2 | -Alvin Davis | 479 | 130 | 18 | 66 | 72 | 76 | 3 | 1624 | 457 | … | 224 | 266 | 263 | A | W | 880 | 82 | 14 | 480.0 | A |

| 3 | -Andre Dawson | 496 | 141 | 20 | 65 | 78 | 37 | 11 | 5628 | 1575 | … | 828 | 838 | 354 | N | E | 200 | 11 | 3 | 500.0 | N |

| 4 | -Andres Galarraga | 321 | 87 | 10 | 39 | 42 | 30 | 2 | 396 | 101 | … | 48 | 46 | 33 | N | E | 805 | 40 | 4 | 91.5 | N |

5 rows × 21 columns

# the Salary variable is missing for some of the players

print("Number of null values:", hitters_df["Salary"].isnull().sum())Number of null values: 59# We see that Salary is missing for 59 players.

# The dropna() function removes all of the rows that have missing values in any variable:

# Print the dimensions of the original Hitters data (322 rows x 20 columns)

print("Dimensions of original data:", hitters_df.shape)

# Drop any rows the contain missing values, along with the player names

hitters_df_clean = hitters_df.dropna(axis=0).drop('Unnamed: 0', axis=1)

# Print the dimensions of the modified Hitters data (263 rows x 20 columns)

print("Dimensions of modified data:", hitters_df_clean.shape)

# One last check: should return 0

print("Number of null values:", hitters_df_clean["Salary"].isnull().sum())Dimensions of original data: (322, 21)

Dimensions of modified data: (263, 20)

Number of null values: 0# Some of our predictors are categorical, so we'll want to clean those up as well.

# We'll ask pandas to generate dummy variables for them, separate out the response variable, and stick everything back together again:

dummies = pd.get_dummies(hitters_df_clean[['League', 'Division', 'NewLeague']])

y = hitters_df_clean.Salary

# Drop the column with the independent variable (Salary), and columns for which we created dummy variables

X_ = hitters_df_clean.drop(['Salary', 'League', 'Division', 'NewLeague'], axis=1).astype('float64')

# Define the feature set X.

X = pd.concat([X_, dummies[['League_N', 'Division_W', 'NewLeague_N']]], axis=1)X.head()| AtBat | Hits | HmRun | Runs | RBI | Walks | Years | CAtBat | CHits | CHmRun | CRuns | CRBI | CWalks | PutOuts | Assists | Errors | League_N | Division_W | NewLeague_N | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | 315.0 | 81.0 | 7.0 | 24.0 | 38.0 | 39.0 | 14.0 | 3449.0 | 835.0 | 69.0 | 321.0 | 414.0 | 375.0 | 632.0 | 43.0 | 10.0 | 1 | 1 | 1 |

| 2 | 479.0 | 130.0 | 18.0 | 66.0 | 72.0 | 76.0 | 3.0 | 1624.0 | 457.0 | 63.0 | 224.0 | 266.0 | 263.0 | 880.0 | 82.0 | 14.0 | 0 | 1 | 0 |

| 3 | 496.0 | 141.0 | 20.0 | 65.0 | 78.0 | 37.0 | 11.0 | 5628.0 | 1575.0 | 225.0 | 828.0 | 838.0 | 354.0 | 200.0 | 11.0 | 3.0 | 1 | 0 | 1 |

| 4 | 321.0 | 87.0 | 10.0 | 39.0 | 42.0 | 30.0 | 2.0 | 396.0 | 101.0 | 12.0 | 48.0 | 46.0 | 33.0 | 805.0 | 40.0 | 4.0 | 1 | 0 | 1 |

| 5 | 594.0 | 169.0 | 4.0 | 74.0 | 51.0 | 35.0 | 11.0 | 4408.0 | 1133.0 | 19.0 | 501.0 | 336.0 | 194.0 | 282.0 | 421.0 | 25.0 | 0 | 1 | 0 |

# We can perform best subset selection by identifying the best model that contains a given number of predictors, where best is quantified using RSS.

# We'll define a helper function to outputs the best set of variables for each model size:

def processSubset(feature_set):

# Fit model on feature_set and calculate RSS

model = sm.OLS(y,X[list(feature_set)])

regr = model.fit()

RSS = ((regr.predict(X[list(feature_set)]) - y) ** 2).sum()

return {"model":regr, "RSS":RSS}X.columnsIndex(['AtBat', 'Hits', 'HmRun', 'Runs', 'RBI', 'Walks', 'Years', 'CAtBat',

'CHits', 'CHmRun', 'CRuns', 'CRBI', 'CWalks', 'PutOuts', 'Assists',

'Errors', 'League_N', 'Division_W', 'NewLeague_N'],

dtype='object')def getBest(k):

tic = time.time()

results = []

for combo in itertools.combinations(X.columns, k):

results.append(processSubset(combo))

# Wrap everything up in a nice dataframe

models = pd.DataFrame(results)

# Choose the model with the highest RSS,

# pandas.DataFrame.loc() access a group of rows and columns by label(s) or a boolean array

best_model = models.loc[models['RSS'].argmin()]

toc = time.time()

print("Processed", models.shape[0], "models on", k, "predictors in", (toc-tic), "seconds.")

# Return the best model, along with some other useful information about the model

return best_model# This returns a DataFrame containing the best model that we generated, along with some extra information about the model.

# Now we want to call that function for each number of predictors k :

models_best = pd.DataFrame(columns=["RSS", "model"])

tic = time.time()

for i in range(1,8):

models_best.loc[i] = getBest(i)

toc = time.time()

print("Total elapsed time:", (toc-tic), "seconds.")Processed 19 models on 1 predictors in 0.05321931838989258 seconds.

Processed 171 models on 2 predictors in 0.30821847915649414 seconds.

Processed 969 models on 3 predictors in 1.750037431716919 seconds.

Processed 3876 models on 4 predictors in 6.91033411026001 seconds.

Processed 11628 models on 5 predictors in 21.13918924331665 seconds.

Processed 27132 models on 6 predictors in 52.62217926979065 seconds.

Processed 50388 models on 7 predictors in 107.20377969741821 seconds.

Total elapsed time: 190.4251265525818 seconds.# Now we have one big DataFrame that contains the best models we've generated along with their RSS:

models_best| RSS | model | |

|---|---|---|

| 1 | 4.321393e+07 | <statsmodels.regression.linear_model.Regressio… |

| 2 | 3.073305e+07 | <statsmodels.regression.linear_model.Regressio… |

| 3 | 2.941071e+07 | <statsmodels.regression.linear_model.Regressio… |

| 4 | 2.797678e+07 | <statsmodels.regression.linear_model.Regressio… |

| 5 | 2.718780e+07 | <statsmodels.regression.linear_model.Regressio… |

| 6 | 2.639772e+07 | <statsmodels.regression.linear_model.Regressio… |

| 7 | 2.606413e+07 | <statsmodels.regression.linear_model.Regressio… |

#We can get a full rundown of a single model using the summary() function:

print(models_best.loc[2, "model"].summary()) OLS Regression Results

=======================================================================================

Dep. Variable: Salary R-squared (uncentered): 0.761

Model: OLS Adj. R-squared (uncentered): 0.760

Method: Least Squares F-statistic: 416.7

Date: Tue, 16 Feb 2021 Prob (F-statistic): 5.80e-82

Time: 15:26:02 Log-Likelihood: -1907.6

No. Observations: 263 AIC: 3819.

Df Residuals: 261 BIC: 3826.

Df Model: 2

Covariance Type: nonrobust

==============================================================================

coef std err t P>|t| [0.025 0.975]

------------------------------------------------------------------------------

Hits 2.9538 0.261 11.335 0.000 2.441 3.467

CRBI 0.6788 0.066 10.295 0.000 0.549 0.809

==============================================================================

Omnibus: 117.551 Durbin-Watson: 1.933

Prob(Omnibus): 0.000 Jarque-Bera (JB): 654.612

Skew: 1.729 Prob(JB): 7.12e-143

Kurtosis: 9.912 Cond. No. 5.88

==============================================================================

Notes:

[1] R² is computed without centering (uncentered) since the model does not contain a constant.

[2] Standard Errors assume that the covariance matrix of the errors is correctly specified.print(models_best.loc[7, "model"].summary()) OLS Regression Results

=======================================================================================

Dep. Variable: Salary R-squared (uncentered): 0.798

Model: OLS Adj. R-squared (uncentered): 0.792

Method: Least Squares F-statistic: 144.2

Date: Tue, 16 Feb 2021 Prob (F-statistic): 4.76e-85

Time: 15:27:23 Log-Likelihood: -1885.9

No. Observations: 263 AIC: 3786.

Df Residuals: 256 BIC: 3811.

Df Model: 7

Covariance Type: nonrobust

==============================================================================

coef std err t P>|t| [0.025 0.975]

------------------------------------------------------------------------------

Hits 1.6800 0.490 3.426 0.001 0.714 2.646

Walks 3.4000 1.196 2.843 0.005 1.045 5.755

CAtBat -0.3288 0.090 -3.665 0.000 -0.506 -0.152

CHits 1.3470 0.312 4.316 0.000 0.732 1.962

CHmRun 1.3494 0.415 3.248 0.001 0.531 2.167

PutOuts 0.2482 0.074 3.336 0.001 0.102 0.395

Division_W -111.9438 36.786 -3.043 0.003 -184.386 -39.502

==============================================================================

Omnibus: 108.568 Durbin-Watson: 2.008

Prob(Omnibus): 0.000 Jarque-Bera (JB): 808.968

Skew: 1.457 Prob(JB): 2.16e-176

Kurtosis: 11.082 Cond. No. 6.81e+03

==============================================================================

Notes:

[1] R² is computed without centering (uncentered) since the model does not contain a constant.

[2] Standard Errors assume that the covariance matrix of the errors is correctly specified.

[3] The condition number is large, 6.81e+03. This might indicate that there are

strong multicollinearity or other numerical problems.# Show the best 19-variable model (there's actually only one)

print(getBest(19)["model"].summary())Processed 1 models on 19 predictors in 0.007192373275756836 seconds.

OLS Regression Results

=======================================================================================

Dep. Variable: Salary R-squared (uncentered): 0.810

Model: OLS Adj. R-squared (uncentered): 0.795

Method: Least Squares F-statistic: 54.64

Date: Tue, 16 Feb 2021 Prob (F-statistic): 1.31e-76

Time: 15:30:00 Log-Likelihood: -1877.9

No. Observations: 263 AIC: 3794.

Df Residuals: 244 BIC: 3862.

Df Model: 19

Covariance Type: nonrobust

===============================================================================

coef std err t P>|t| [0.025 0.975]

-------------------------------------------------------------------------------

AtBat -1.5975 0.600 -2.663 0.008 -2.779 -0.416

Hits 7.0330 2.374 2.963 0.003 2.357 11.709

HmRun 4.1210 6.229 0.662 0.509 -8.148 16.390

Runs -2.3776 2.994 -0.794 0.428 -8.276 3.520

RBI -1.0873 2.613 -0.416 0.678 -6.234 4.059

Walks 6.1560 1.836 3.352 0.001 2.539 9.773

Years 9.5196 10.128 0.940 0.348 -10.429 29.468

CAtBat -0.2018 0.135 -1.497 0.136 -0.467 0.064

CHits 0.1380 0.678 0.204 0.839 -1.197 1.473

CHmRun -0.1669 1.625 -0.103 0.918 -3.367 3.033

CRuns 1.5070 0.753 2.001 0.047 0.023 2.991

CRBI 0.7742 0.696 1.113 0.267 -0.596 2.144

CWalks -0.7851 0.329 -2.384 0.018 -1.434 -0.137

PutOuts 0.2856 0.078 3.673 0.000 0.132 0.439

Assists 0.3137 0.220 1.427 0.155 -0.119 0.747

Errors -2.0463 4.350 -0.470 0.638 -10.615 6.522

League_N 86.8139 78.463 1.106 0.270 -67.737 241.365

Division_W -97.5160 39.084 -2.495 0.013 -174.500 -20.532

NewLeague_N -23.9133 79.361 -0.301 0.763 -180.234 132.407

==============================================================================

Omnibus: 97.217 Durbin-Watson: 2.024

Prob(Omnibus): 0.000 Jarque-Bera (JB): 626.205

Skew: 1.320 Prob(JB): 1.05e-136

Kurtosis: 10.083 Cond. No. 2.06e+04

==============================================================================

Notes:

[1] R² is computed without centering (uncentered) since the model does not contain a constant.

[2] Standard Errors assume that the covariance matrix of the errors is correctly specified.

[3] The condition number is large, 2.06e+04. This might indicate that there are

strong multicollinearity or other numerical problems.#we can access just the parts we need using the model's attributes. For example, if we want the R2 value:

models_best.loc[2, "model"].rsquared0.7614950002332872#We can examine these to try to select the best overall model. Let's start by looking at R2 across all our models:

# Gets the second element from each row ('model') and pulls out its rsquared attribute

models_best.apply(lambda row: row[1].rsquared, axis=1)1 0.664637

2 0.761495

3 0.771757

4 0.782885

5 0.789008

6 0.795140

7 0.797728

dtype: float64print(models_best.apply(lambda row: row[1].aic, axis=1))

print(models_best.apply(lambda row: row[1].aic, axis=1).argmin())

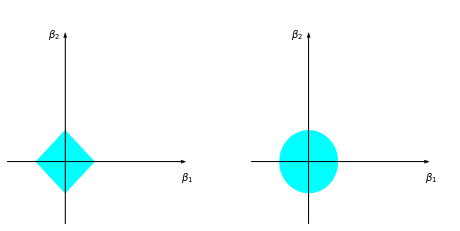

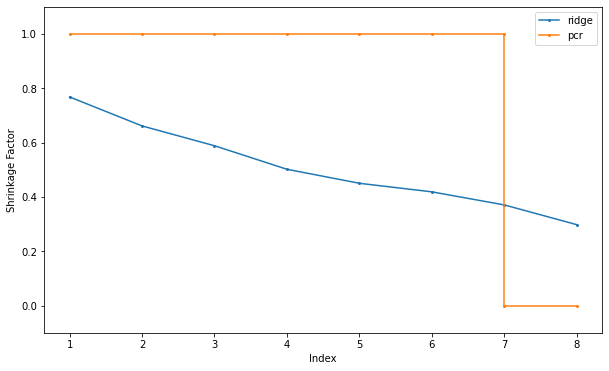

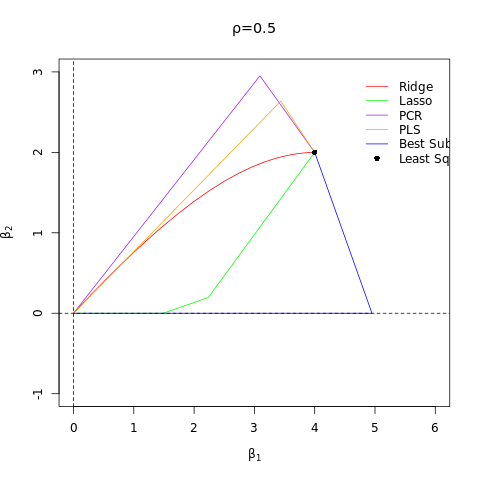

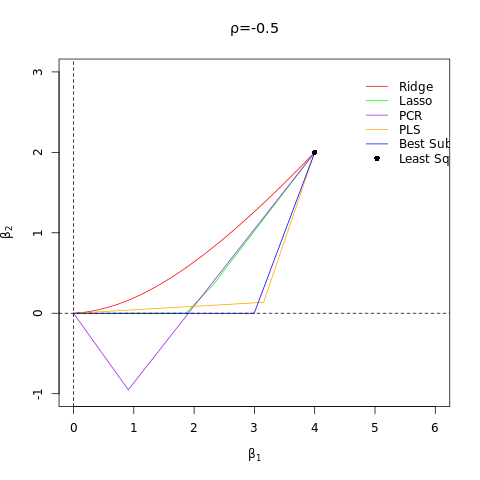

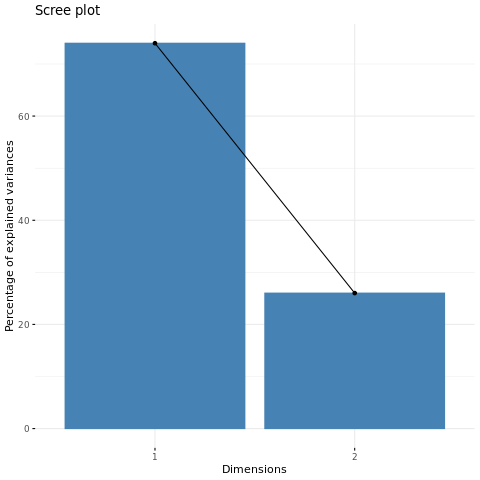

print(models_best.apply(lambda row: row[1].aic, axis=1).min())1 3906.865252